Push application containers to Google Artifact Registry

This tutorial shows how to use a Harness Continuous Integration (CI) pipeline to build and push application container images to Google Artifact Registry (GAR).

You'll learn how to:

- Use kaniko to build an application container image.

- Create projects in your Harness account.

- Add secrets to projects.

- Add a Google Cloud Platform (GCP) Connector to a project.

- Use a CI pipeline to build and push an application container image.

In this tutorial you'll build a simple Go application that calls https://httpbin.org/get and returns a JSON response, such as:

{
  "args": {},
  "headers": {
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-GB,en-US;q=0.9,en;q=0.8",
    "Host": "httpbin.org",
    "Referer": "https://httpbin.org/",
    "User-Agent": "go-resty/2.7.0 (https://github.com/go-resty/resty)",
    "X-Amzn-Trace-Id": "Root=1-63bd82a6-48984f670886c5f55890feea",
    "X-My-Header": "harness-tutorial-demo"
  },
  "origin": "192.168.1.1",
  "url": "https://httpbin.org/get"
}
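For orientation, a similar request can be reproduced from the command line. This is a minimal sketch rather than the tutorial application itself: it assumes curl is installed, and the function name httpbin_get is only illustrative. The header value is copied from the X-My-Header field in the sample response.

```shell
#!/bin/sh
# Hypothetical helper (not part of the tutorial repo): send a GET request
# similar to the one the sample application makes.
# The header value mirrors the "X-My-Header" field in the sample response.
httpbin_get() {
  url="${1:-https://httpbin.org/get}"
  curl --silent --show-error \
    --header "X-My-Header: harness-tutorial-demo" \
    "$url"
}

# Usage (requires network access):
#   httpbin_get
```

The User-Agent and trace headers in the response will differ from the sample, because they depend on the client and on the request.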

Prerequisites

In addition to a Harness account, you need the following accounts and tools:

- gcloud CLI

- Drone CLI to build the application locally

- Docker Desktop

- Terraform CLI

- A GitHub account where you can fork the tutorial repo

- A Google Cloud account

If you don't have a Harness account yet, you can create one for free at app.harness.io.

Configure a container registry

To avoid scenarios where builds only work on specific machines, you can use Docker containers to provide clean environments that run specified toolsets. This is a DevOps best practice that helps identify potential problems throughout development.

Drone by Harness is an open source CI platform that helps developers build and test on local machines without manually installing different tools for each language.

Before building the application, you need a location to store build artifacts, which are also known as container images. Externally-hosted locations are ideal because they are more accessible. Container image storage spaces are called Container Registries. Examples of container registry providers include Docker Hub, Quay.io, Harbor, Google Artifact Registry (GAR), and Elastic Container Registry (ECR).

Configure a Google Cloud service account

In this tutorial, you'll push an httpbin-get application container image to Google Artifact Registry. You'll also use Terraform scripts to provision Google Cloud resources that you need for this tutorial. To run the Terraform scripts you need to create a Google Cloud service account with the following roles:

- Artifact Registry Administrator: Allows the account to create and manage Docker artifact repositories.

- Service Account Admin: Allows the account to create other service accounts used in this tutorial.

- Service Account Key Admin: Allows the account to create service account keys used in this tutorial.

- Security Admin: Allows the account to set service account IAM policies for a Google Cloud project.

After creating the service account, download the Service Account Key JSON. In this tutorial, refer to the service account as $INFRA_SA and refer to the Service Account Key JSON as $GOOGLE_APPLICATION_CREDENTIALS. Activate your Service Account using these references.
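The service account setup described above could be scripted with the gcloud CLI roughly as follows. This is a sketch under assumptions: the project ID, the account name infra-sa, and the key file path are placeholders chosen for illustration, and the role IDs are the standard IAM identifiers for the four roles listed. The function is only defined here, so nothing is created until you call it.

```shell
#!/bin/sh
# Placeholders for illustration only; replace with your own values.
PROJECT_ID="${PROJECT_ID:-my-gcp-project}"
INFRA_SA_NAME="${INFRA_SA_NAME:-infra-sa}"
INFRA_SA="${INFRA_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
GOOGLE_APPLICATION_CREDENTIALS="${GOOGLE_APPLICATION_CREDENTIALS:-$HOME/.keys/infra-sa-key.json}"

# IAM role IDs for: Artifact Registry Administrator, Service Account Admin,
# Service Account Key Admin, and Security Admin.
INFRA_SA_ROLES="roles/artifactregistry.admin roles/iam.serviceAccountAdmin roles/iam.serviceAccountKeyAdmin roles/iam.securityAdmin"

create_infra_sa() {
  # Create the service account in the target project.
  gcloud iam service-accounts create "$INFRA_SA_NAME" --project="$PROJECT_ID" || return 1

  # Grant each role at the project level.
  for role in $INFRA_SA_ROLES; do
    gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member="serviceAccount:$INFRA_SA" --role="$role" || return 1
  done

  # Download a JSON key and activate the account for later gcloud calls.
  mkdir -p "$(dirname "$GOOGLE_APPLICATION_CREDENTIALS")"
  gcloud iam service-accounts keys create "$GOOGLE_APPLICATION_CREDENTIALS" \
    --iam-account="$INFRA_SA" || return 1
  export GOOGLE_APPLICATION_CREDENTIALS
  gcloud auth activate-service-account "$INFRA_SA" \
    --key-file="$GOOGLE_APPLICATION_CREDENTIALS"
}

# Usage (requires an authenticated gcloud session with owner-level access):
#   PROJECT_ID=<your project id> create_infra_sa
```

Exporting GOOGLE_APPLICATION_CREDENTIALS makes the key file visible to tools that use Application Default Credentials, such as Terraform's Google provider.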

Clone the tutorial repo

This tutorial uses a sample repo referred to as the tutorial repo or $TUTORIAL_GIT_REPO.

Clone the tutorial repo:

# clone the httpbin-get repository and change into the cloned folder
git clone https://github.com/harness-apps/httpbin-get.git \
  && cd "$(basename "$_" .git)"
# record the cloned repository folder as TUTORIAL_HOME
export TUTORIAL_HOME="$PWD"

GitHub CLI is useful for working with GitHub repositories on the command line.

Fork the tutorial repository:

gh repo fork

You can also fork the tutorial repo from the GitHub web UI.

Configure the Google Cloud infrastructure

The tutorial repo contains Terraform scripts that set up Google Cloud infrastructure. These scripts:

- Create a Google Artifact Registry repository called harness-tutorial.

- Create a Google service account called harness-tutorial-sa with permission to administer the harness-tutorial Google Artifact Registry repository and deploy services to Google Cloud Run.

The terraform.tfvars file contains all default variables. Edit project_id and region to suit your Google Cloud settings.

Deploy the infrastructure:

make init apply

A successful run generates a service account key file at $TUTORIAL_HOME/.keys/harness-tutorial-sa.

Verify the infrastructure

Run a simple Drone pipeline locally to verify your infrastructure. Verification ensures that you can build a container image and deploy it to Google Cloud Run.

The pipeline uses the following environment variables:

- PLUGIN_IMAGE: The name of the application container image.

- PLUGIN_SERVICE_ACCOUNT_JSON: The base64-encoded content of the Google Cloud service account key file.

Run the following script to generate a $TUTORIAL_HOME/.env file that contains the two environment variables:

$TUTORIAL_HOME/scripts/set-env.sh

The PLUGIN_ prefix allows variables to be implicitly passed as parameters, or settings, to Drone plugins.

You'll also refer to these variables later in this tutorial when building a Harness CI pipeline.
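To make the two variables concrete, here is a sketch of how such a .env file could be produced by hand. It is a hypothetical stand-in, not the contents of scripts/set-env.sh, which may work differently. The function name write_env_file and its arguments are assumptions; the image path layout follows the registry path that appears in this tutorial's build log.

```shell
#!/bin/sh
# Hypothetical stand-in for scripts/set-env.sh; the real script may differ.
# Usage: write_env_file <region> <project-id> <key-file> <env-file>
write_env_file() {
  region="$1"
  project="$2"
  key_file="$3"
  env_file="$4"

  # <region>-docker.pkg.dev/<project>/<repository>/<image>
  image="${region}-docker.pkg.dev/${project}/harness-tutorial/httpbin-get"

  # Base64-encode the key and strip line wraps so the value stays on one line.
  encoded="$(base64 < "$key_file" | tr -d '\n')"

  {
    printf 'PLUGIN_IMAGE=%s\n' "$image"
    printf 'PLUGIN_SERVICE_ACCOUNT_JSON=%s\n' "$encoded"
  } > "$env_file"
}

# Usage:
#   write_env_file <region> <project-id> <path to key file> "$TUTORIAL_HOME/.env"
```

Because the resulting file contains credentials, keep it out of version control.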

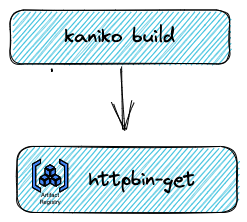

Next, you need to build and push the Go application. This uses a simple two-step pipeline. The first step uses kaniko to build an image. The second step pushes the image to the Google Artifact Registry called harness-tutorial/httpbin-get.

Run the following to build and push the image to the Google Artifact Registry:

drone exec --env-file=.env

This can take some time to build and push. Drone tries to pull container images if they don't already exist.

A successful run produces output similar to the following:

...
[build and push:41] INFO[0110] Taking snapshot of files...
[build and push:42] INFO[0110] EXPOSE 8080
[build and push:43] INFO[0110] Cmd: EXPOSE
[build and push:44] INFO[0110] Adding exposed port: 8080/tcp
[build and push:45] INFO[0110] CMD ["/app"]
[build and push:46] INFO[0110] Pushing image to <your gcp region>-docker.pkg.dev/<your google cloud project>/harness-tutorial/httpbin-get
[build and push:47] INFO[0113] Pushed <your gcp region>-docker.pkg.dev/<your google cloud project>/harness-tutorial/httpbin-get@sha256:67cca19fd29b9c49bb09e5cd2d50c4f447bdce874b11c87c8aae4c3171e659e4
...

To check the pushed image, navigate to https://$PLUGIN_IMAGE.
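If you no longer have PLUGIN_IMAGE set in your shell, the URL can be derived from the generated file. The helper below is a sketch: the name image_url is illustrative, and it assumes the .env file holds plain KEY=value lines.

```shell
#!/bin/sh
# Hypothetical helper: print the browser URL for the image named in an env file.
# Assumes simple KEY=value lines, one per variable.
image_url() {
  env_file="${1:-.env}"
  image="$(sed -n 's/^PLUGIN_IMAGE=//p' "$env_file")"
  if [ -z "$image" ]; then
    echo "PLUGIN_IMAGE not found in $env_file" >&2
    return 1
  fi
  printf 'https://%s\n' "$image"
}

# Usage:
#   image_url "$TUTORIAL_HOME/.env"
```

As a command-line alternative to the browser, `gcloud artifacts docker images list` accepts the same image path (without the https:// prefix) and should list the pushed digests, provided your active gcloud account can read the repository.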

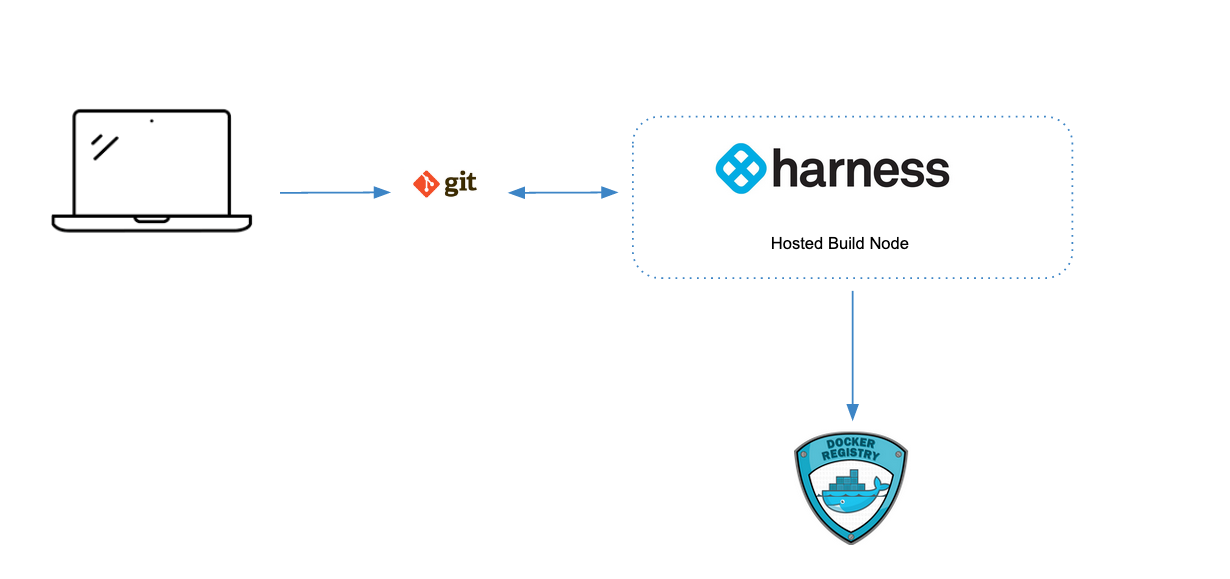

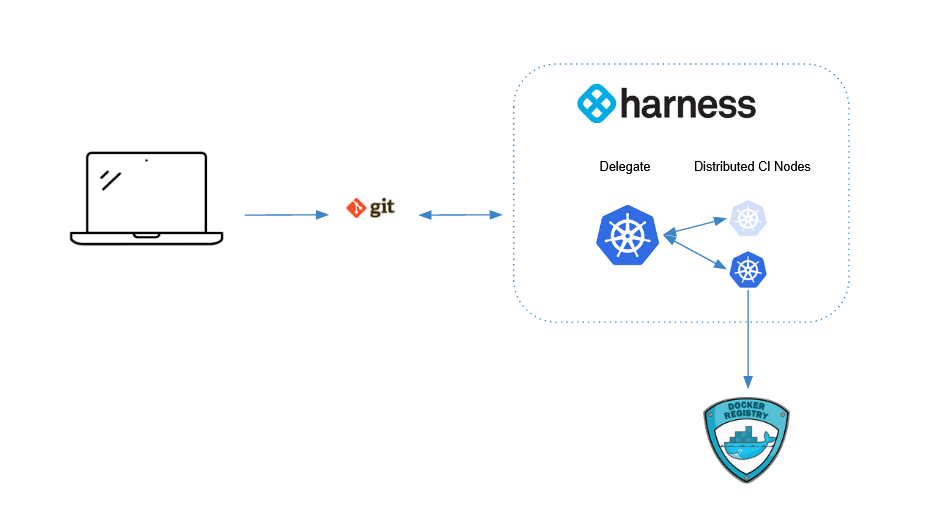

If you monitored your machine while running drone exec, you may have noticed a temporary slowdown. This might not be a problem for a single engineer, but scaling this process up to dozens, hundreds, or even thousands of engineers can strain system resources. Fortunately, modern continuous integration platforms use distributed nodes to support infrastructure at scale. Harness CI supports scaling and helps you externalize, centralize, and automate the build processes, as demonstrated in the next part of this tutorial.

Build a Harness CI pipeline

The drone exec command you ran in the previous section is adequate while you're learning or building locally for yourself. However, when working on a team to deliver enterprise applications, you need to offload, centralize, and automate this process. With Harness CI, you can create pipelines that make your build processes repeatable, consistent, and distributed.

The rest of this tutorial shows how to create a Harness CI pipeline that mimics the local drone exec steps that build and push an application container image to Google Artifact Registry.

While the Harness platform has several modules, this tutorial focuses on the Continuous Integration (CI) module. If you don't already have a Harness account, sign up for a Harness account.

Build infrastructure hosting options

Pipelines require build infrastructure to run. When you create your own pipelines, you can use either Harness-hosted infrastructure or bring your own build infrastructure. This tutorial uses Harness-hosted infrastructure, also called Harness Cloud.

Harness Cloud uses Harness-hosted machines to run builds. Harness maintains and upgrades these machines, which gives you more time to focus on development.

With self-hosted build hardware, your pipelines run on your local machines or your Kubernetes clusters. To learn about self-hosted options, go to Set up build infrastructure.

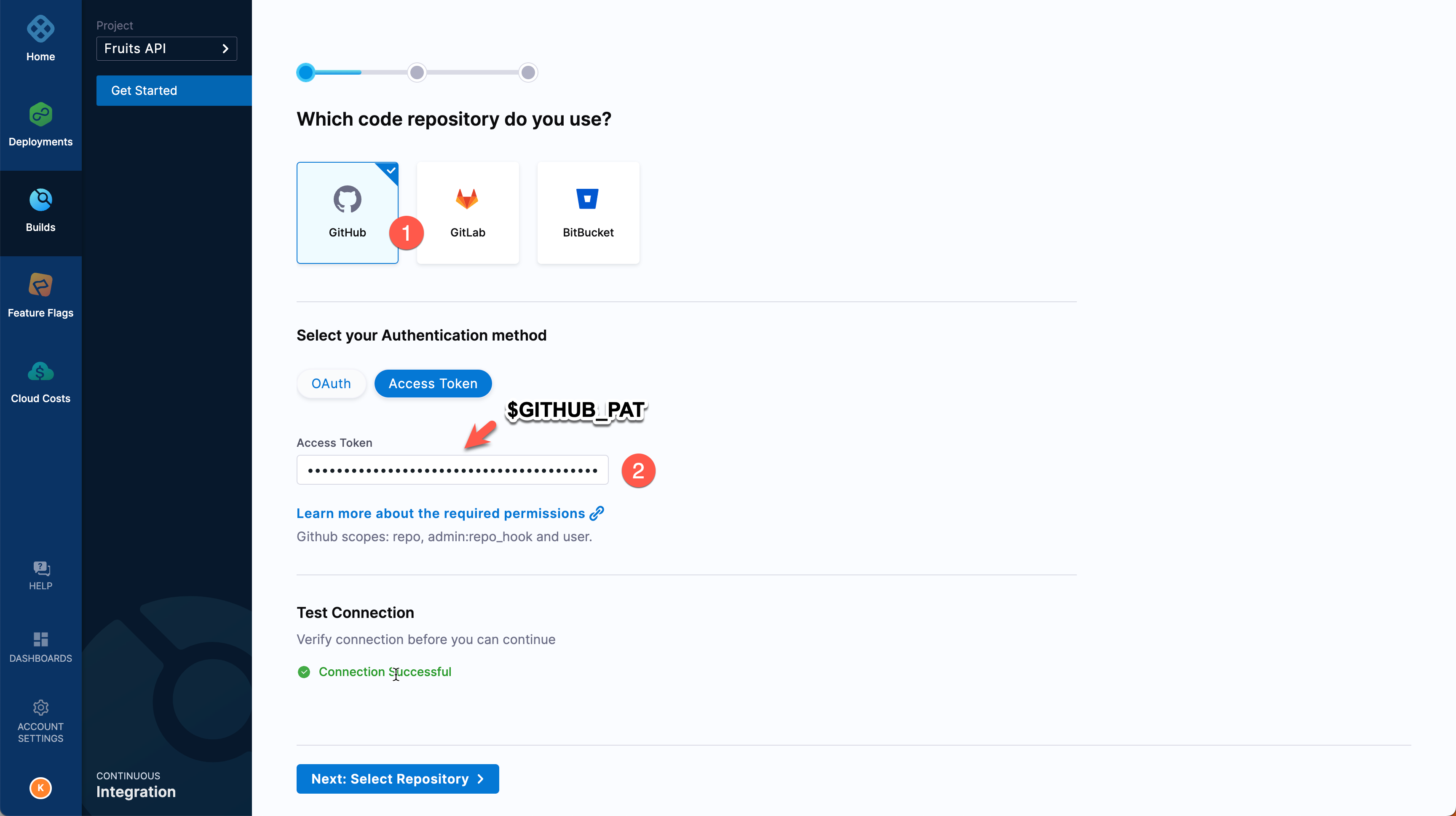

GitHub personal access token

For this tutorial, Harness needs access to your fork of the tutorial repo on GitHub. GitHub personal access tokens are the preferred mode for providing GitHub credentials.

The GitHub documentation explains how to Create a personal access token.

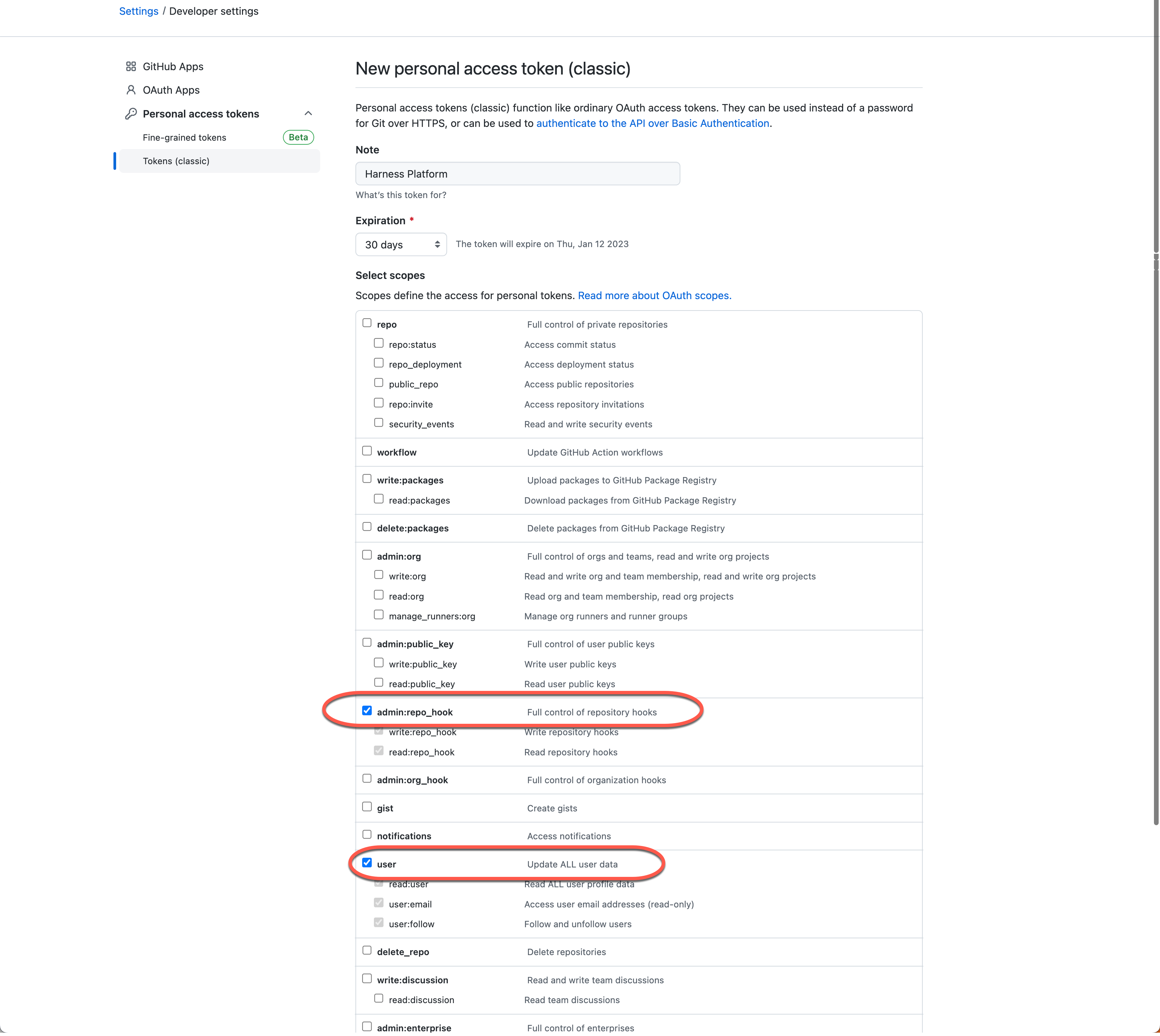

If you are using an existing personal access token, make sure it has the admin:repo_hook and user scopes.

The token is only displayed once. Copy the token and save it to your password manager or another secure place. The rest of this tutorial refers to this token value as $GITHUB_PAT.
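One way to confirm which scopes an existing token carries is to inspect the X-OAuth-Scopes response header that the GitHub API returns for classic personal access tokens. The sketch below assumes curl and awk are available and that $GITHUB_PAT is exported in your shell; the function names are illustrative. Fine-grained tokens may not report scopes this way.

```shell
#!/bin/sh
# Extract the X-OAuth-Scopes value from HTTP response headers read on stdin.
# Header names are matched case-insensitively; carriage returns are stripped.
parse_scopes() {
  tr -d '\r' | awk -F': ' 'tolower($1) == "x-oauth-scopes" { print $2 }'
}

# Hypothetical helper: ask the GitHub API which scopes $GITHUB_PAT carries.
github_pat_scopes() {
  curl --silent --head \
    --header "Authorization: Bearer $GITHUB_PAT" \
    https://api.github.com/user | parse_scopes
}

# Usage (requires network access and an exported GITHUB_PAT):
#   github_pat_scopes
```

Splitting the header parsing from the network call keeps the parsing step easy to check offline with sample header text.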

Create a project

In the Harness Platform, you declare and configure resources, such as pipelines, secrets, and connectors. The availability of these resources depends on the scope where the resource was declared. Resources can be available across an entire account, to an organization within an account, or limited to a single project. For this tutorial, you'll create all resources at the project scope.

Log in to the Harness account that you created earlier and create a project.

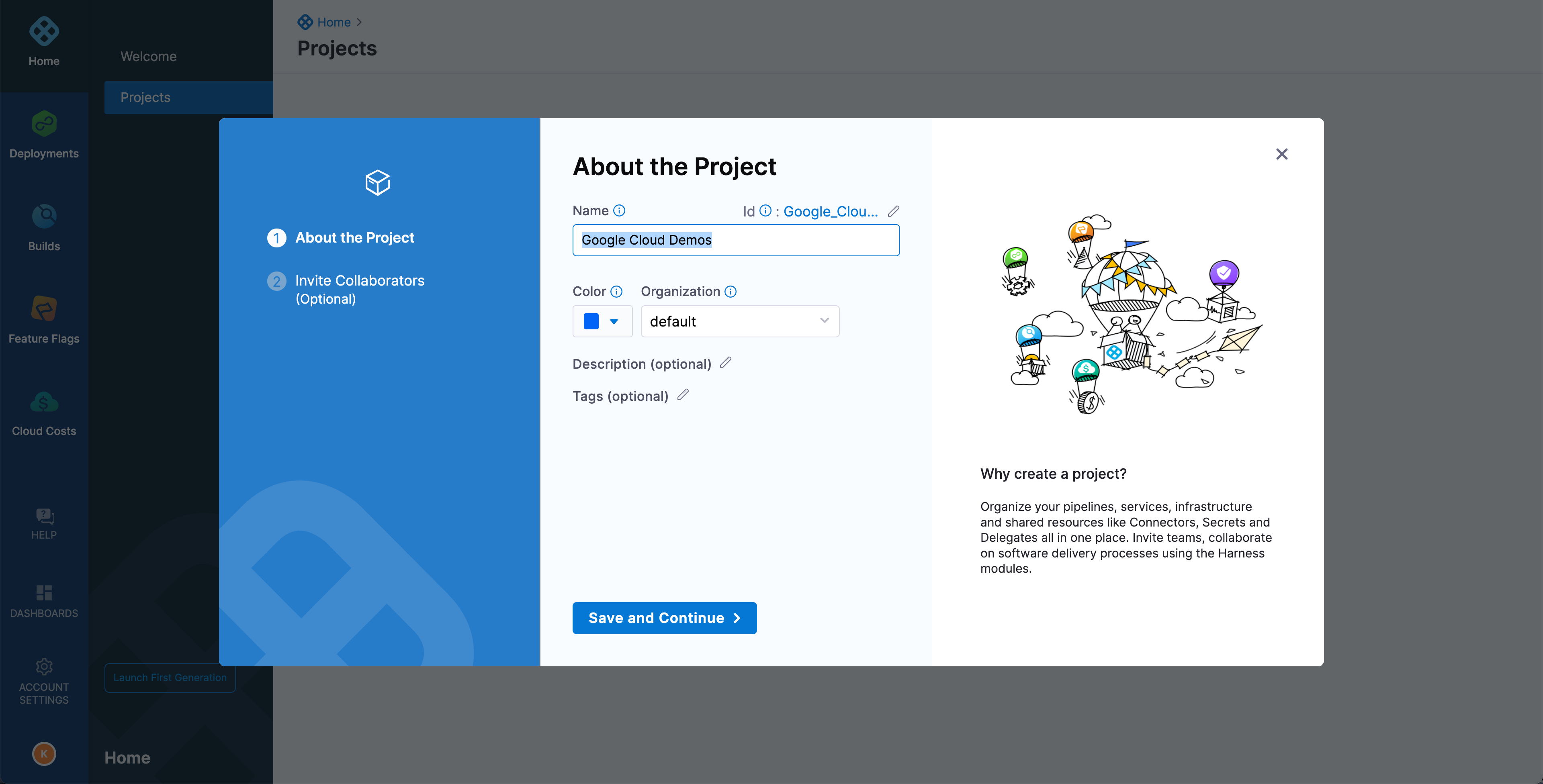

Name the new project Google Cloud Demos, leave other options as their defaults, and then select Save and Continue.

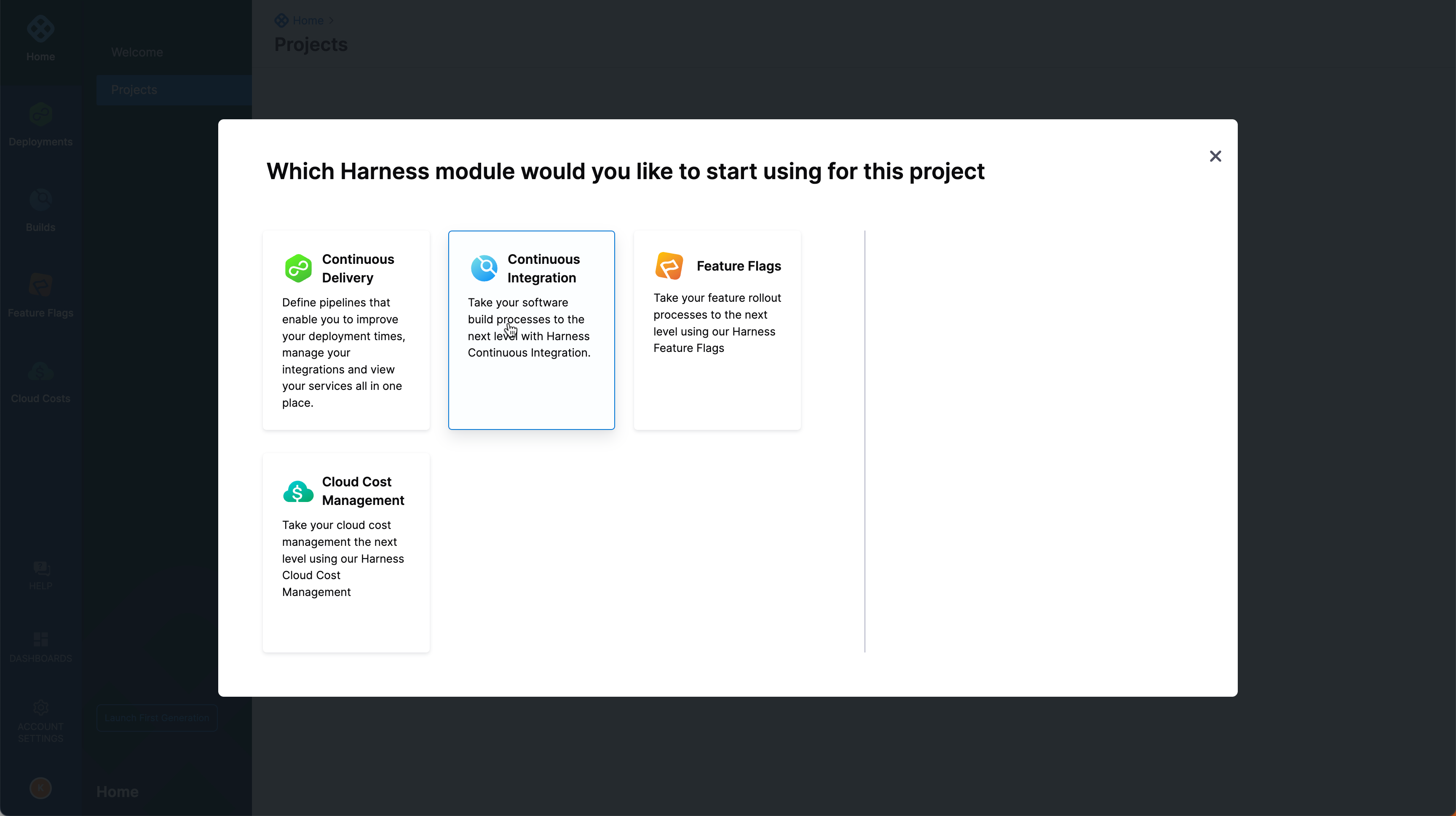

Select the Continuous Integration module.

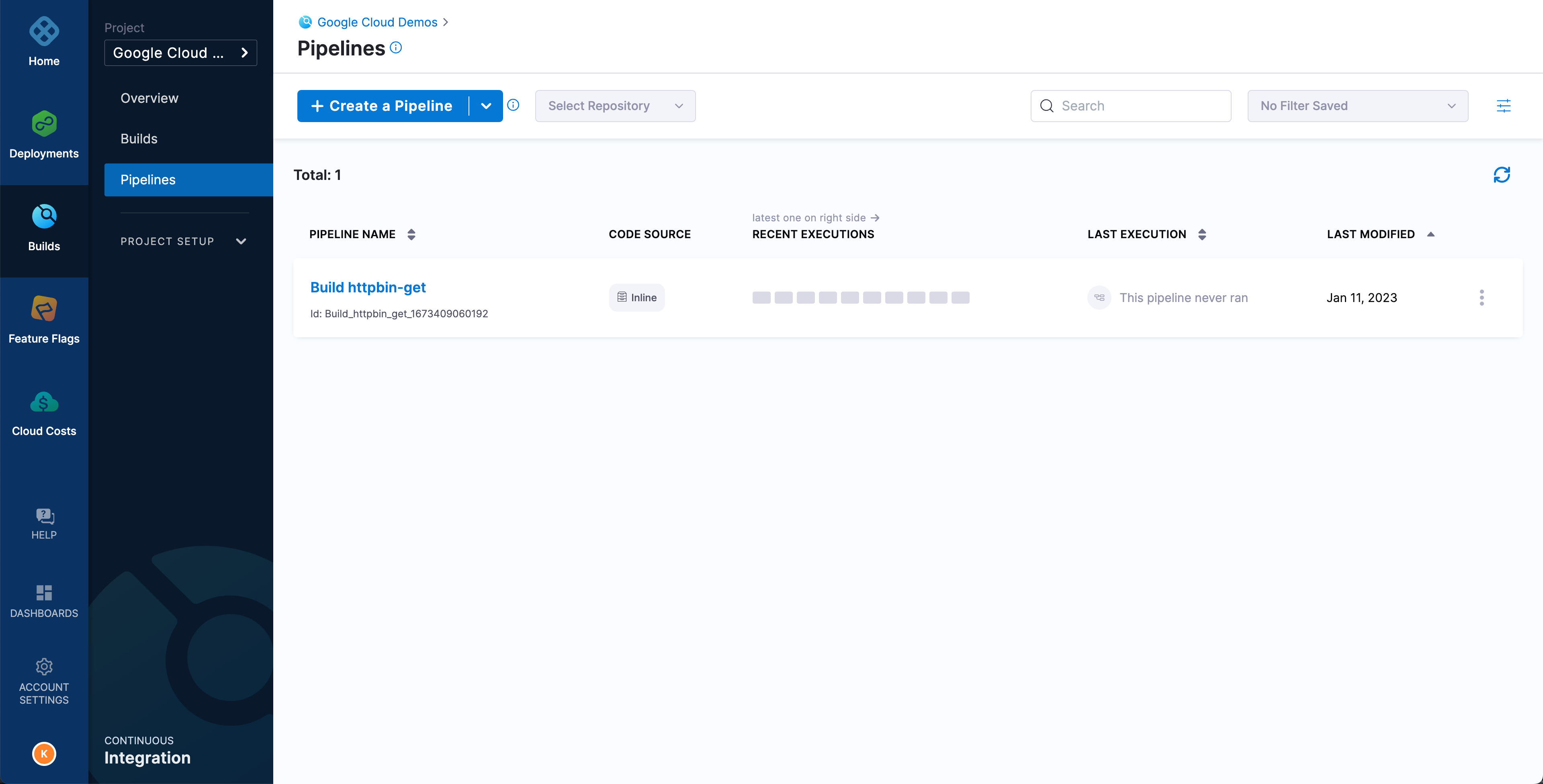

Create a pipeline

The Harness CI pipeline wizard creates a basic pipeline for you.

Select Get Started to begin.

Select GitHub as the repository to use, select Access token as the authentication method, input your GitHub personal access token ($GITHUB_PAT) in the Access Token field, and select Test Connection to verify your credentials.

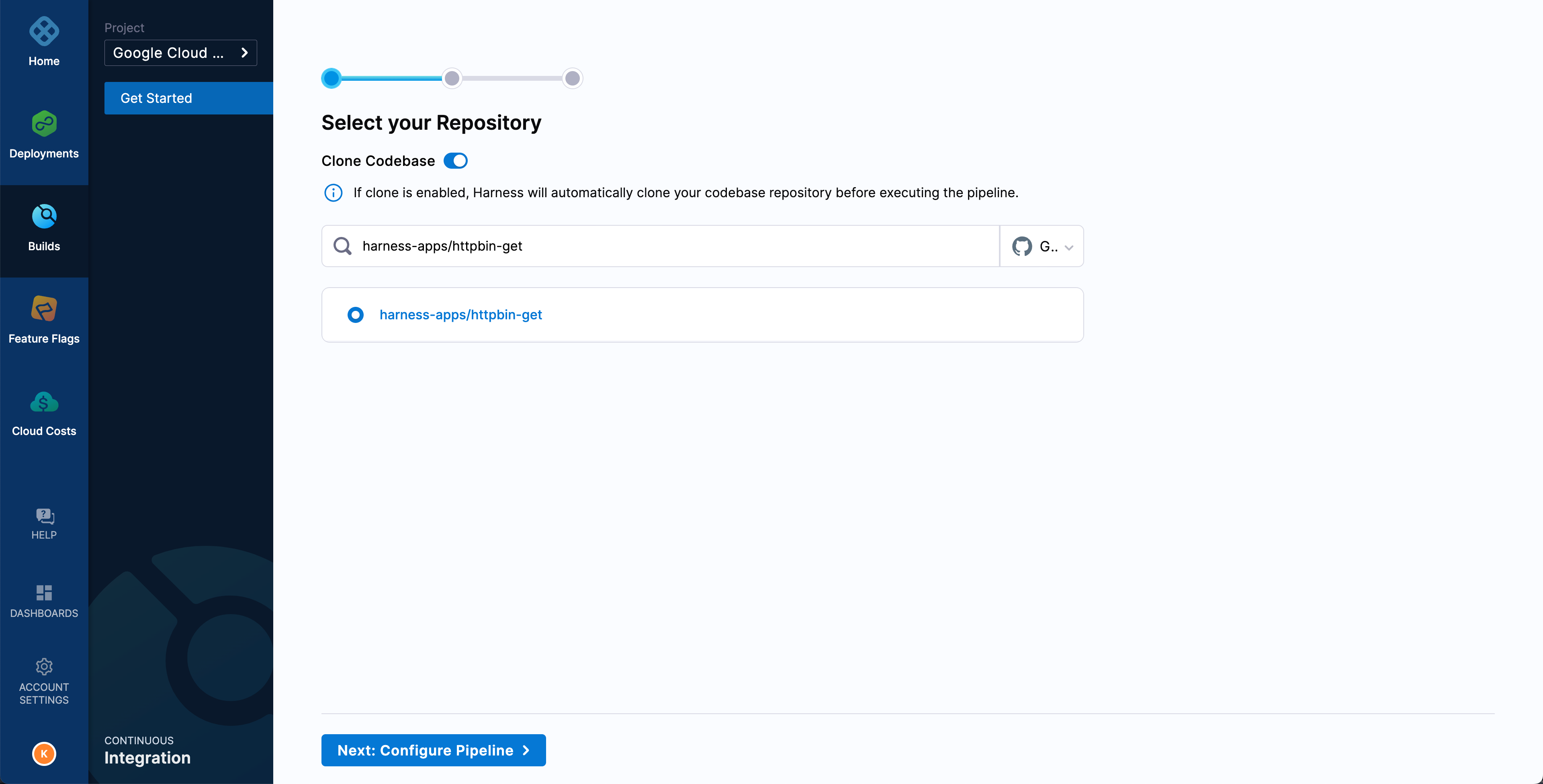

Select Next: Select Repository, choose your fork of the tutorial repo httpbin-get, and then select Next: Configure Pipeline.

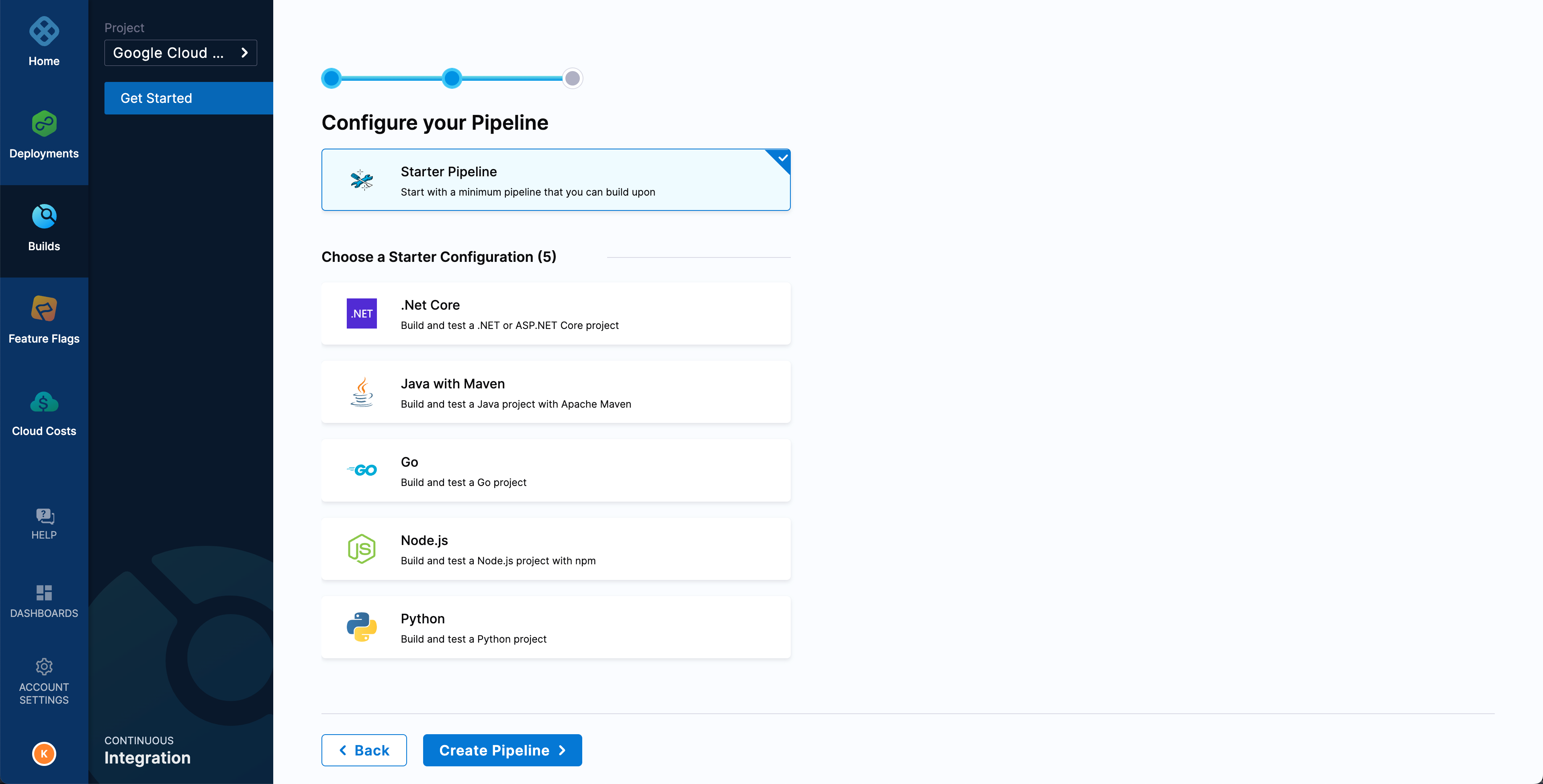

Select Starter Pipeline or one of the starter configurations. Since this tutorial uses a Go application, you could use the Go starter configuration. Select Create Pipeline.

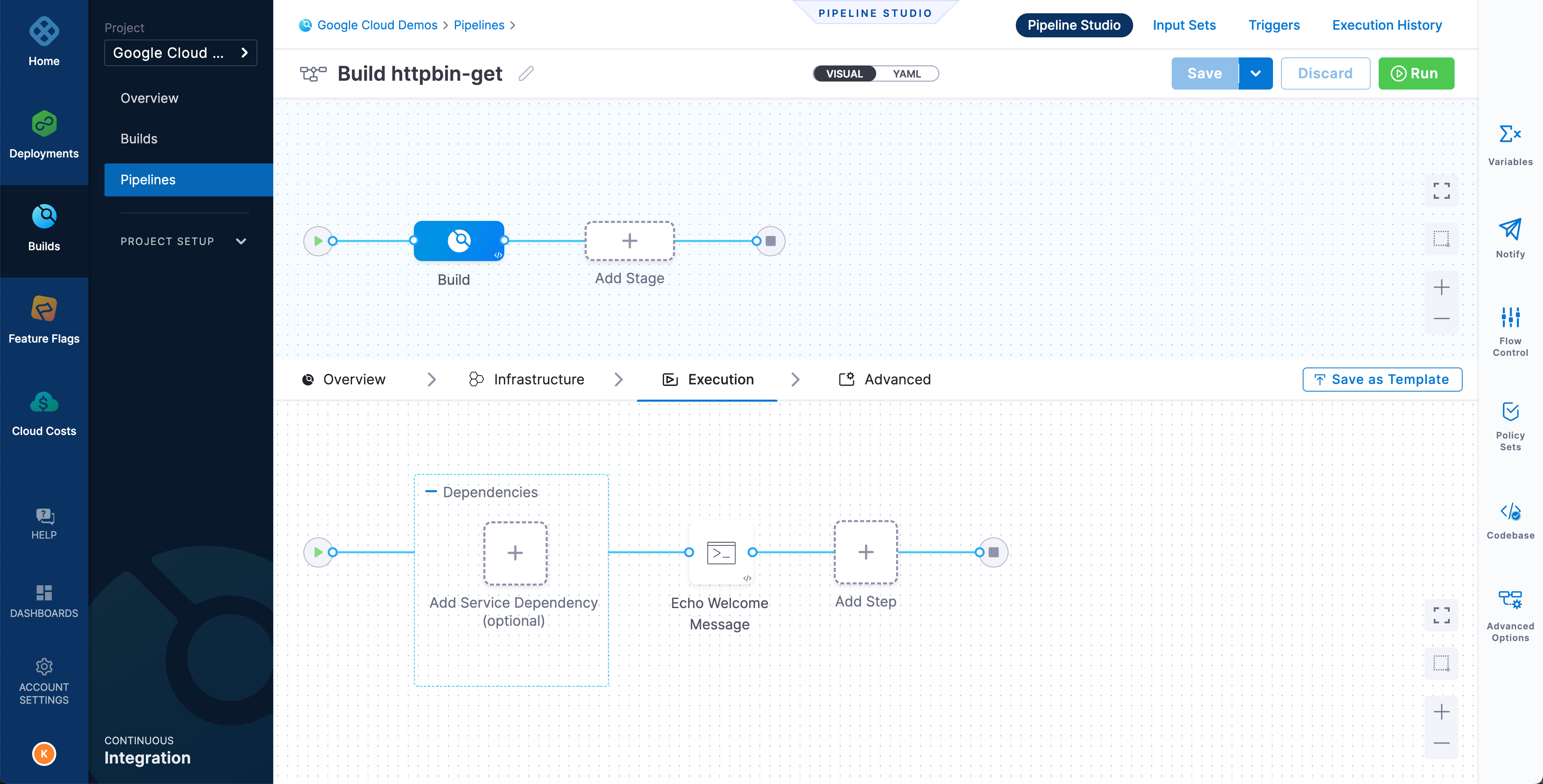

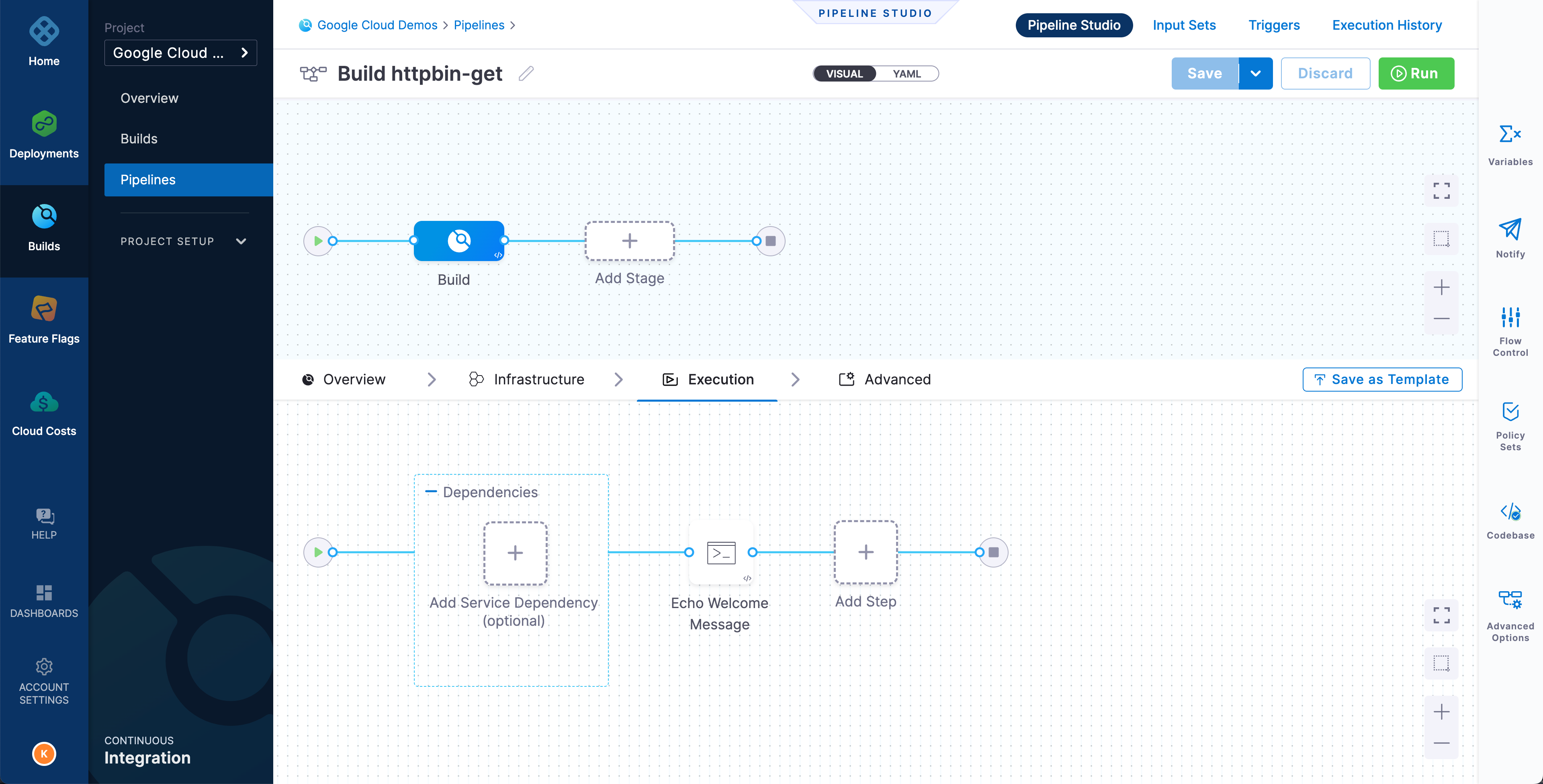

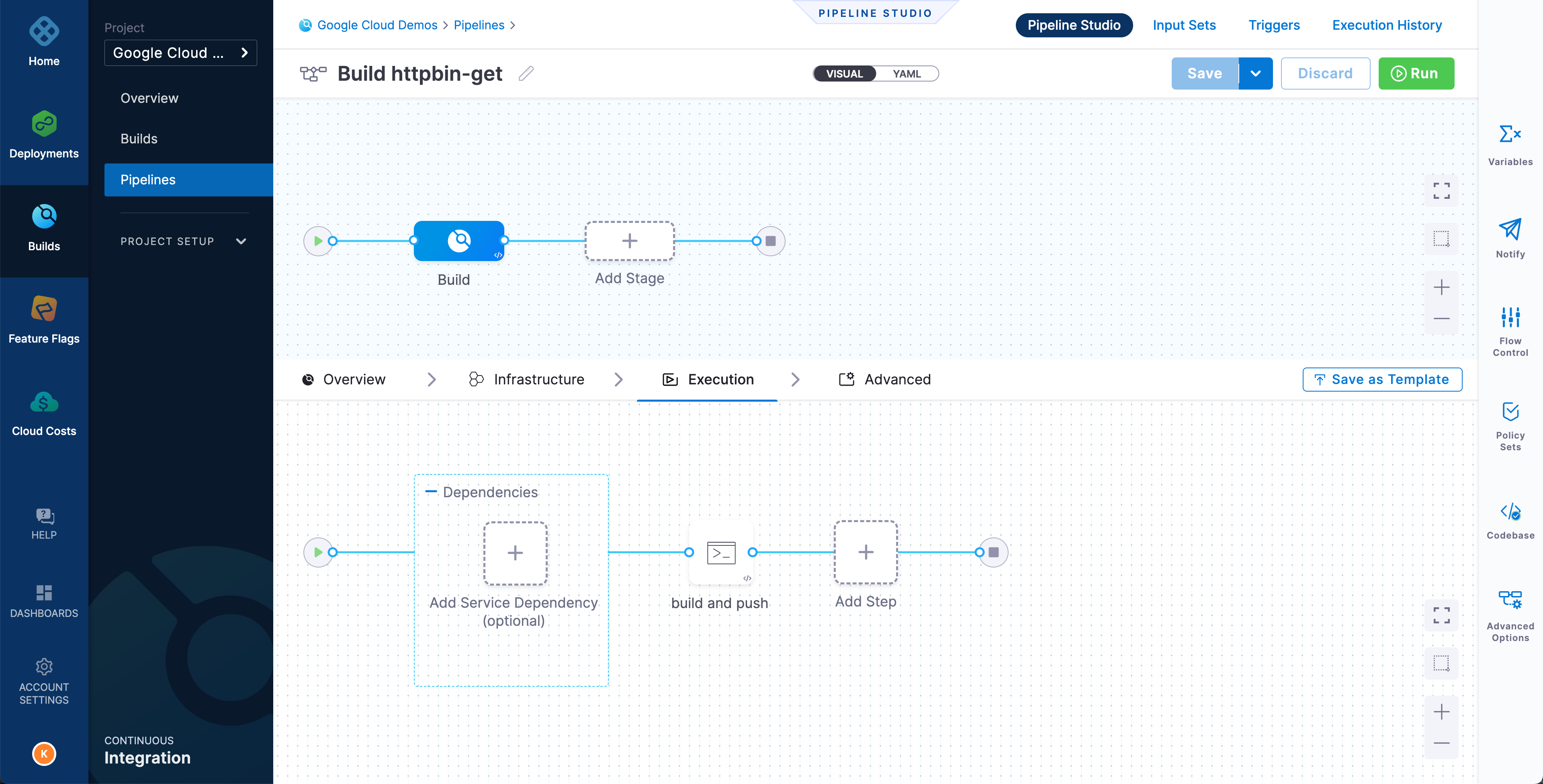

You can use either the visual or YAML editor to add pipeline steps. This tutorial uses the visual editor.

Initially, you will have a single stage, called Build, and a single step, called Echo Welcome Message. You will modify this stage so that the pipeline builds and pushes an image to Google Artifact Registry. However, first you must configure additional resources that the steps require, namely secrets and connectors.

Add a Google service account secret key

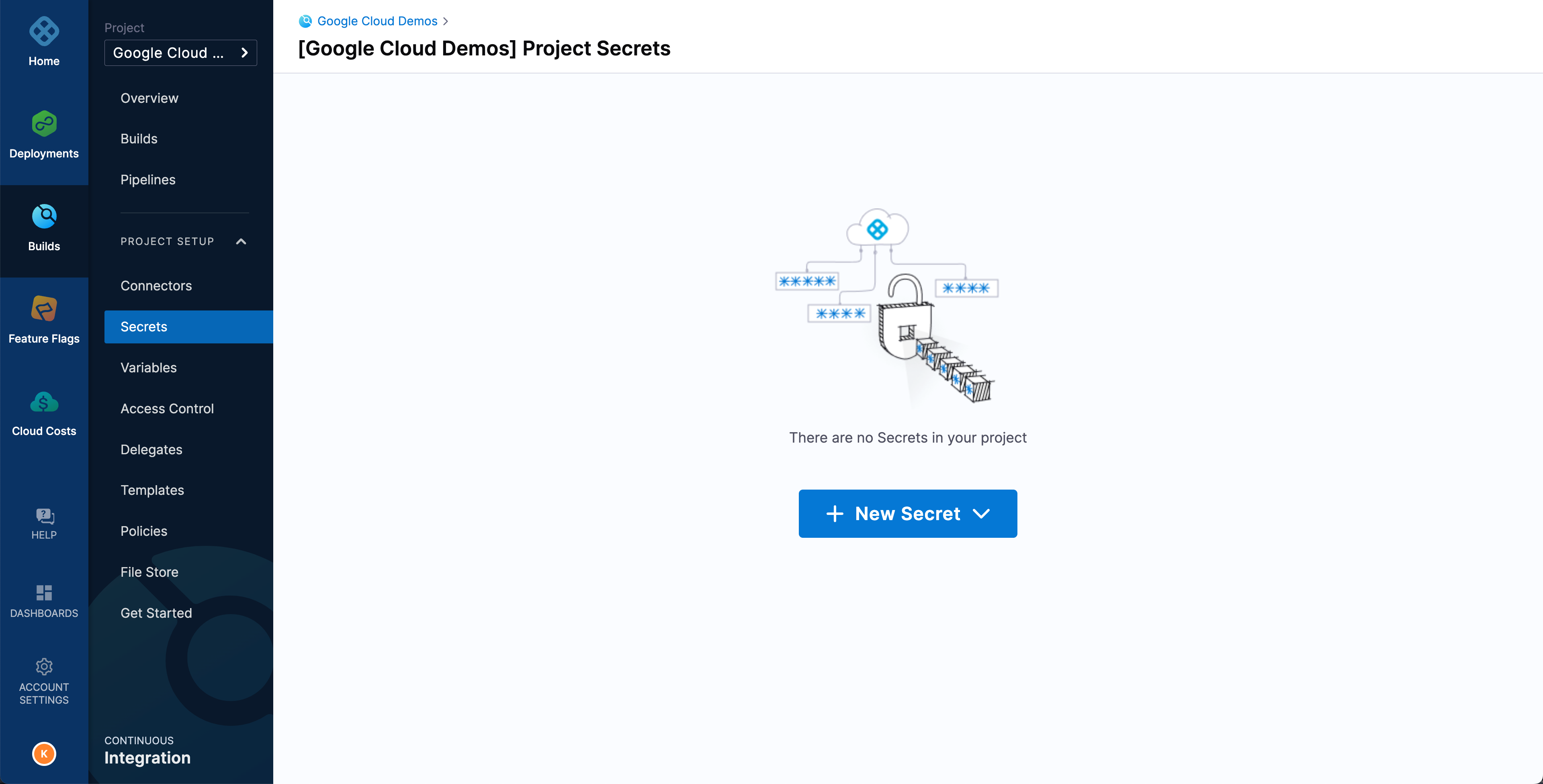

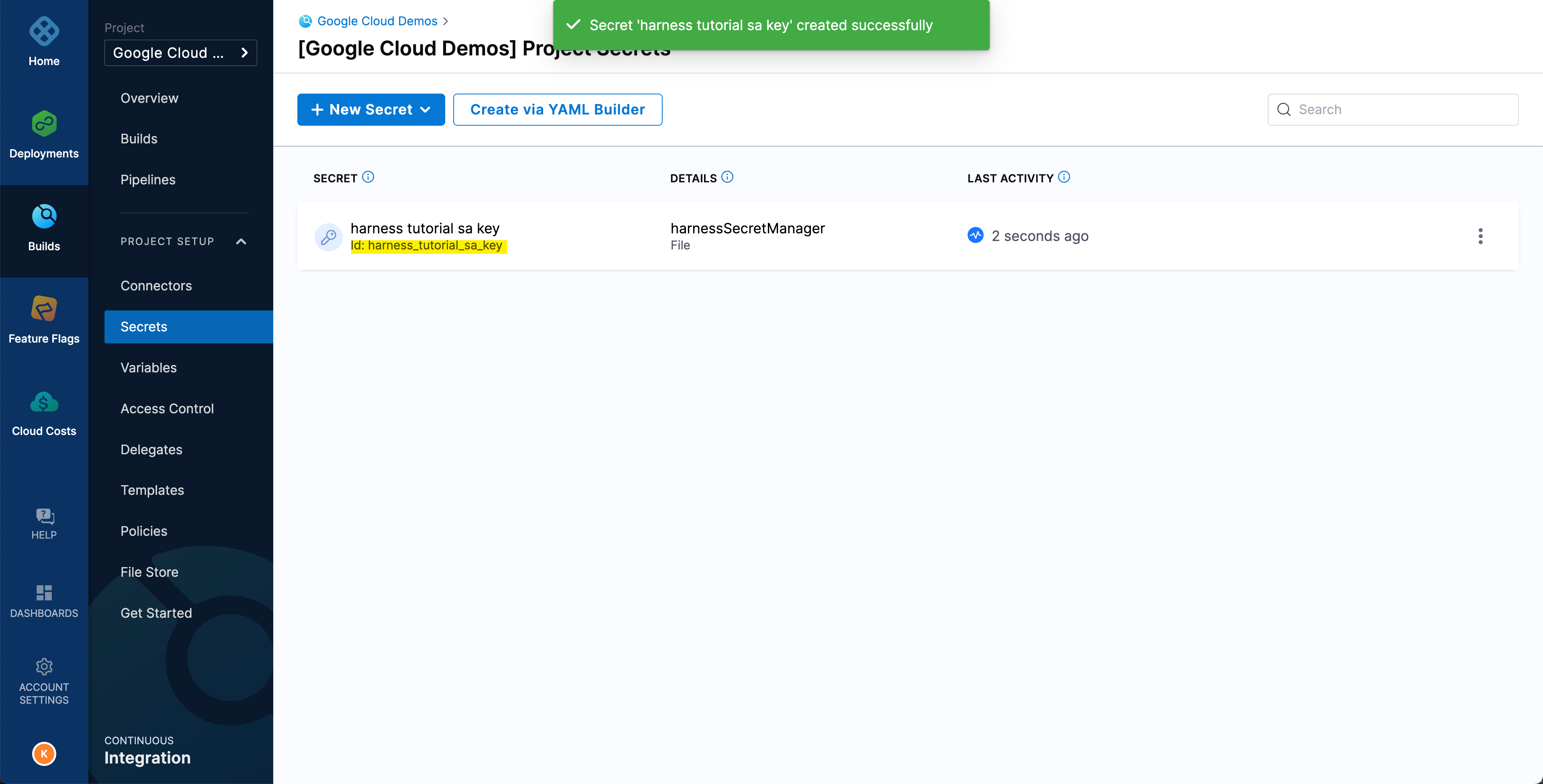

Under Project Setup, select Secrets.

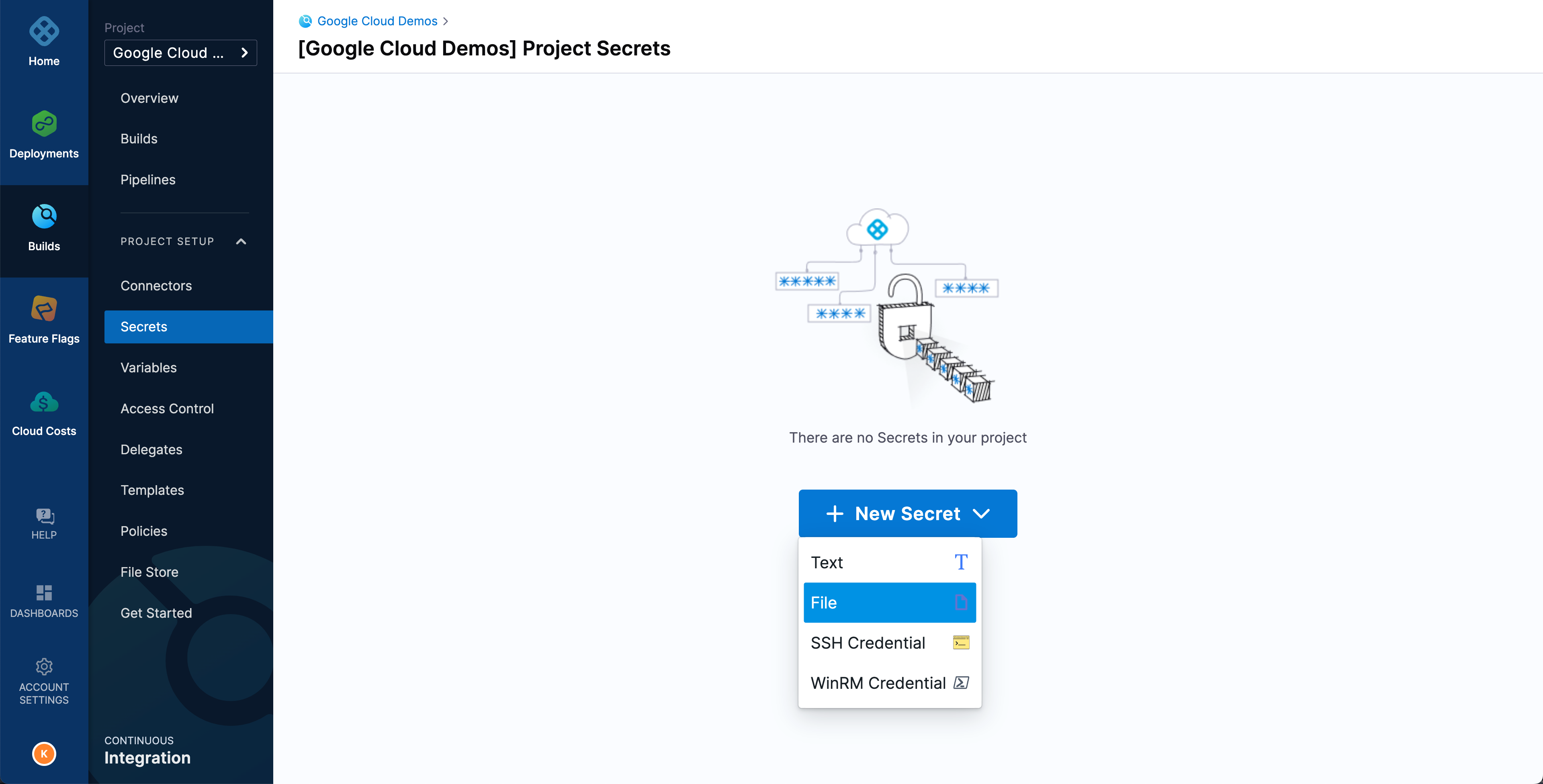

Select New Secret, and then select File.

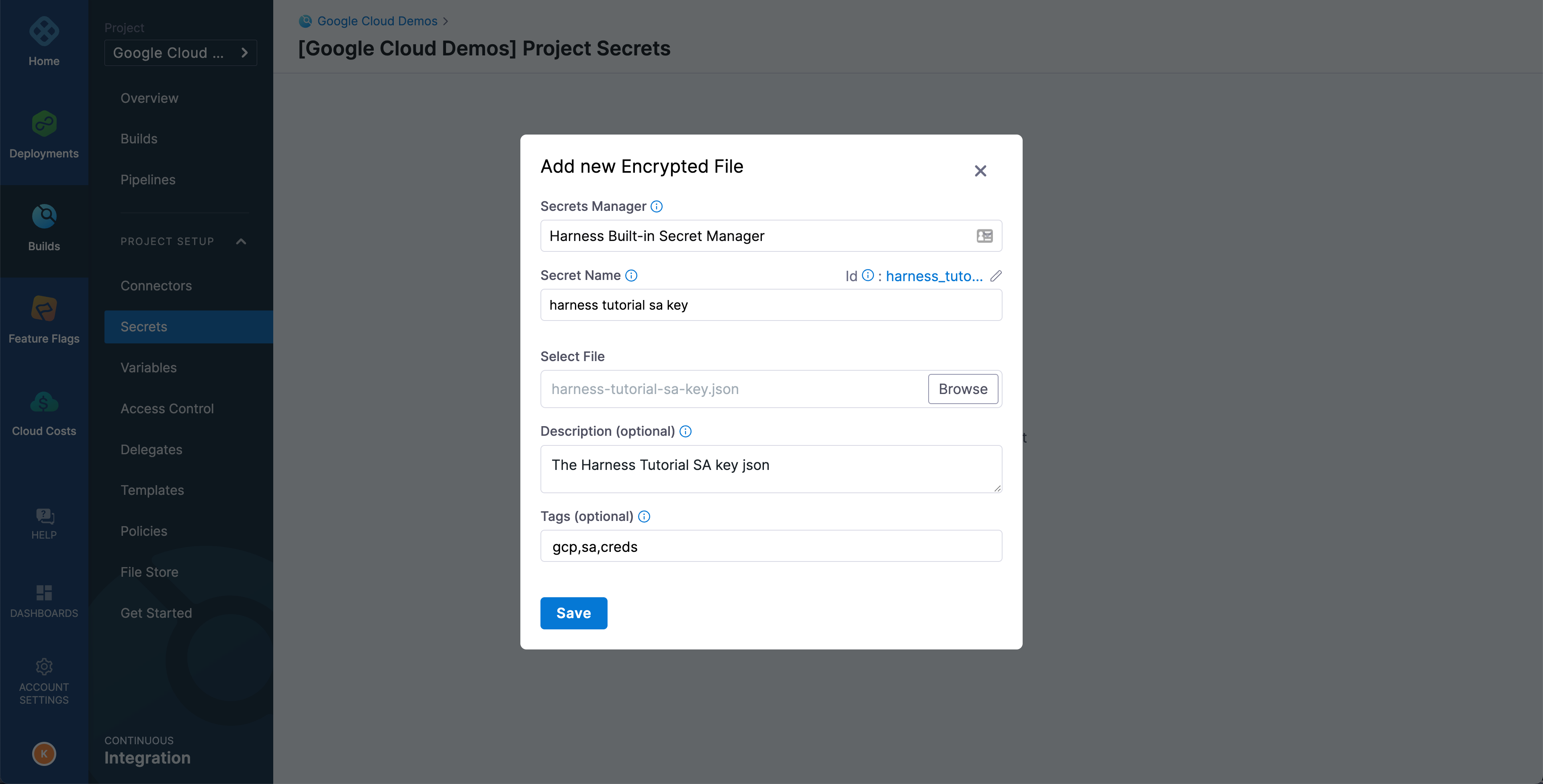

On the Add new Encrypted File window, populate the fields as described below, and then select Save.

- Secrets Manager: Harness Built-in Secret Manager

- Secret Name: harness tutorial sa key

- Select File: Choose the $TUTORIAL_HOME/.keys/harness-tutorial-sa-key.json file

- Description and Tags: Optional

On the secrets list, make a note of the id for the harness tutorial sa key. You need this id later for your CI pipeline.

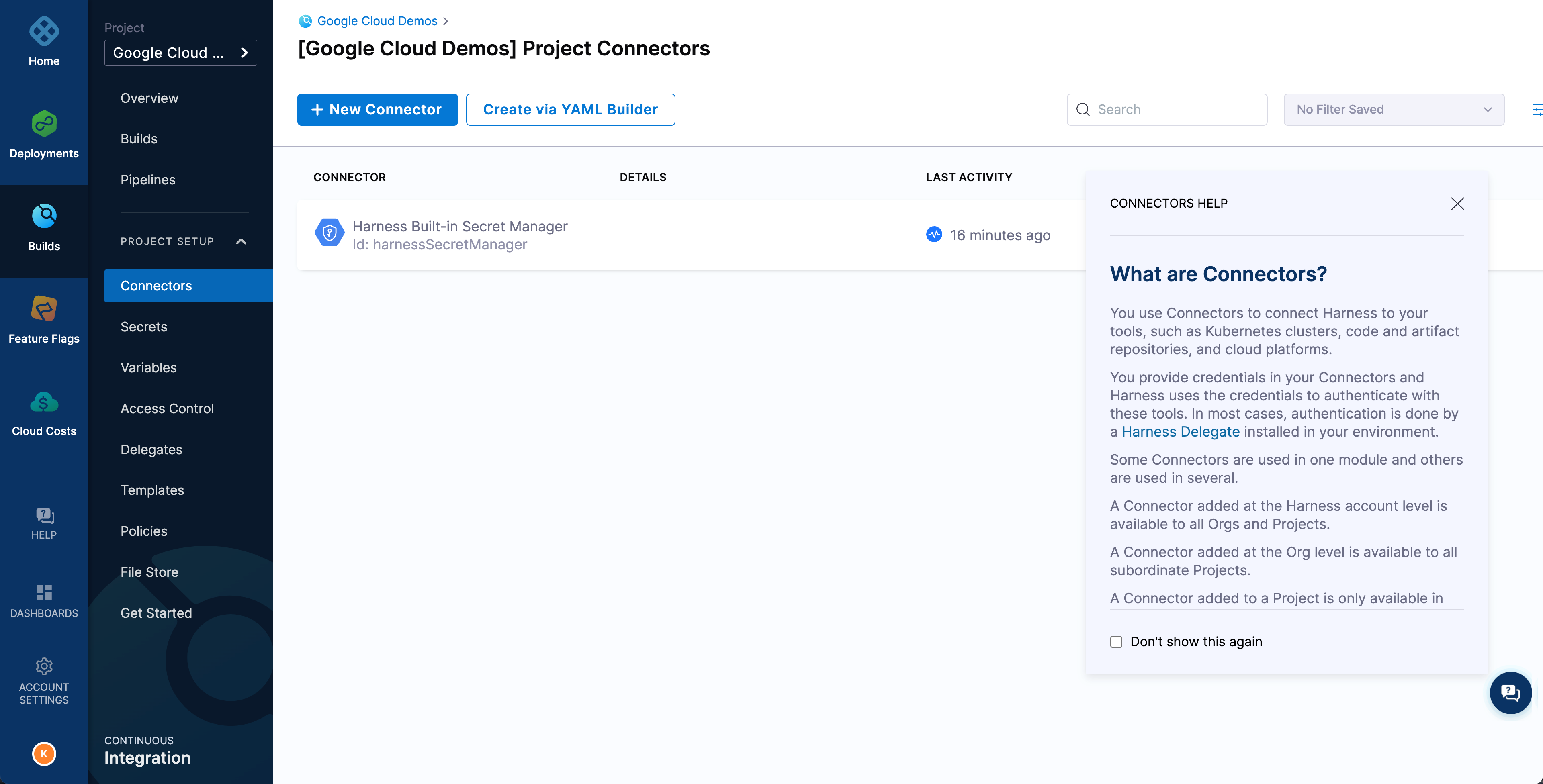

Create a Google Cloud Platform (GCP) connector

You must add a connector that allows your build infrastructure to connect to Google Cloud Platform.

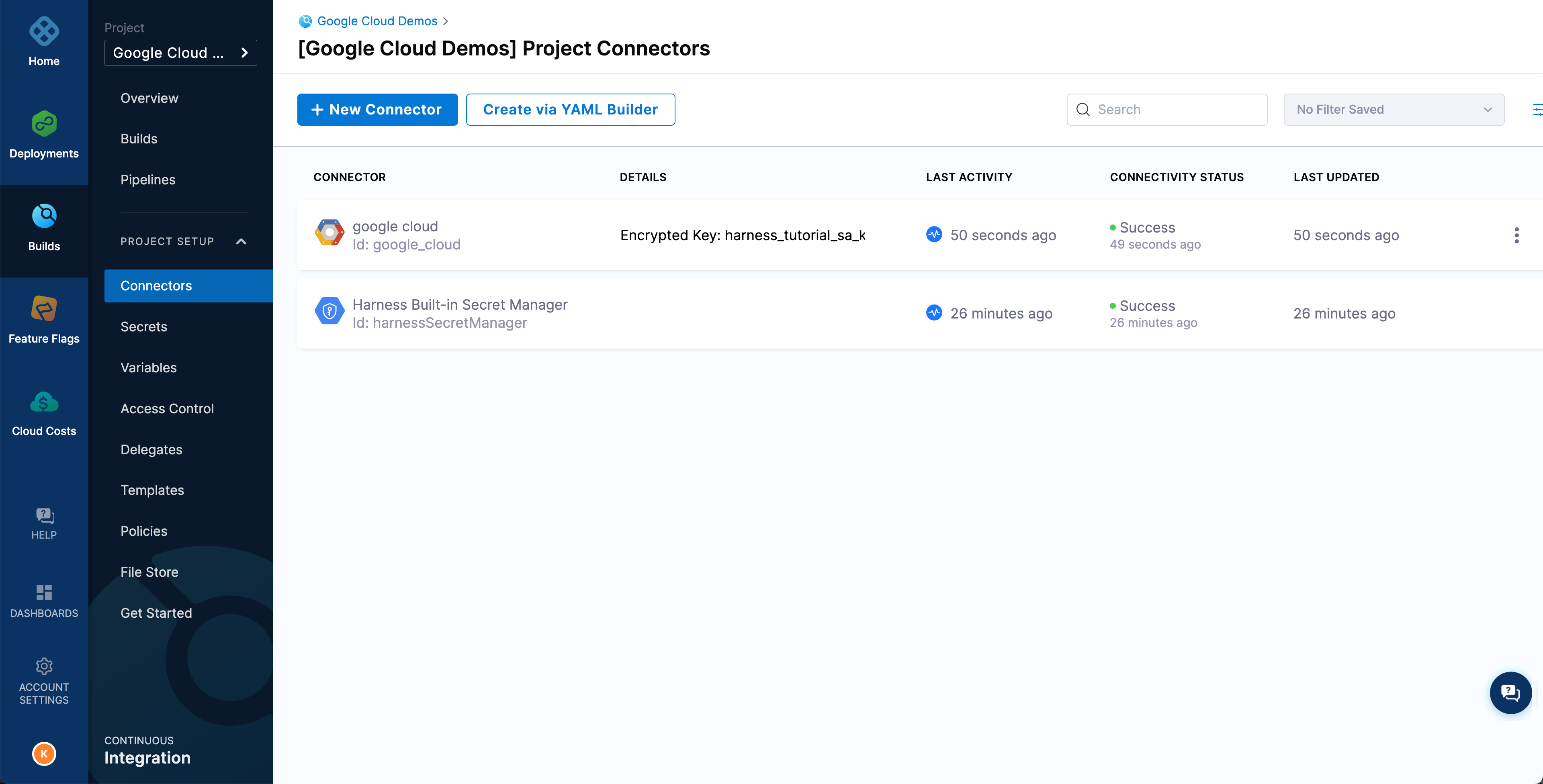

Under Project Setup, select Connectors.

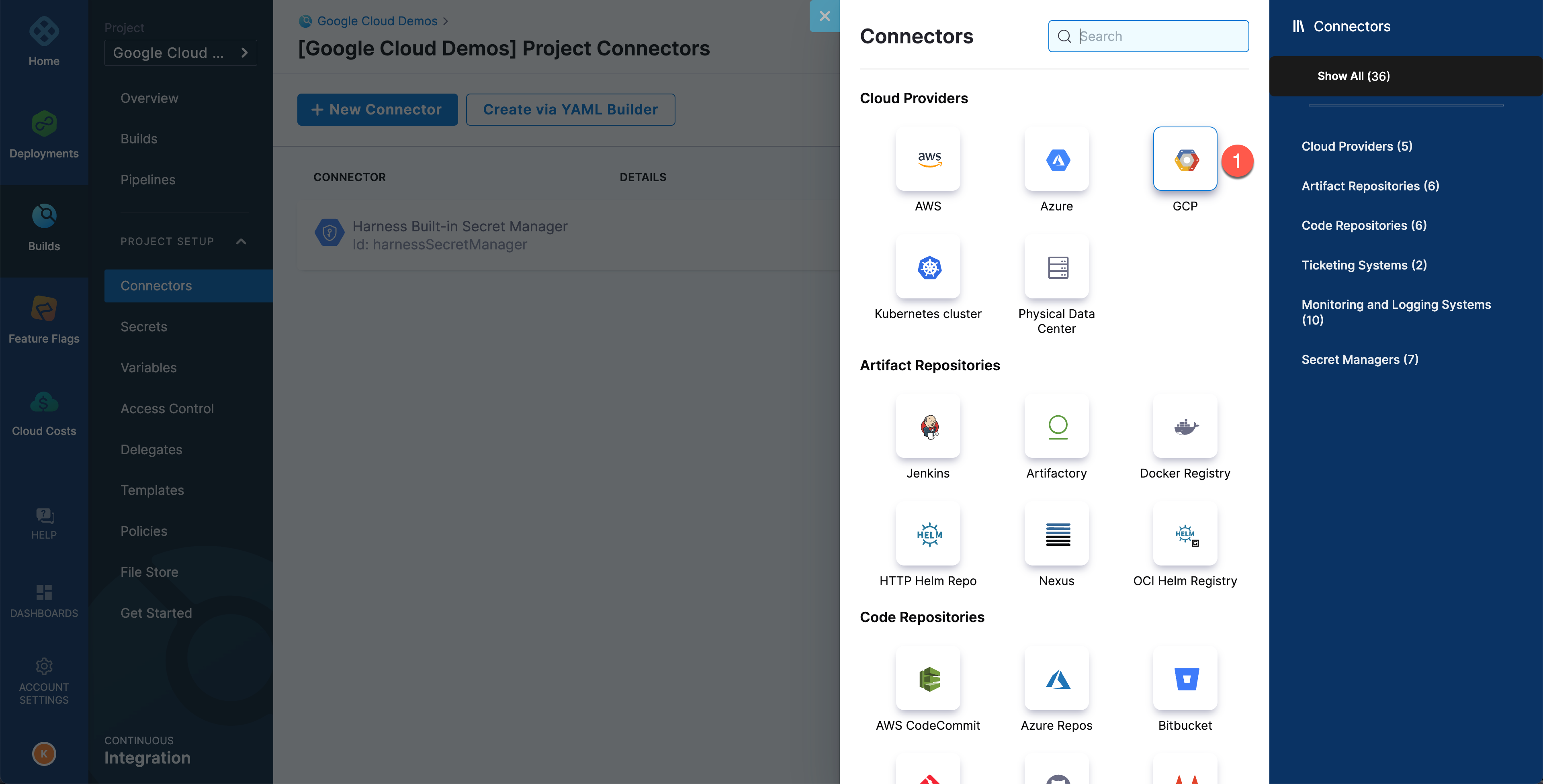

Select New Connector and then select GCP.

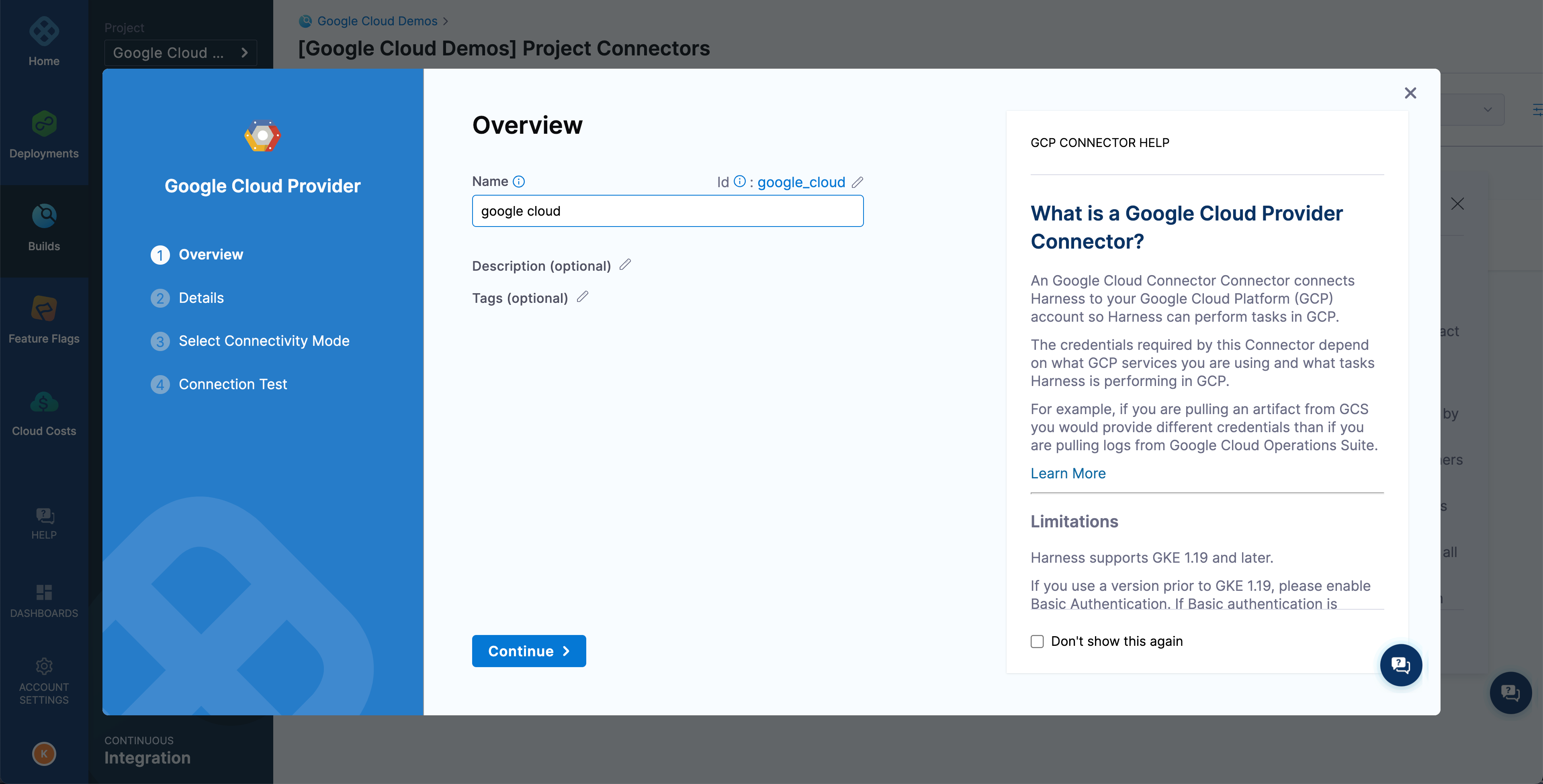

Follow the prompts in the new connector wizard. On the Overview page, input google cloud as the connector Name. Then select Continue to configure the credentials.

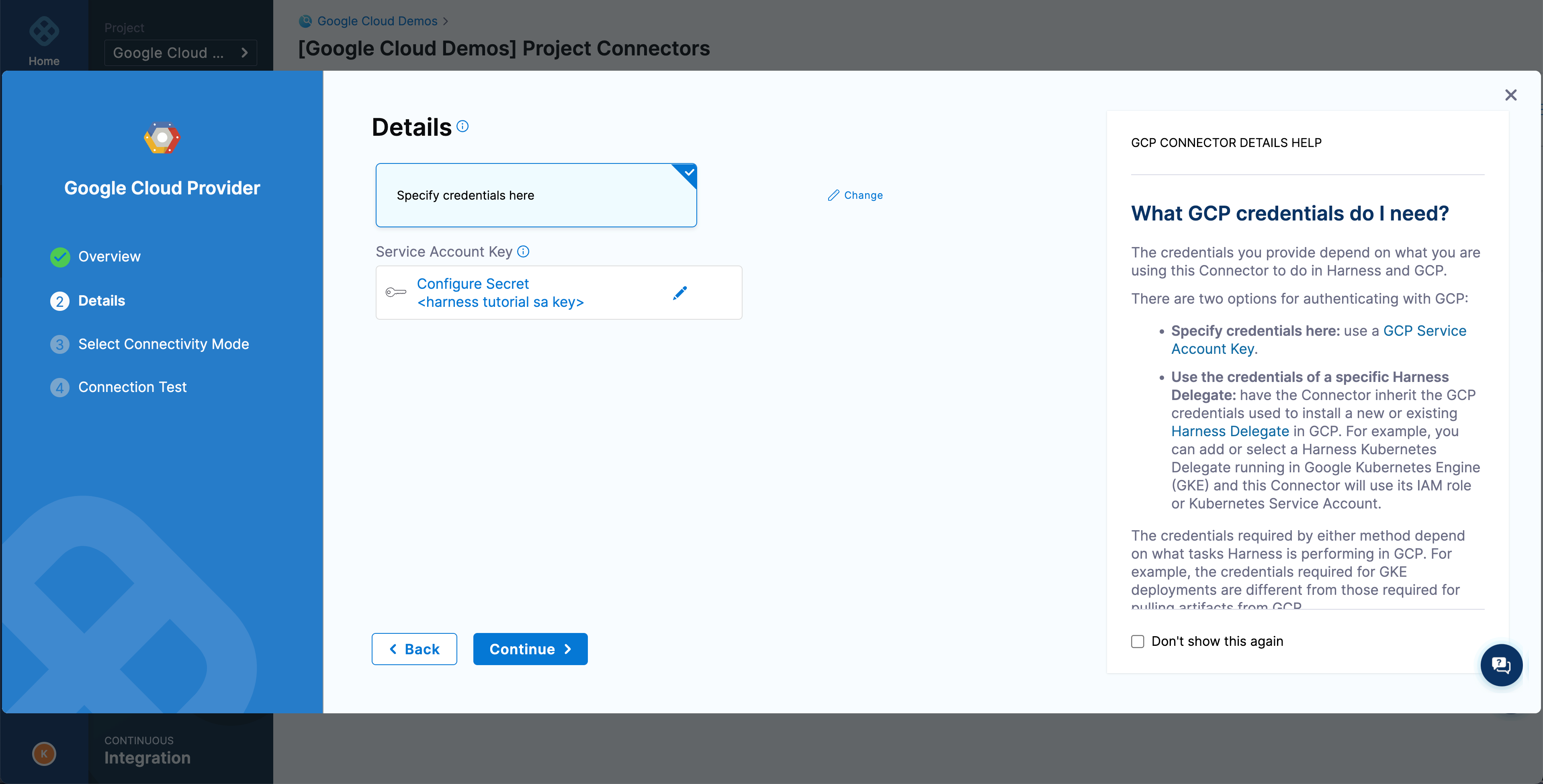

On the Details page, select Specify credentials here, choose the harness tutorial sa key secret that you created earlier, and select Continue.

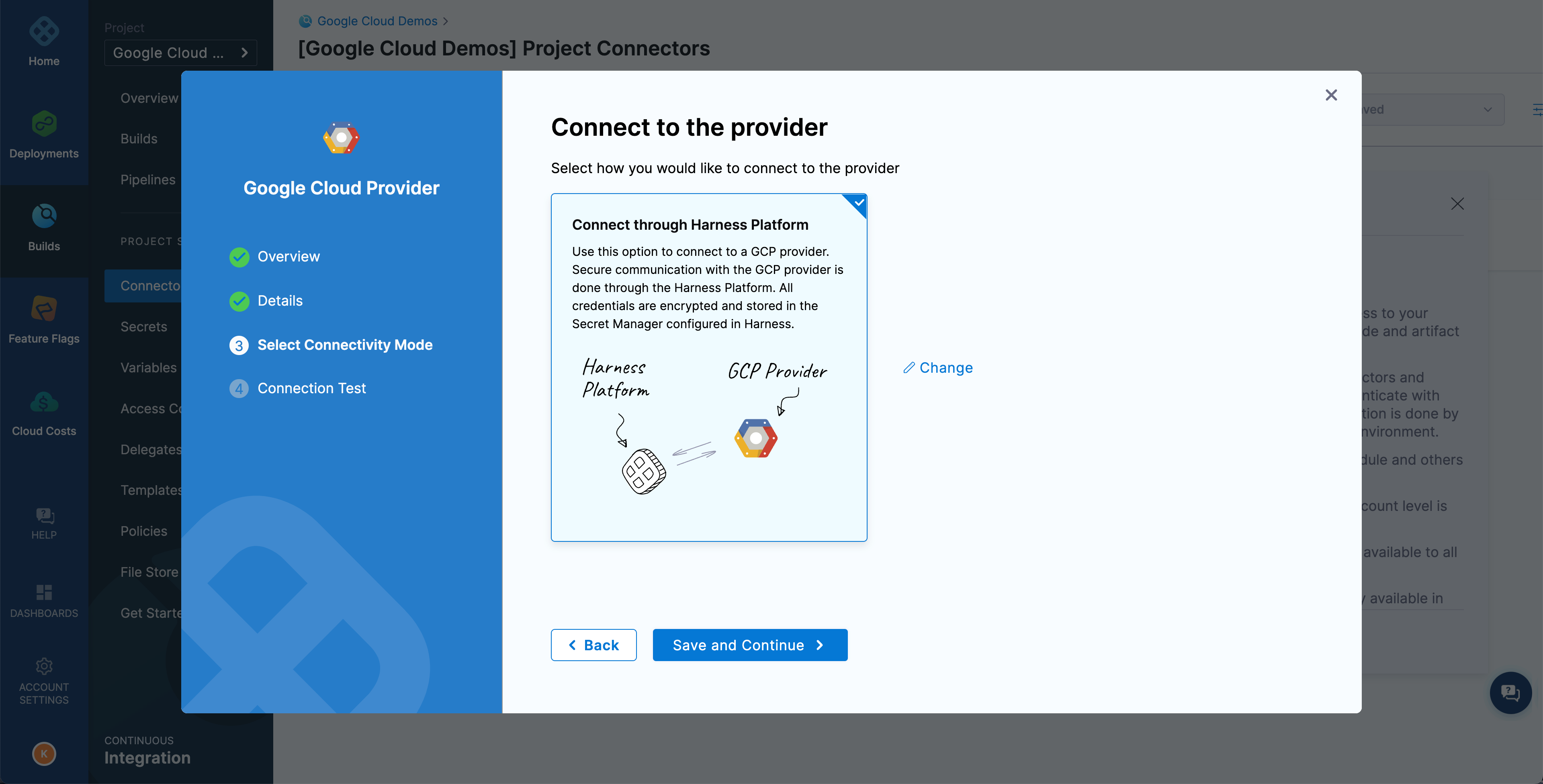

Select Connect through Harness Platform.

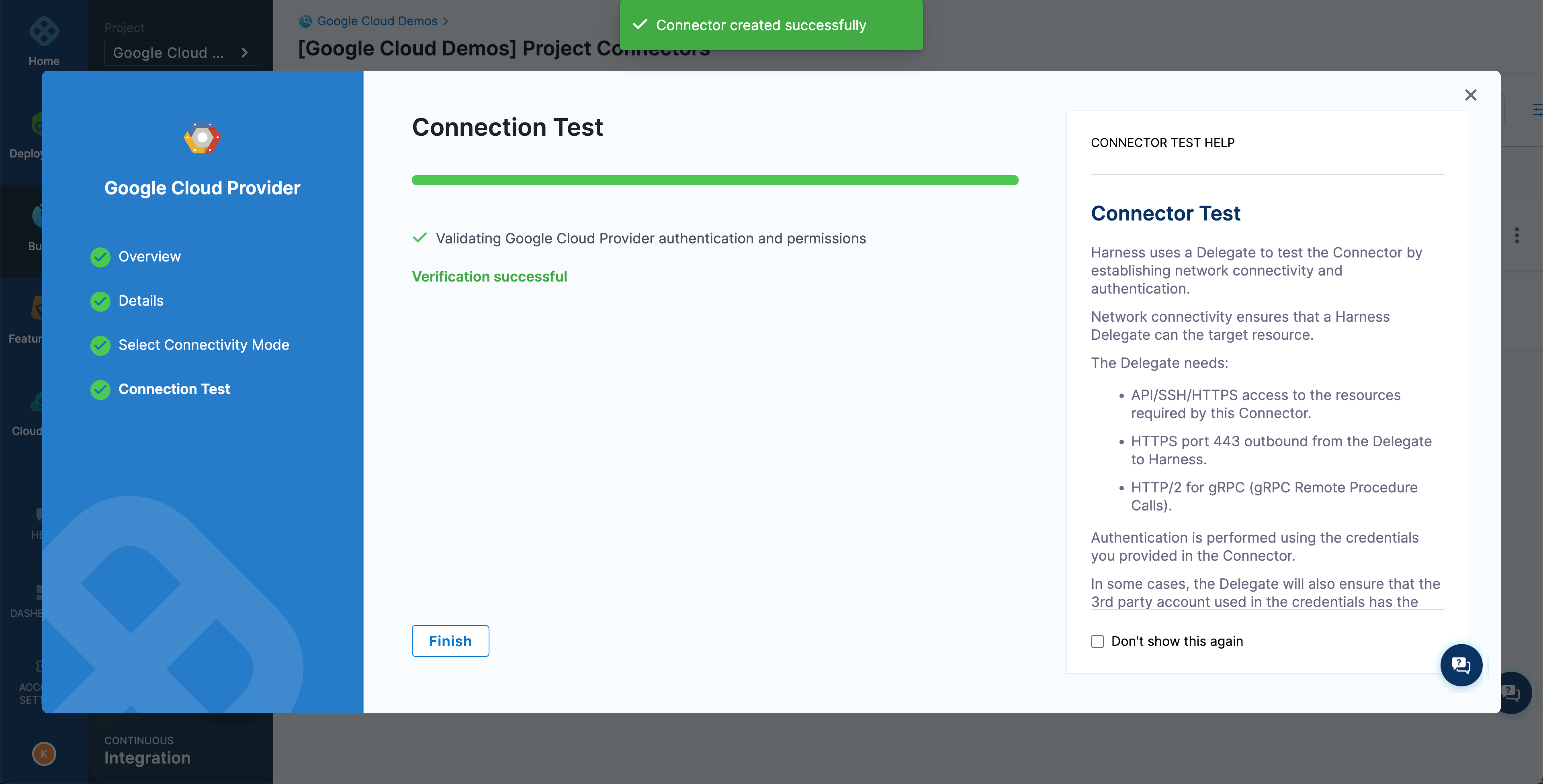

Select Save and Continue to run the connection test.

If the connection is successful, select Finish.

Configure pipeline steps

Go back to Pipelines and select the Build httpbin-get pipeline that you created earlier.

Select the Build stage.

Delete the Echo Welcome Message step by selecting the x that appears when you hover over the step.

Select Save to save the pipeline.

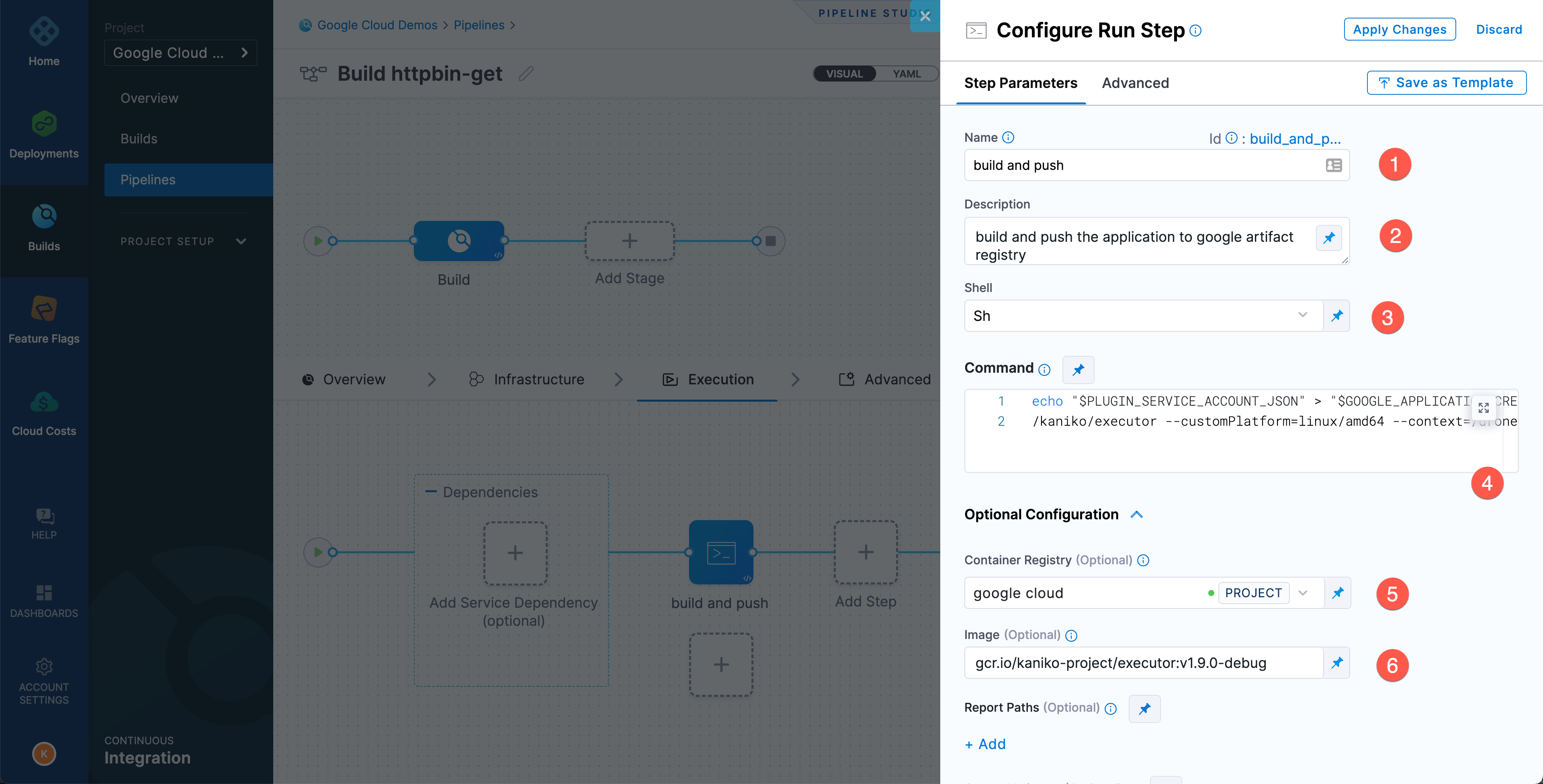

Add a build and push step. Select Add Step. In the Step Library choose Run as the step type, and then populate the fields as follows:

Name: build and push

Description: build the httpbin-get go application

Shell: Sh

Command: Input the following code:

echo "$PLUGIN_SERVICE_ACCOUNT_JSON" > "$GOOGLE_APPLICATION_CREDENTIALS"
/kaniko/executor --customPlatform=linux/amd64 --context=/harness --destination=$PLUGIN_IMAGE

Container Registry: google cloud

Image: gcr.io/kaniko-project/executor:v1.9.0-debug

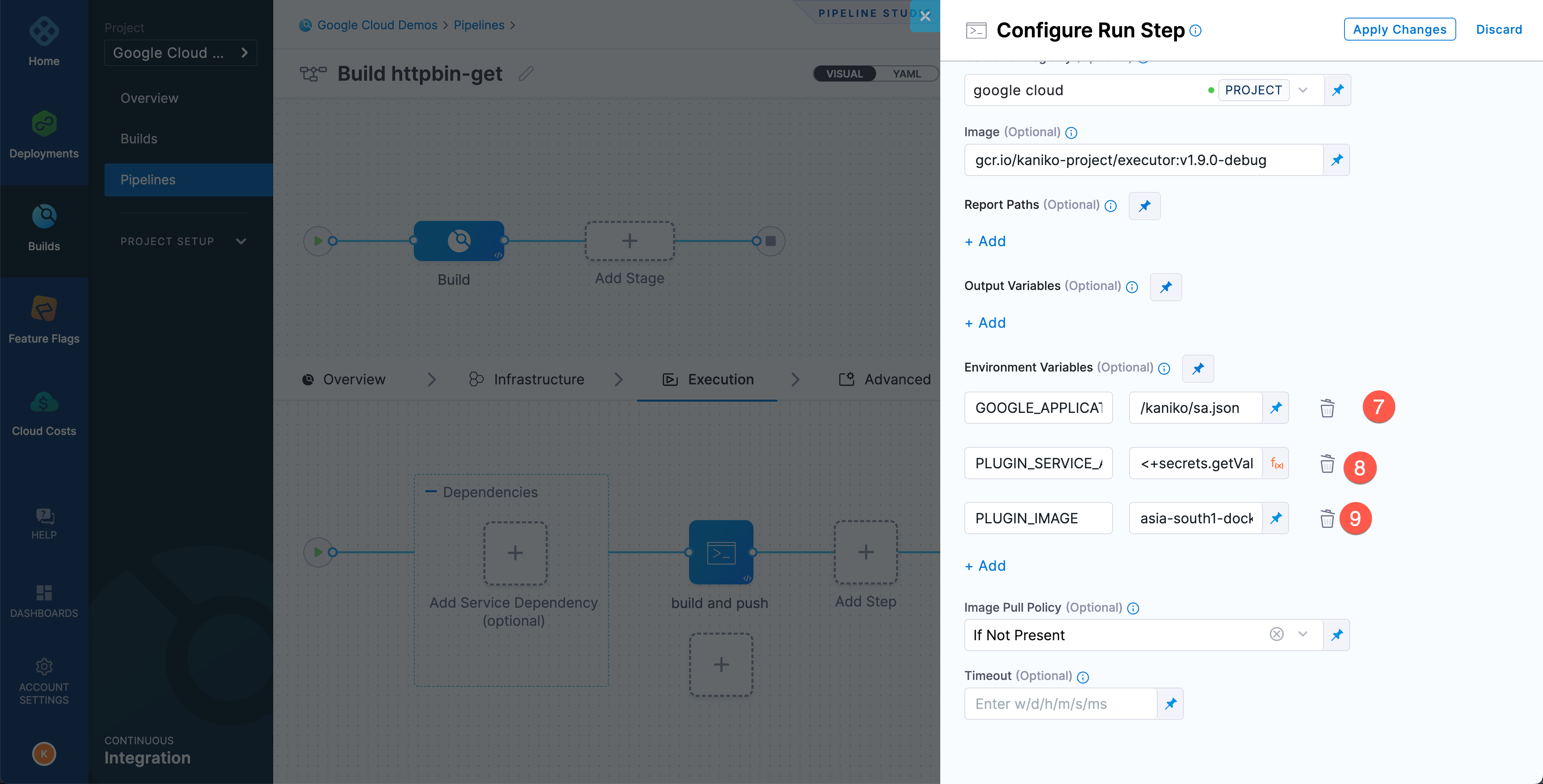

To enable this step to push the container image to Google Artifact Registry, you must provide kaniko with $GOOGLE_APPLICATION_CREDENTIALS. Select Add under Environment Variables and add the following environment variables:

| Variable Name | Value | Description |
| --- | --- | --- |
| PLUGIN_SERVICE_ACCOUNT_JSON | <+secrets.getValue("harness_tutorial_sa_key")> | The Google service account secret key |
| GOOGLE_APPLICATION_CREDENTIALS | /kaniko/sa.json | The JSON file where the service account key content will be written |
| PLUGIN_IMAGE | $PLUGIN_IMAGE | The container image name |

Info: The PLUGIN_SERVICE_ACCOUNT_JSON environment variable value must be the Expression type. The PLUGIN_IMAGE variable's value of $PLUGIN_IMAGE is derived from the $TUTORIAL_HOME/.env file.

Select Apply Changes to save the step, and then select Save to save the pipeline.

Run the pipeline

Try running the pipeline to see if it can build and push the Go application.

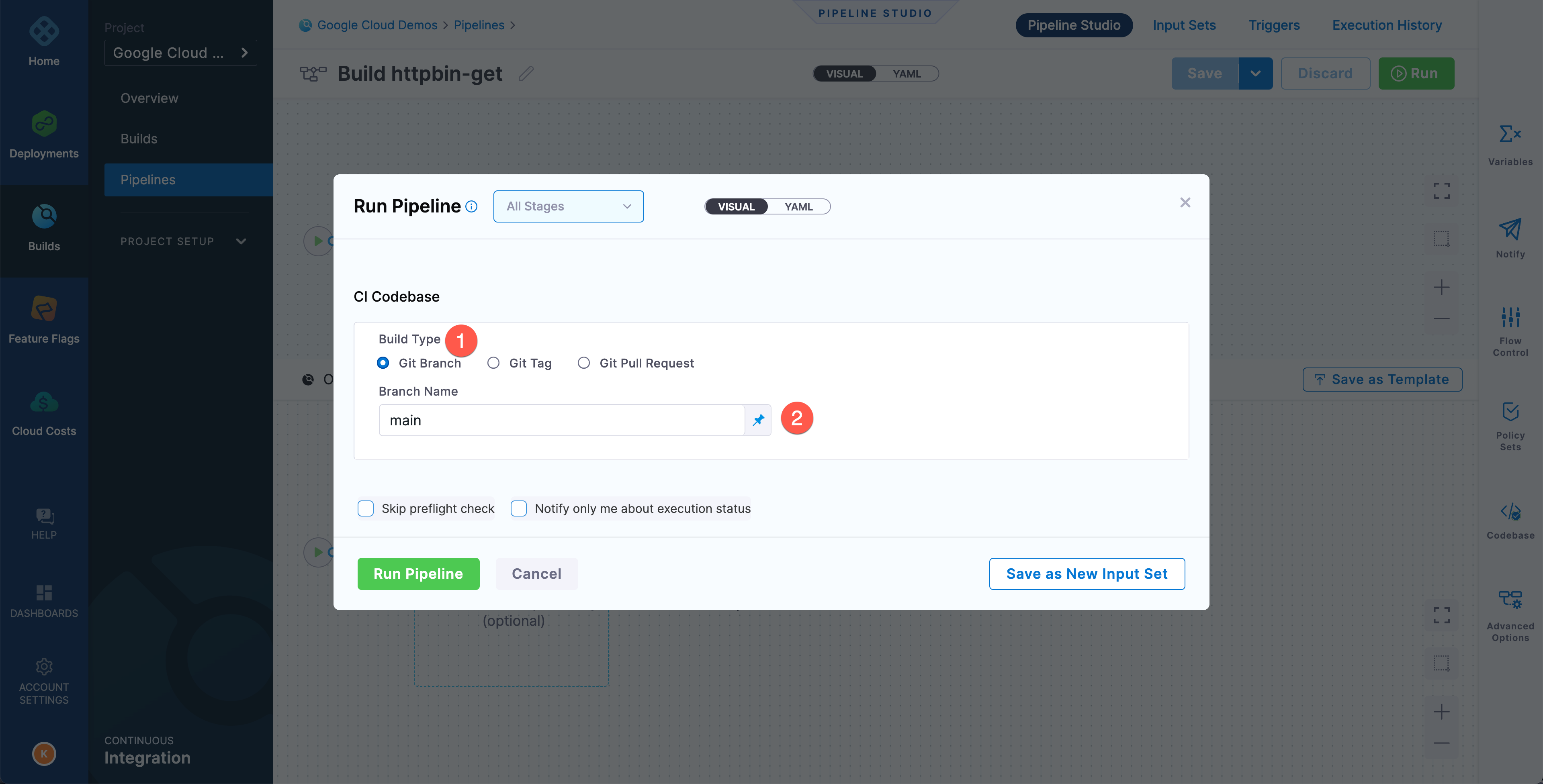

Select Run on the pipeline editor page. On the Run Pipeline screen, make sure Git Branch is selected and the Branch Name is set to main. Select Run Pipeline to start the pipeline run.

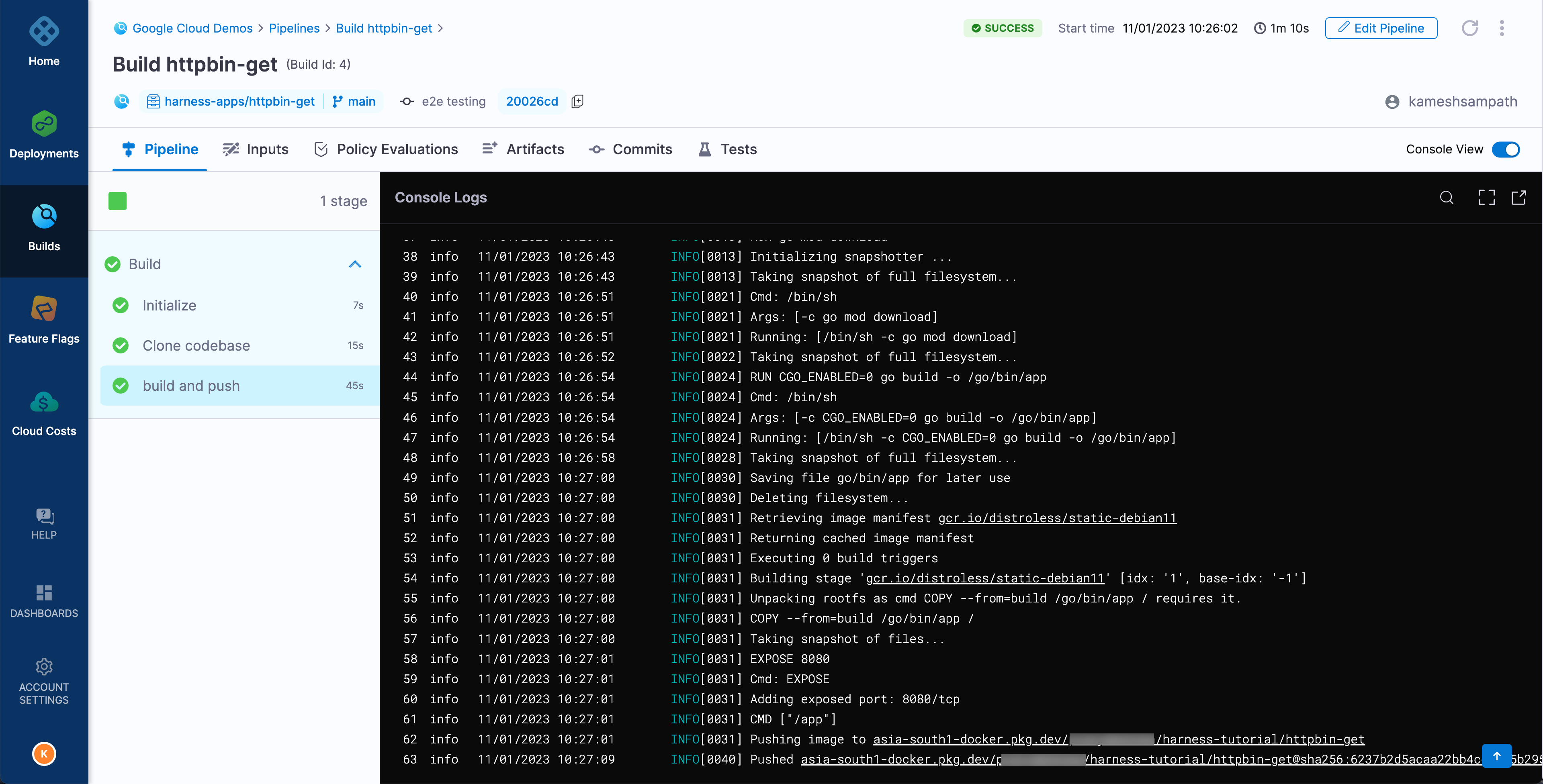

Wait while the pipeline runs to make sure it succeeds.

Tip: Select a step to view the step's logs.

If your run step was successful, add another step to build and push the Go application to the container registry.

Continue your Continuous Integration journey

With CI pipelines you can consistently execute your builds at any time. Try modifying the pipeline trigger to watch for SCM events so that, for example, each commit automatically kicks off the pipeline. All objects you create are available to reuse in your pipelines.

You can also save your build pipelines as part of your source code. Everything that you do in Harness is represented by YAML; you can store it all alongside your project files.

After you build an artifact, you can use the Harness Continuous Delivery (CD) module to deploy your artifact. If you're ready to try CD, check out the CD Tutorials.