Tanzu Application Services deployments overview

This topic shows you how to deploy a publicly available application to your Tanzu Application Service (TAS, formerly PCF) space by using any deployment strategy in Harness.

Currently, this feature is behind the feature flag NG_SVC_ENV_REDESIGN. Contact Harness Support to enable this feature.

Objectives

You'll learn how to:

- Install and launch a Harness delegate in your target cluster.

- Connect Harness with your TAS account.

- Connect Harness with a public image hosted on Artifactory.

- Specify the manifest to use for the application.

- Set up a TAS pipeline in Harness to deploy the application.

Important notes

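- For TAS deployments, Harness supports the following artifact sources: Artifactory, Nexus, Docker Registry, Amazon S3, Google Container Registry (GCR), Amazon Elastic Container Registry (ECR), Azure Container Registry (ACR), Google Artifact Registry (GAR), Google Cloud Storage (GCS), GitHub Package Registry, Azure Artifacts, and Jenkins. You connect Harness to these registries by using your registry account credentials.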

- Before you create a TAS pipeline in Harness, make sure that you have the Continuous Delivery module in your Harness account. For more information, go to create organizations and projects.

- Your Harness delegate profile must have CF CLI v7, autoscaler, and Create-Service-Push plugins added to it.

Connect to a TAS provider

You can connect Harness to a TAS space by adding a TAS connector. Perform the following steps to add a TAS connector.

Open a Harness project and select the Deployments module.

In Project Setup, select Connectors, then select New Connector.

In Cloud Providers, select Tanzu Application Service. The TAS connector settings appear.

Enter a connector name and select Continue.

Enter the TAS Endpoint URL. For example, https://api.system.tas-mycompany.com.

In Authentication, select one of the following options.

- Plaintext - Enter the username and password. For password, you can either create a new secret or use an existing one.

- Encrypted - Enter the username and password. You can create new secrets for your username and password or use existing ones.

Select Continue.

In Connect to the provider, select Connect through a Harness Delegate, and then select Continue. We don't recommend using the Connect through Harness Platform option here because you'll need a delegate later for connecting to your TAS environment. Typically, the Connect through Harness Platform option is a quick way to make connections without having to use delegates.

Expand the sections below to learn more about installing delegates.

Use the delegate installation wizard

- In your Harness project, select Project Setup.

- Select Delegates.

- Select Install a Delegate.

- Follow the delegate installation wizard.

Use this delegate installation wizard video to guide you through the process.

Install a delegate using the terminal

Install Harness Delegate on Kubernetes or Docker

What is Harness Delegate?

Harness Delegate is a lightweight worker process that is installed on your infrastructure and communicates only via outbound HTTP/HTTPS to the Harness Platform. This enables the Harness Platform to leverage the delegate to execute the CI/CD and other tasks on your behalf, without any of your secrets leaving your network.

You can install the Harness Delegate on either Docker or Kubernetes.

Install Harness Delegate

Create a new delegate token

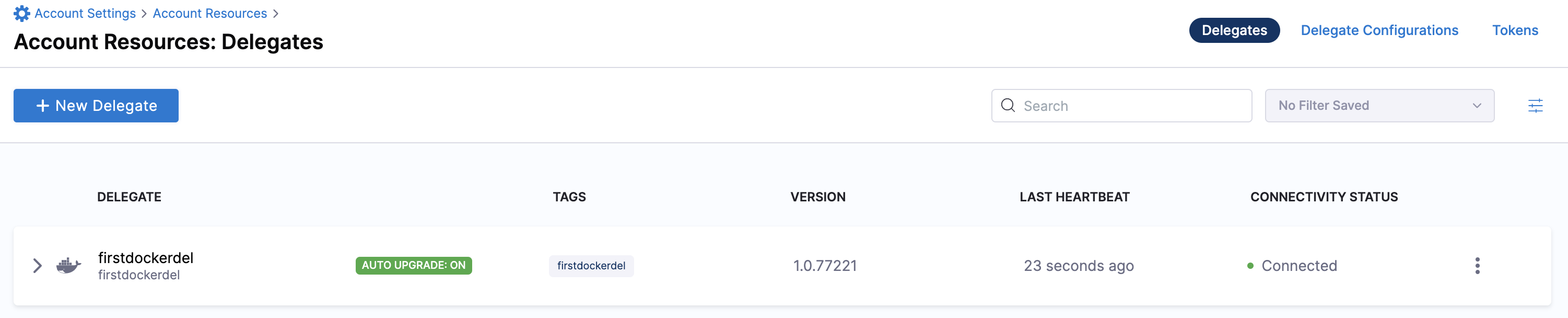

Log in to the Harness Platform and go to Account Settings -> Account Resources -> Delegates. Select the Tokens tab. Select +New Token, and enter a token name, for example firstdeltoken. Select Apply. Harness Platform generates a new token for you. Select Copy to copy and store the token in a temporary file. You will provide this token as an input parameter in the next installation step. The delegate will use this token to authenticate with the Harness Platform.

Get your Harness account ID

Along with the delegate token, you will also need to provide your Harness accountId as an input parameter during delegate installation. This accountId is present in every Harness URL. For example, in the following URL:

https://app.harness.io/ng/#/account/6_vVHzo9Qeu9fXvj-AcQCb/settings/overview

6_vVHzo9Qeu9fXvj-AcQCb is the accountId.
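If you script the installation, the accountId can be pulled out of a copied URL rather than retyped. The following is a minimal sketch; `extract_account_id` is a hypothetical helper name, and it assumes the ID is the path segment that follows `/account/`, as in the example URL above.

```shell
# extract_account_id is a hypothetical helper, not a Harness command.
# It prints the path segment that follows "/account/" in a Harness URL,
# or nothing when the URL has no such segment.
extract_account_id() {
  printf '%s\n' "$1" | sed -n 's#.*/account/\([^/]*\).*#\1#p'
}

# Prints 6_vVHzo9Qeu9fXvj-AcQCb for the example URL from this topic.
extract_account_id "https://app.harness.io/ng/#/account/6_vVHzo9Qeu9fXvj-AcQCb/settings/overview"
```

The printed value is what you would paste in place of PUT_YOUR_HARNESS_ACCOUNTID_HERE in the installation commands.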

Now you are ready to install the delegate on either Docker or Kubernetes.

- Kubernetes

- Docker

Prerequisite

Ensure that you have access to a Kubernetes cluster. For the purposes of this tutorial, we will use minikube.

Install minikube

- On Windows:

choco install minikube

- On macOS:

brew install minikube

Now start minikube with the following config.

minikube start --memory 4g --cpus 4

Validate that you have kubectl access to your cluster.

kubectl get pods -A

Now that you have access to a Kubernetes cluster, you can install the delegate using any of the options below.

- Helm Chart

- Terraform Helm Provider

- Kubernetes Manifest

Install the Helm chart

As a prerequisite, you must have Helm v3 installed on the machine from which you connect to your Kubernetes cluster.

You can now install the delegate using the delegate Helm chart. First, add the harness-delegate Helm chart repo to your local Helm registry.

helm repo add harness-delegate https://app.harness.io/storage/harness-download/delegate-helm-chart/

helm repo update

helm search repo harness-delegate

We will use the harness-delegate/harness-delegate-ng chart in this tutorial.

NAME CHART VERSION APP VERSION DESCRIPTION

harness-delegate/harness-delegate-ng 1.0.8 1.16.0 A Helm chart for deploying harness-delegate

Now we are ready to install the delegate. The following example installs/upgrades firstk8sdel delegate (which is a Kubernetes workload) in the harness-delegate-ng namespace using the harness-delegate/harness-delegate-ng Helm chart.

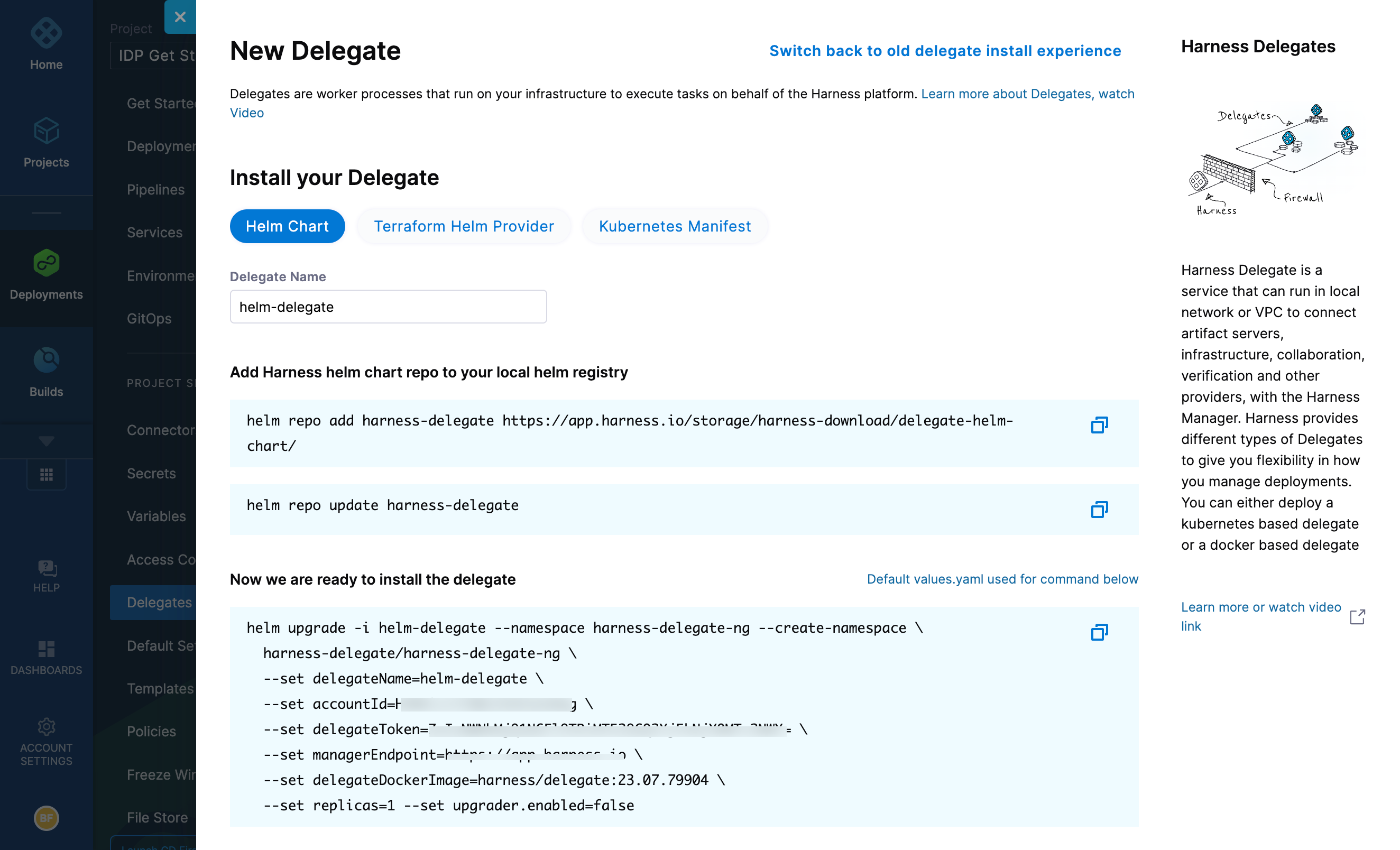

To install the delegate, do the following:

In Harness, select Deployments, then select your project.

Select Delegates under Project Setup.

Select Install a Delegate to open the New Delegate dialog.

Select Helm Chart under Install your Delegate.

Copy the helm upgrade command.

Run the command.

The command uses the default values.yaml located in the delegate-helm-chart GitHub repo. If you want to change one or more values persistently rather than on the command line, download the values.yaml file and update it as needed. You can then use the updated values.yaml file as shown below.

helm upgrade -i firstk8sdel --namespace harness-delegate-ng --create-namespace \

harness-delegate/harness-delegate-ng \

-f values.yaml \

--set delegateName=firstk8sdel \

--set accountId=PUT_YOUR_HARNESS_ACCOUNTID_HERE \

--set delegateToken=PUT_YOUR_DELEGATE_TOKEN_HERE \

--set managerEndpoint=PUT_YOUR_MANAGER_HOST_AND_PORT_HERE \

--set delegateDockerImage=harness/delegate:23.02.78306 \

--set replicas=1 --set upgrader.enabled=false

Create main.tf file

Harness uses a Terraform module for the Kubernetes delegate. This module uses the standard Terraform Helm provider to install the Helm chart onto a Kubernetes cluster whose config by default is stored in the same machine at the ~/.kube/config path. Copy the following into a main.tf file stored on a machine from which you want to install your delegate.

module "delegate" {

source = "harness/harness-delegate/kubernetes"

version = "0.1.5"

account_id = "PUT_YOUR_HARNESS_ACCOUNTID_HERE"

delegate_token = "PUT_YOUR_DELEGATE_TOKEN_HERE"

delegate_name = "firstk8sdel"

namespace = "harness-delegate-ng"

manager_endpoint = "PUT_YOUR_MANAGER_HOST_AND_PORT_HERE"

delegate_image = "harness/delegate:23.02.78306"

replicas = 1

upgrader_enabled = false

# Additional optional values to pass to the helm chart

values = yamlencode({

javaOpts: "-Xms64M"

})

}

provider "helm" {

kubernetes {

config_path = "~/.kube/config"

}

}

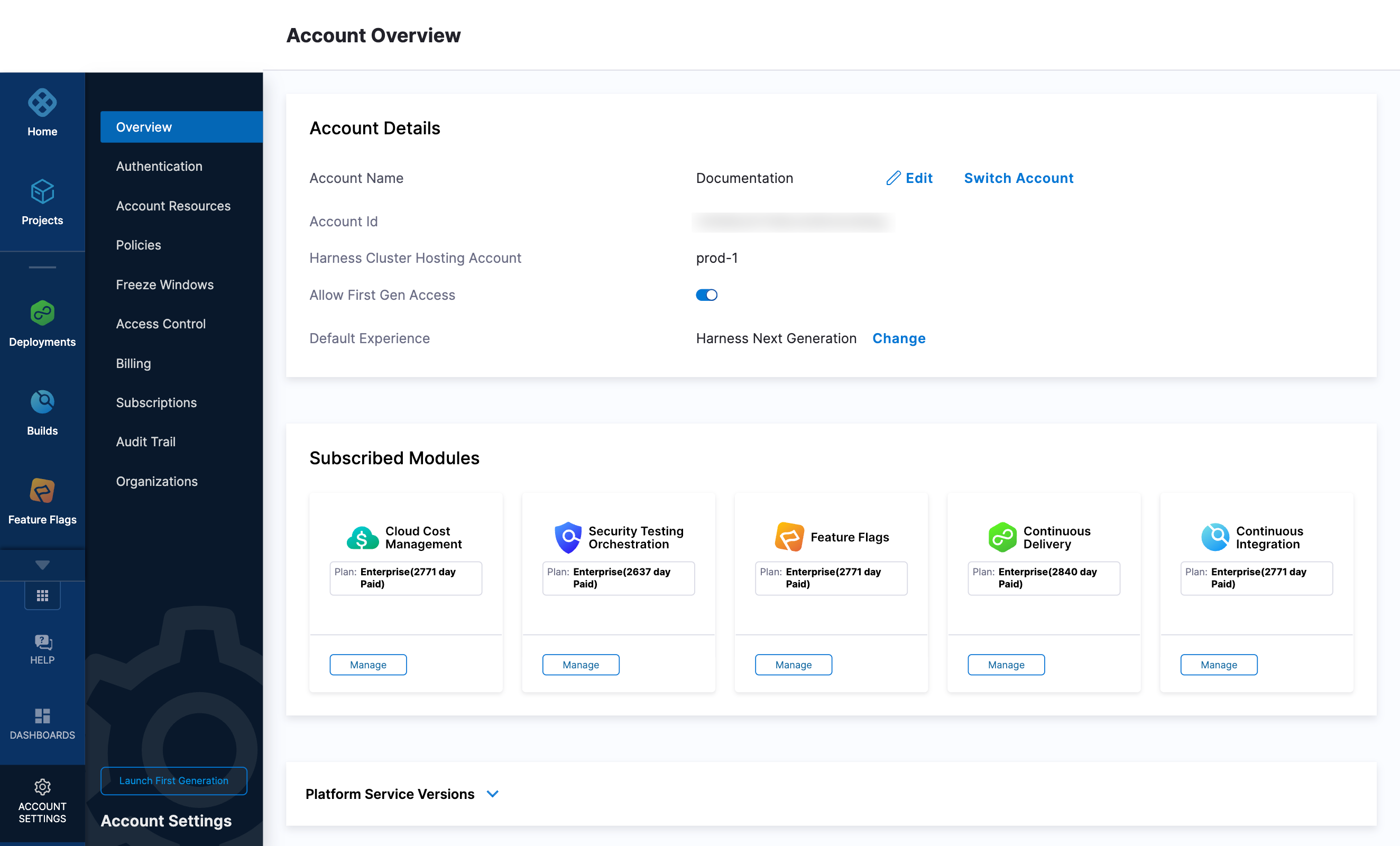

Now replace the variables in the file with your Harness account ID and delegate token values. Replace PUT_YOUR_MANAGER_HOST_AND_PORT_HERE with the Harness Manager Endpoint noted below. For Harness SaaS accounts, you can find your Harness Cluster Location on the Account Overview page under the Account Settings section of the left navigation. For Harness CDCE, the endpoint varies based on the Docker vs. Helm installation options.

| Harness Cluster Location | Harness Manager Endpoint on Harness Cluster |

|---|---|

| SaaS prod-1 | https://app.harness.io |

| SaaS prod-2 | https://app.harness.io/gratis |

| SaaS prod-3 | https://app3.harness.io |

| CDCE Docker | http://<HARNESS_HOST> if Docker Delegate is remote to CDCE or http://host.docker.internal if Docker Delegate is on same host as CDCE |

| CDCE Helm | http://<HARNESS_HOST>:7143 where HARNESS_HOST is the public IP of the Kubernetes node where CDCE Helm is running |
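If you install delegates for several accounts, the SaaS rows of the table can be captured in a small lookup. This is only a sketch: `manager_endpoint` is a hypothetical helper name, the short keys `prod-1`, `prod-2`, and `prod-3` are an assumed shorthand for the cluster locations, and the CDCE rows are left out because they depend on your own host.

```shell
# manager_endpoint is a hypothetical helper, not part of any Harness tooling.
# It maps a SaaS cluster location to the manager endpoint listed in the table.
manager_endpoint() {
  case "$1" in
    prod-1) echo "https://app.harness.io" ;;
    prod-2) echo "https://app.harness.io/gratis" ;;
    prod-3) echo "https://app3.harness.io" ;;
    *)
      echo "unknown cluster location: $1" >&2
      return 1
      ;;
  esac
}

# Example: resolve the endpoint for an account hosted on prod-2.
MANAGER_ENDPOINT="$(manager_endpoint prod-2)"
echo "$MANAGER_ENDPOINT"
```

The resulting value is what replaces PUT_YOUR_MANAGER_HOST_AND_PORT_HERE.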

Run Terraform init, plan, and apply

Initialize Terraform. This downloads the Terraform Helm provider to your machine.

terraform init

Run the following step to view the changes Terraform is going to make on your behalf.

terraform plan

Finally, run this step to make Terraform install the Kubernetes delegate using the Helm provider.

terraform apply

When prompted by Terraform if you want to continue with the apply step, type yes, and then you will see output similar to the following.

helm_release.delegate: Creating...

helm_release.delegate: Still creating... [10s elapsed]

helm_release.delegate: Still creating... [20s elapsed]

helm_release.delegate: Still creating... [30s elapsed]

helm_release.delegate: Still creating... [40s elapsed]

helm_release.delegate: Still creating... [50s elapsed]

helm_release.delegate: Still creating... [1m0s elapsed]

helm_release.delegate: Creation complete after 1m0s [id=firstk8sdel]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Download a Kubernetes manifest template

curl -LO https://raw.githubusercontent.com/harness/delegate-kubernetes-manifest/main/harness-delegate.yaml

Replace variables in the template

Open the harness-delegate.yaml file in a text editor and replace PUT_YOUR_DELEGATE_NAME_HERE, PUT_YOUR_HARNESS_ACCOUNTID_HERE, and PUT_YOUR_DELEGATE_TOKEN_HERE with your delegate name (for example, firstk8sdel), Harness accountId, and delegate token values, respectively.

Replace the PUT_YOUR_MANAGER_HOST_AND_PORT_HERE variable with the Harness Manager Endpoint noted below. For Harness SaaS accounts, you can find your Harness Cluster Location on the Account Overview page under the Account Settings section of the left navigation. For Harness CDCE, the endpoint varies based on the Docker vs. Helm installation options.

| Harness Cluster Location | Harness Manager Endpoint on Harness Cluster |

|---|---|

| SaaS prod-1 | https://app.harness.io |

| SaaS prod-2 | https://app.harness.io/gratis |

| SaaS prod-3 | https://app3.harness.io |

| CDCE Docker | http://<HARNESS_HOST> if Docker Delegate is remote to CDCE or http://host.docker.internal if Docker Delegate is on same host as CDCE |

| CDCE Helm | http://<HARNESS_HOST>:7143 where HARNESS_HOST is the public IP of the Kubernetes node where CDCE Helm is running |

Apply the Kubernetes manifest

kubectl apply -f harness-delegate.yaml

Prerequisite

Ensure that you have the Docker runtime installed on your host. If not, use one of the following options to install Docker:

Install on Docker

Now you can install the delegate using the following command.

docker run --cpus=1 --memory=2g \

-e DELEGATE_NAME=docker-delegate \

-e NEXT_GEN="true" \

-e DELEGATE_TYPE="DOCKER" \

-e ACCOUNT_ID=PUT_YOUR_HARNESS_ACCOUNTID_HERE \

-e DELEGATE_TOKEN=PUT_YOUR_DELEGATE_TOKEN_HERE \

-e LOG_STREAMING_SERVICE_URL=PUT_YOUR_MANAGER_HOST_AND_PORT_HERE/log-service/ \

-e MANAGER_HOST_AND_PORT=PUT_YOUR_MANAGER_HOST_AND_PORT_HERE \

harness/delegate:23.03.78904

Replace the PUT_YOUR_MANAGER_HOST_AND_PORT_HERE variable with the Harness Manager Endpoint noted below. For Harness SaaS accounts, to find your Harness cluster location, select Account Settings, and then select Overview. In Account Overview, look in Account Settings. It is listed next to Harness Cluster Hosting Account.

For more information, go to View account info and subscribe to downtime alerts.

For Harness CDCE, the endpoint varies based on the Docker vs. Helm installation options.

| Harness Cluster Location | Harness Manager Endpoint on Harness Cluster |

|---|---|

| SaaS prod-1 | https://app.harness.io |

| SaaS prod-2 | https://app.harness.io/gratis |

| SaaS prod-3 | https://app3.harness.io |

| CDCE Docker | http://<HARNESS_HOST> if Docker Delegate is remote to CDCE or http://host.docker.internal if Docker Delegate is on same host as CDCE |

| CDCE Helm | http://<HARNESS_HOST>:7143 where HARNESS_HOST is the public IP of the Kubernetes node where CDCE Helm is running |

If you are using a local runner CI build infrastructure, modify the delegate install command as explained in Use local runner build infrastructure.

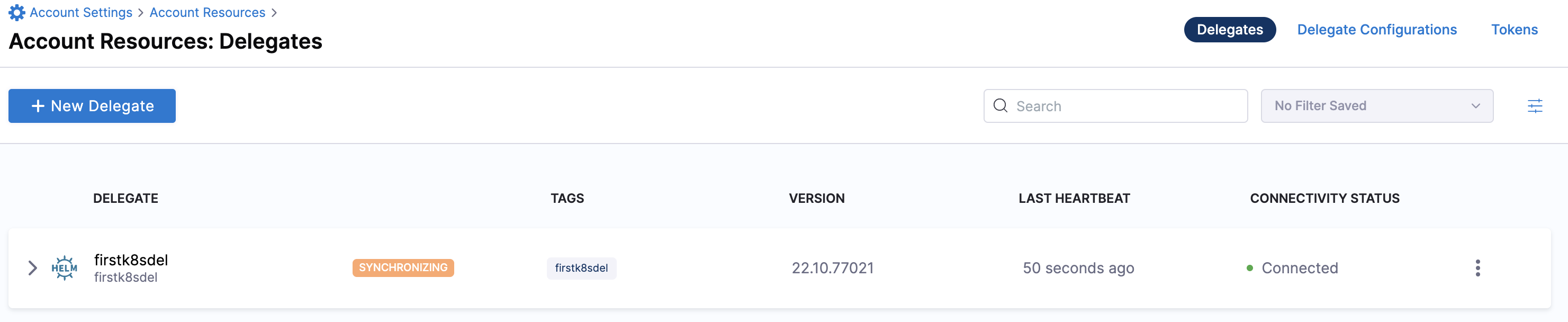

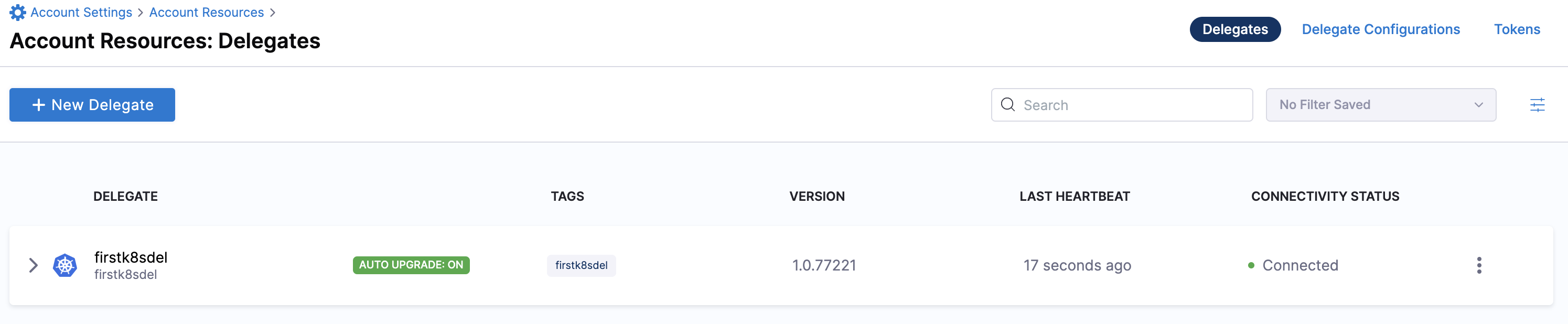

Verify delegate connectivity

Select Continue. After the health checks pass, your delegate is available for you to use. Select Done and verify your new delegate is listed.

Helm chart & Terraform Helm provider

Kubernetes manifest

Docker

You can now route communication to external systems in Harness connectors and pipelines by selecting this delegate via a delegate selector.

Delegate selectors do not override service infrastructure connectors. Delegate selectors only determine the delegate that executes the operations of your pipeline.

Troubleshooting

The delegate installer provides troubleshooting information for each installation process. If the delegate cannot be verified, select Troubleshoot for steps you can use to resolve the problem. This section includes the same information.

Harness asks for feedback after the troubleshooting steps. You are asked, Did the delegate come up?

If the steps did not resolve the problem, select No, and use the form to describe the issue. You'll also find links to Harness Support and to Delegate docs.

- Helm Chart

- Terraform Helm Provider

- Kubernetes Manifest

- Docker

Use the following steps to troubleshoot your installation of the delegate using Helm.

Verify that Helm is correctly installed:

Check for Helm:

helm

And then check for the installed version of Helm:

helm version

If you receive the message Error: rendered manifests contain a resource that already exists..., delete the existing namespace, and retry the Helm upgrade command to deploy the delegate.

For further instructions on troubleshooting your Helm installation, go to Helm troubleshooting guide.

Check the status of the delegate on your cluster:

kubectl describe pods -n <namespace>

If the pod did not start, check the delegate logs:

kubectl logs -f <harnessDelegateName> -n <namespace>

If the state of the delegate pod is CrashLoopBackOff, check your allocation of compute resources (CPU and memory) to the cluster. A state of CrashLoopBackOff often indicates insufficient Kubernetes cluster resources.

If the delegate pod is not healthy, use the kubectl describe command to get more information:

kubectl describe pod <pod_name> -n <namespace>

Use the following steps to troubleshoot your installation of the delegate using Terraform.

Verify that Terraform is correctly installed:

terraform -version

For further instructions on troubleshooting your installation of Terraform, go to the Terraform troubleshooting guide.

Check the status of the delegate on your cluster:

kubectl describe pods -n <namespace>

If the pod did not start, check the delegate logs:

kubectl logs -f <harnessDelegateName> -n <namespace>

If the state of the delegate pod is CrashLoopBackOff, check your allocation of compute resources (CPU and memory) to the cluster. A state of CrashLoopBackOff often indicates insufficient Kubernetes cluster resources.

If the delegate pod is not healthy, use the kubectl describe command to get more information:

kubectl describe pod <pod_name> -n <namespace>

Use the following steps to troubleshoot your installation of the delegate using Kubernetes.

Check the status of the delegate on your cluster:

kubectl describe pods -n <namespace>

If the pod did not start, check the delegate logs:

kubectl logs -f <harnessDelegateName> -n <namespace>

If the state of the delegate pod is CrashLoopBackOff, check your allocation of compute resources (CPU and memory) to the cluster. A state of CrashLoopBackOff often indicates insufficient Kubernetes cluster resources.

If the delegate pod is not healthy, use the kubectl describe command to get more information:

kubectl describe pod <pod_name> -n <namespace>

Use the following steps to troubleshoot your installation of the delegate using Docker:

Check the status of the delegate container on your host:

docker container ls -a

If the container is not running, check the delegate logs:

docker container logs <delegatename> -f

Restart the delegate container. To stop the container:

docker container stop <delegatename>

To start the container:

docker container start <delegatename>

Make sure the container has sufficient CPU and memory resources. If not, remove the older containers:

docker container rm [container id]

To learn more, watch the Delegate overview video.

- In Set Up Delegates, select the Connect using Delegates with the following Tags option and enter your delegate name.

- Select Save and Continue.

- Once the test connection succeeds, select Finish. The connector now appears in the Connectors list.

Install Cloud Foundry Command Line Interface (CF CLI) on your Harness delegate

After the delegate pods are created, you must edit your Harness delegate YAML to install CF CLI v7, autoscaler, and Create-Service-Push plugins.

Open the delegate.yaml in a text editor.

Locate the environment variable INIT_SCRIPT in the Deployment object.

- name: INIT_SCRIPT

  value: ""

Replace value: "" with the following script to install CF CLI, autoscaler, and Create-Service-Push plugins.

Info: Harness delegate uses Red Hat based distributions like Red Hat Enterprise Linux (RHEL) or Red Hat Universal Base Image (UBI). Hence, we recommend that you use microdnf commands to install CF CLI on your delegate. If you are using a package manager in Debian based distributions like Ubuntu, use apt-get commands to install CF CLI on your delegate.

Info: Make sure to use your API token for pivnet login in the following script.

- microdnf

- apt-get

- name: INIT_SCRIPT

value: |

# update package manager, install necessary packages, and install CF CLI v7

microdnf update

microdnf install yum

microdnf install --nodocs unzip yum-utils

microdnf install -y yum-utils

echo y | yum install wget

wget -O /etc/yum.repos.d/cloudfoundry-cli.repo https://packages.cloudfoundry.org/fedora/cloudfoundry-cli.repo

echo y | yum install cf7-cli -y

# autoscaler plugin

# download and install pivnet

wget -O pivnet https://github.com/pivotal-cf/pivnet-cli/releases/download/v0.0.55/pivnet-linux-amd64-0.0.55 && chmod +x pivnet && mv pivnet /usr/local/bin;

pivnet login --api-token=<replace with api token>

# download and install autoscaler plugin by pivnet

pivnet download-product-files --product-slug='pcf-app-autoscaler' --release-version='2.0.295' --product-file-id=912441

cf install-plugin -f autoscaler-for-pcf-cliplugin-linux64-binary-2.0.295

# install Create-Service-Push plugin from community

cf install-plugin -r CF-Community "Create-Service-Push"

# verify cf version

cf --version

# verify plugins

cf plugins

- name: INIT_SCRIPT

value: |

# update package manager, install necessary packages, and install CF CLI v7

apt-get update

apt-get install -y wget

wget -q -O - https://packages.cloudfoundry.org/debian/cli.cloudfoundry.org.key | apt-key add -

echo "deb https://packages.cloudfoundry.org/debian stable main" | tee /etc/apt/sources.list.d/cloudfoundry-cli.list

apt-get update

apt-get install -y cf7-cli

# autoscaler plugin

# download and install pivnet

wget -O pivnet https://github.com/pivotal-cf/pivnet-cli/releases/download/v0.0.55/pivnet-linux-amd64-0.0.55 && chmod +x pivnet && mv pivnet /usr/local/bin;

pivnet login --api-token=<replace with api token>

# download and install autoscaler plugin by pivnet

pivnet download-product-files --product-slug='pcf-app-autoscaler' --release-version='2.0.295' --product-file-id=912441

cf install-plugin -f autoscaler-for-pcf-cliplugin-linux64-binary-2.0.295

# install Create-Service-Push plugin from community

cf install-plugin -r CF-Community "Create-Service-Push"

# verify cf version

cf --version

# verify plugins

cf plugins

Apply the updated delegate YAML and check the delegate logs.

The output for cf --version is cf version 7.2.0+be4a5ce2b.2020-12-10.

Here is the output for cf plugins.

App Autoscaler 2.0.295 autoscaling-apps Displays apps bound to the autoscaler

App Autoscaler 2.0.295 autoscaling-events Displays previous autoscaling events for the app

App Autoscaler 2.0.295 autoscaling-rules Displays rules for an autoscaled app

App Autoscaler 2.0.295 autoscaling-slcs Displays scheduled limit changes for the app

App Autoscaler 2.0.295 configure-autoscaling Configures autoscaling using a manifest file

App Autoscaler 2.0.295 create-autoscaling-rule Create rule for an autoscaled app

App Autoscaler 2.0.295 create-autoscaling-slc Create scheduled instance limit change for an autoscaled app

App Autoscaler 2.0.295 delete-autoscaling-rule Delete rule for an autoscaled app

App Autoscaler 2.0.295 delete-autoscaling-rules Delete all rules for an autoscaled app

App Autoscaler 2.0.295 delete-autoscaling-slc Delete scheduled limit change for an autoscaled app

App Autoscaler 2.0.295 disable-autoscaling Disables autoscaling for the app

App Autoscaler 2.0.295 enable-autoscaling Enables autoscaling for the app

App Autoscaler 2.0.295 update-autoscaling-limits Updates autoscaling instance limits for the app

Create-Service-Push 1.3.2 create-service-push, cspush Works in the same manner as cf push, except that it will create services defined in a services-manifest.yml file first before performing a cf push.

Note: The CF Command script does not require cf login. Harness logs in using the credentials in the TAS cloud provider set up in the infrastructure definition for the workflow executing the CF Command.
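The plugin check can also be automated instead of read by eye. The sketch below assumes the plugin names appear in the cf plugins output exactly as shown above; `check_required_plugins` is a hypothetical helper name, and the sample text is a shortened copy of that output so the example runs without a CF CLI installed.

```shell
# check_required_plugins is a hypothetical helper, not a cf or Harness command.
# It reads `cf plugins` output on stdin and succeeds only when both plugins
# required by this topic are listed.
check_required_plugins() (
  output="$(cat)"
  missing=0
  for plugin in "App Autoscaler" "Create-Service-Push"; do
    if ! printf '%s\n' "$output" | grep -qF -- "$plugin"; then
      echo "missing plugin: $plugin" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ]
)

# Shortened sample of the output shown in this topic.
sample='App Autoscaler 2.0.295 autoscaling-apps Displays apps bound to the autoscaler
Create-Service-Push 1.3.2 create-service-push, cspush Works in the same manner as cf push'

printf '%s\n' "$sample" | check_required_plugins && echo "all required plugins found"
```

On a delegate, the equivalent call would be `cf plugins | check_required_plugins`.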

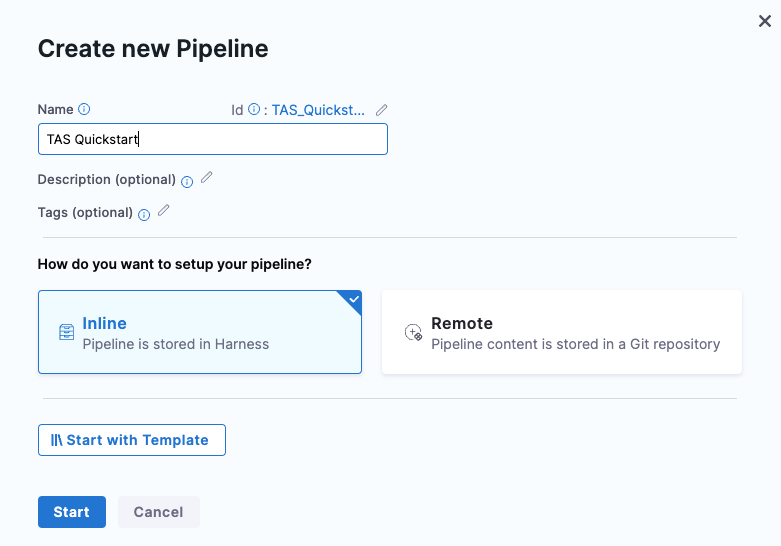

Create the deploy stage

Pipelines are collections of stages. For this tutorial, we'll create a new pipeline and add a single stage.

In your Harness project, select Pipelines, select Deployments, then select Create a Pipeline.

Your pipeline appears.

Enter the name TAS Quickstart and click Start.

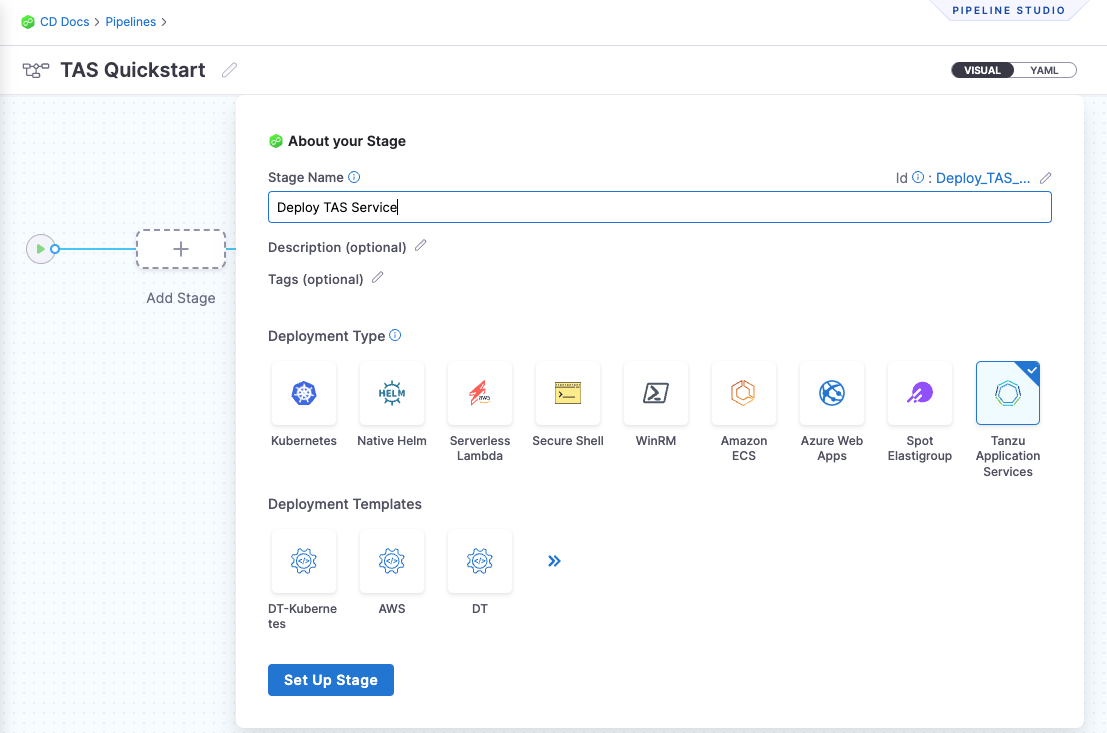

Click Add Stage and select Deploy.

Enter the stage name Deploy TAS Service, select the Tanzu Application Services deployment type, and select Set Up Stage.

The new stage settings appear.

Create the Harness TAS service

Harness services represent your microservices or applications. You can add the same service to as many stages as you need. Services contain your artifacts, manifests, config files, and variables. For more information, go to services and environments overview.

Create a new service

Select the Service tab, then select Add Service.

Enter a service name. For example, TAS.

Services are persistent and can be used throughout the stages of this pipeline or any other pipeline in the project.

In Service Definition, in Deployment Type, verify that Tanzu Application Services is selected.

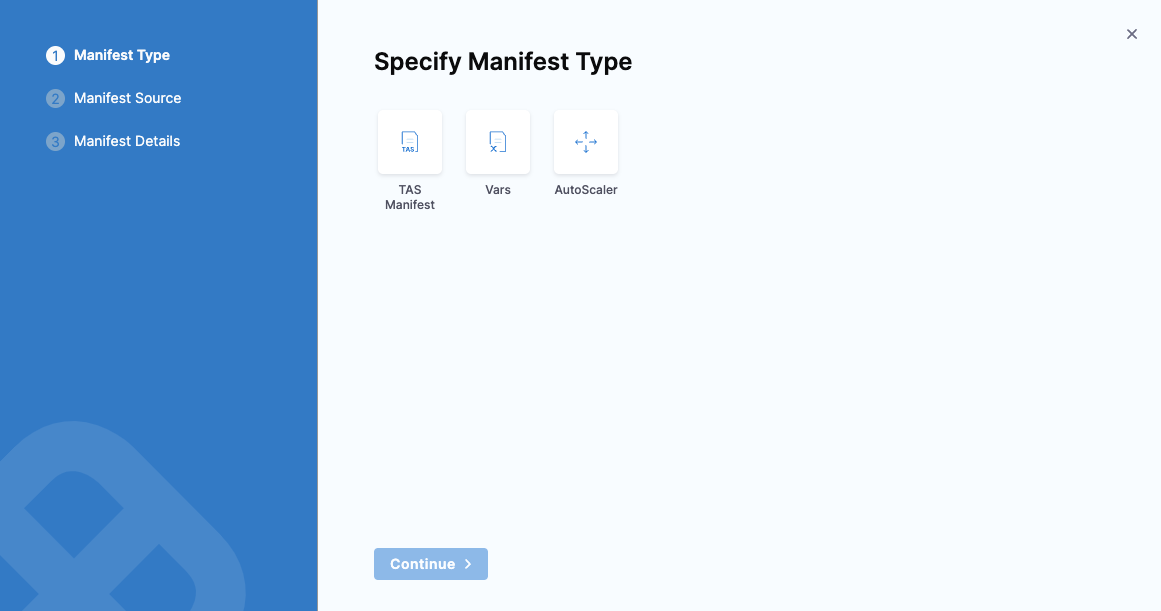

Add the manifest

In Manifests, select Add Manifest.

Harness uses TAS Manifest, Vars, and AutoScaler manifest types for defining TAS applications, instances, and routes.

You can use one TAS manifest and one autoscaler manifest only. You can use unlimited vars file manifests.

Select TAS Manifest and select Continue.

In Specify TAS Manifest Store, select Harness and select Continue.

In Manifest Details, enter a manifest name. For example, nginx.

Select File/Folder Path.

In Create or Select an Existing Config file, select Project. This is where we will create the manifest.

Select New, select New Folder, enter a folder name, and then select Create.

Select the new folder, select New, select New File, and then enter a file name. For example, enter manifest.

Enter the following in the manifest file, and then click Save.

applications:

- name: ((NAME))

  health-check-type: process

  timeout: 5

  instances: ((INSTANCE))

  memory: 750M

  routes:

  - route: ((ROUTE))

Select Apply Selected.

You can add only one manifest.yaml file.

Select Vars.yaml path and repeat steps 6.1 and 6.2 to create a vars file. Then, enter the following information:

NAME: harness_<+service.name>

INSTANCE: 1

ROUTE: harness_<+service.name>_<+infra.name>.apps.tas-harness.com

Select Apply Selected.

You can add any number of vars.yaml files.

Select AutoScaler.yaml and repeat steps 6.1 and 6.2 to create an autoscaler file. Then, enter the following information:

instance_limits:

  min: 1

  max: 2

rules:

- rule_type: "http_latency"

  rule_sub_type: "avg_99th"

  threshold:

    min: 100

    max: 200

scheduled_limit_changes:

- recurrence: 10

  executes_at: "2032-01-01T00:00:00Z"

  instance_limits:

    min: 1

    max: 2

Select Apply Selected.

You can add only one autoscaler.yaml file.

Select Submit.
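To see how the ((NAME)), ((INSTANCE)), and ((ROUTE)) placeholders in the manifest relate to the vars file, you can preview the substitution locally. This is an illustrative sketch only, not how Harness performs the substitution: `render_manifest` is a hypothetical helper, and the values `harness_TAS` and `harness_TAS_dev.apps.tas-harness.com` assume that `<+service.name>` resolves to TAS and `<+infra.name>` resolves to dev.

```shell
# render_manifest is a hypothetical helper for local previews only.
# It reads a manifest on stdin and replaces each ((KEY)) placeholder with the
# value passed as a KEY=value argument. Values must not contain | or &.
render_manifest() (
  text="$(cat)"
  for pair in "$@"; do
    key="${pair%%=*}"
    value="${pair#*=}"
    text="$(printf '%s\n' "$text" | sed "s|(($key))|$value|g")"
  done
  printf '%s\n' "$text"
)

# Assumed resolved values; Harness resolves the real expressions at runtime.
printf '%s\n' \
  'applications:' \
  '- name: ((NAME))' \
  '  instances: ((INSTANCE))' \
  '  routes:' \
  '  - route: ((ROUTE))' |
  render_manifest NAME=harness_TAS INSTANCE=1 ROUTE=harness_TAS_dev.apps.tas-harness.com
```

A placeholder with no matching KEY=value argument is left unchanged in the output, which makes missing vars entries easy to spot.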

Add the artifact for deployment

In Artifacts, select Add Artifact Source.

In Specify Artifact Repository Type, select Artifactory, and select Continue.

Info: For TAS deployments, Harness supports the following artifact sources. You connect Harness to these registries by using your registry account credentials.

- Artifactory

- Nexus

- Docker Registry

- Amazon S3

- Google Container Registry (GCR)

- Amazon Elastic Container Registry (ECR)

- Azure Container Registry (ACR)

- Google Artifact Registry (GAR)

- Google Cloud Storage (GCS)

- GitHub Package Registry

- Azure Artifacts

- Jenkins

For this tutorial, we will use Artifactory.

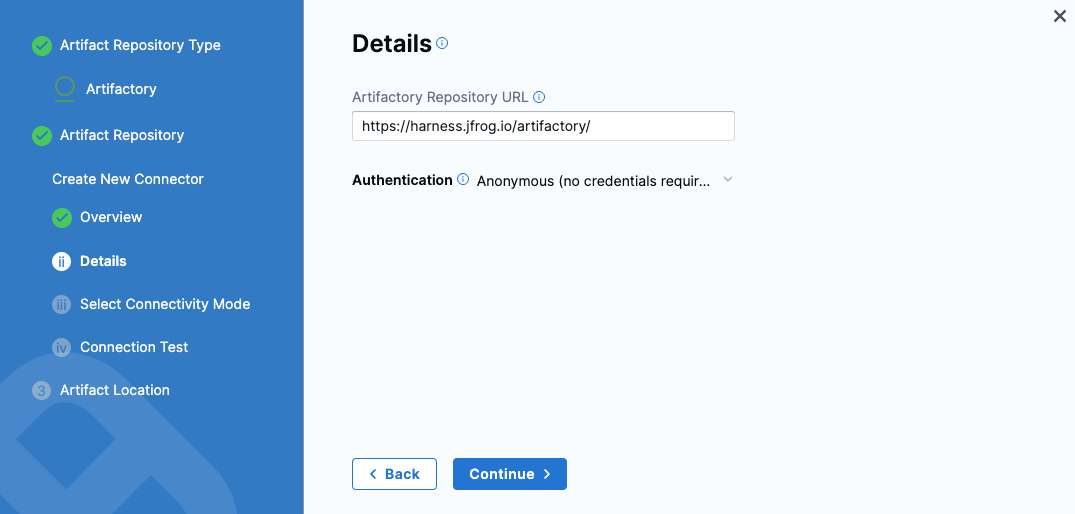

In Artifactory Repository, click New Artifactory Connector.

Enter a name for the connector, such as JFrog, then select Continue.

In Details, in Artifactory Repository URL, enter https://harness.jfrog.io/artifactory/.

In Authentication, select Anonymous, and select Continue.

In Delegates Setup, select Only use Delegate with all of the following tags and enter the name of the delegate created in connect to a TAS provider (step 8).

Select Save and Continue.

After the test connection succeeds, select Continue.

In Artifact Details, enter the following details:

- Enter an Artifact Source Name.

- Select Generic or Docker repository format.

- Select a Repository where the artifact is located.

- Enter the name of the folder or repository where the artifact is located.

- Select Value to enter a specific artifact name. You can also select Regex and enter a tag regex to filter the artifact.

Select Submit.

Define the TAS target environment

The target space is your TAS space. This is where you will deploy your application.

In Specify Environment, select New Environment.

Enter the name TAS tutorial and select Pre-Production.

Select Save.

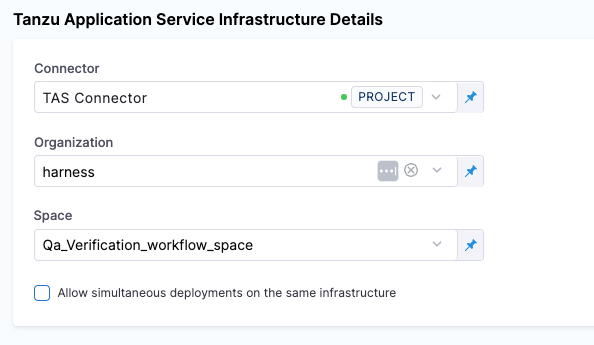

In Specify Infrastructure, select New Infrastructure.

Enter a name, and then verify that the selected deployment type is Tanzu Application Services.

Select the TAS connector you created earlier.

In Organization, select the TAS org in which you want to deploy.

In Space, select the TAS space in which you want to deploy.

Select Save.

TAS execution strategies

Now you can select the deployment strategy for this stage of the pipeline.

- Basic

- Canary

- Blue Green

- Rolling

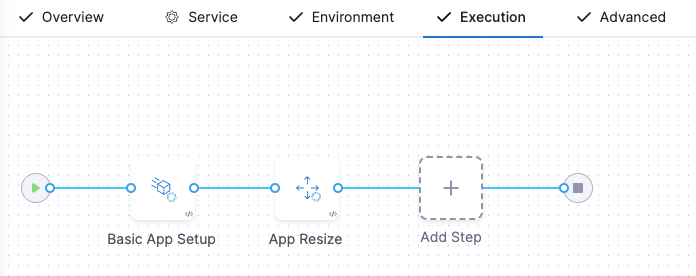

The TAS workflow for performing a basic deployment takes your Harness TAS service and deploys it on your TAS infrastructure definition.

In Execution Strategies, select Basic, then select Use Strategy.

The basic execution steps are added.

Select the Basic App Setup step to define Step Parameters.

The basic app setup configuration uses your manifest in Harness TAS to set up your application.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Instance Count - Select whether to Read from Manifest or Match Running Instances. The Match Running Instances setting can be used after your first deployment to override the instances in your manifest.

- Existing Versions to Keep - Enter the number of existing versions you want to keep. This is to roll back to a stable version if the deployment fails.

- Additional Routes - Enter additional routes if you want to add routes other than the ones defined in the manifests.

- Select Apply Changes.

Select the App Resize step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Ignore instance count in Manifest - Select this option to override the instance count defined in the manifest.yaml file with the values specified in the App Resize step.

- Total Instances - Set the number or percentage of running instances you want to keep.

- Desired Instances - Old Version - Set the number or percentage of instances for the previous version of the application you want to keep. If this field is left empty, the desired instance count will be the difference between the maximum possible instance count (from the manifest or match running instances count) and the number of new application instances.

- Select Apply Changes.
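The default for Desired Instances - Old Version described above is a simple subtraction. The sketch below works through it; `old_version_instances` is a hypothetical helper name, and clamping the result at zero is an assumption made here so the example never prints a negative count.

```shell
# old_version_instances is a hypothetical helper, not a Harness command.
# It prints the maximum possible instance count minus the number of new
# application instances, clamped at zero (the clamp is an assumption).
old_version_instances() (
  max_instances="$1"
  new_instances="$2"
  remaining=$(( max_instances - new_instances ))
  if [ "$remaining" -lt 0 ]; then
    remaining=0
  fi
  echo "$remaining"
)

# With a maximum of 4 instances and 1 new instance, this prints 3.
old_version_instances 4 1
```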

Add a Tanzu Command step to your stage if you want to execute custom Tanzu commands in this step.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Script - Select one of the following options.

- File Store - Select this option to choose a script from Project, Organization, or Account.

- Inline - Select this option to enter a script inline.

- Select Apply Changes.

Add an App Rollback step to your stage if you want to roll back to an older version of the application in case of deployment failure.

In Advanced, configure the following options.

Delegate Selector - Select the delegate(s) you want to use to execute this step. You can select one or more delegates for each pipeline step. You only need to select one of a delegate's tags to select it. All delegates with the tag are selected.

Conditional Execution - Use the conditions to determine when this step is executed. For more information, go to conditional execution settings.

Failure Strategy - Define the failure strategies to control the behavior of your pipeline when there is an error in execution. For more information, go to failure strategy references and define a failure strategy.

Expand the following section to view the error types and failure strategies supported for the steps in a Basic TAS deployment.

Error types and failure strategy

| Step name | Error type | Rollback Stage | Manual Intervention | Ignore Failure | Retry | Mark As Success | Abort | Mark As Failure |
|---|---|---|---|---|---|---|---|---|
| App Setup | Delegate Provisioning Errors | Supported, but rollback is skipped because app is not set up. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Delegate Restart | Supported, but rollback is skipped because app is not set up. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Timeout Errors | Supported, but rollback is skipped because app is not set up. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Execution-time Inputs Timeout Errors | Supported, but rollback is skipped because app is not set up. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Provisioning Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Restart | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Execution-time Inputs Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Rollback | Delegate Provisioning Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Delegate Restart | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Tanzu Command | Delegate Provisioning Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Delegate Restart | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |

Note: For the Tanzu Command step, Harness does not provide default rollback steps. You can do a rollback by configuring your own Rollback step.

Looping Strategy - Select Matrix, Repeat, or Parallelism looping strategy. For more information, go to looping strategies overview.

Policy Enforcement - Add or modify a policy set to be evaluated after the step is complete. For more information, go to CD governance.
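As a rough mental model of the Matrix looping strategy mentioned above, the sketch below expands a set of axes into one run per combination. It is plain Python, not Harness configuration, and it rests on the assumption that a matrix runs the step once per combination of axis values. The axis names `space` and `instances` and the function `expand_matrix` are hypothetical.

```python
from itertools import product


def expand_matrix(axes):
    """Return one dict per combination of axis values (illustrative only)."""
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*(axes[n] for n in names))]


# Hypothetical axes: two TAS spaces and two instance counts.
runs = expand_matrix({"space": ["dev", "qa"], "instances": [1, 2]})
for run in runs:
    print(run)
```

Two axes with two values each produce four runs, so the number of executions grows quickly as axes are added.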

Select Save.

Now the pipeline stage is complete and you can deploy.

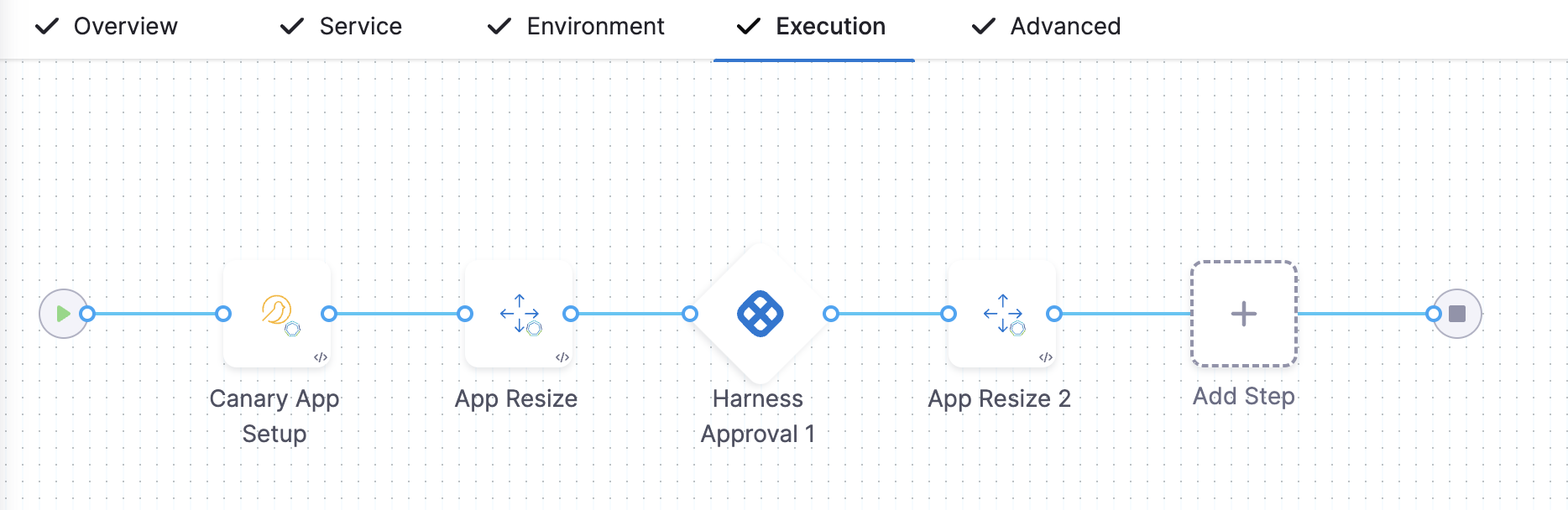

The TAS canary deployment is a phased approach to deploy application instances gradually, ensuring the stability of a small percentage of instances before rolling out to your desired instance count. With canary deployment, all nodes in a single environment are incrementally updated in small phases. You can add verification steps as needed to proceed to the next phase.

Use this deployment method when you want to verify whether the new version of the application is working correctly in your production environment.

The canary deployment contains Canary App Setup and App Resize steps. You can add more App Resize steps to perform gradual deployment.

In Execution Strategies, select Canary, and then click Use Strategy.

The canary execution steps are added.

Select the Canary App Setup step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Instance Count - Select whether to Read from Manifest or Match Running Instances.

The Match Running Instances setting can be used after your first deployment to override the instances in your manifest.

- Resize Strategy - Select either the Add new instances first, then downsize old instances strategy or the Downsize old instances first, then add new instances strategy. You can also set Resize Strategy as a runtime input.

- Existing Versions to Keep - Enter the number of existing versions you want to keep. This is to roll back to a stable version if the deployment fails.

- Additional Routes - Enter additional routes if you want to add routes other than the ones defined in the manifests.

- Select Apply Changes.

Select the App Resize step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Ignore instance count in Manifest - Select this option to override the instance count mentioned in the manifest.yaml file with the values mentioned in the App Resize step.

- Total Instances - Set the number or percentage of running instances you want to keep.

- Desired Instances - Old Version - Set the number or percentage of instances for the previous version of the application you want to keep. If this field is left empty, the desired instance count will be the difference between the maximum possible instance count (from the manifest or match running instances count) and the number of new application instances.

- Select Apply Changes.

Add more App Resize steps to perform gradual deployment.
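The default for Desired Instances - Old Version described above is simple arithmetic. The following Python sketch works through it for a hypothetical three-phase canary. The function names, the phase percentages, the maximum of 8 instances, and the choice to round percentages up are all assumptions made for illustration, not documented Harness behavior.

```python
import math


def resolve_count(value, max_instances):
    """Turn a count (int) or a percentage string such as "25%" into a count.

    Rounding percentages up is an assumption of this sketch.
    """
    if isinstance(value, str) and value.endswith("%"):
        return math.ceil(max_instances * float(value[:-1]) / 100)
    return int(value)


def old_version_instances(max_instances, new_instances, desired_old=None):
    """Instances of the previous version to keep after an App Resize step."""
    if desired_old is None:
        # Field left empty: difference between the maximum count and the new count.
        return max(max_instances - new_instances, 0)
    return resolve_count(desired_old, max_instances)


max_instances = 8  # hypothetical: from the manifest or the running instance count
for total in ["25%", "50%", "100%"]:  # one App Resize step per phase
    new = resolve_count(total, max_instances)
    old = old_version_instances(max_instances, new)
    print(f"Total Instances {total}: new={new}, old={old}")
```

In this model each additional App Resize step moves more of the fixed instance budget from the old version to the new one.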

Add a Tanzu Command step to your stage if you want to execute custom Tanzu commands in this step.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Script - Select one of the following options.

- File Store - Select this option to choose a script from Project, Organization, or Account.

- Inline - Select this option to enter a script inline.

- Select Apply Changes.

Add an App Rollback step to your stage if you want to roll back to an older version of the application in case of deployment failure.

In Advanced, configure the following options.

Delegate Selector - Select the delegate(s) you want to use to execute this step. You can select one or more delegates for each pipeline step. You only need to select one of a delegate's tags to select it. All the delegates with that specific tag are selected.

Conditional Execution - Use the conditions to determine when this step is executed. For more information, go to conditional execution settings.

Failure Strategy - Define the failure strategies to control the behavior of your pipeline when there is an error in execution. For more information, go to failure strategy references and define a failure strategy.

Expand the following section to view the error types and failure strategies supported for the steps in a Canary TAS deployment.

Error types and failure strategy

| Step name | Error type | Rollback Stage | Manual Intervention | Ignore Failure | Retry | Mark As Success | Abort | Mark As Failure |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| App Setup | Delegate Provisioning Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Delegate Restart | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Timeout Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Execution-time Inputs Timeout Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Provisioning Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Restart | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Execution-time Inputs Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Rollback | Delegate Provisioning Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Delegate Restart | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| App Rollback | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Tanzu Command | Delegate Provisioning Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Delegate Restart | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |

Note: For the Tanzu Command step, Harness does not provide default rollback steps. You can do a rollback for this step by configuring your own Rollback step.

Looping Strategy - Select Matrix, Repeat, or Parallelism looping strategy. For more information, go to looping strategies overview.

Policy Enforcement - Add or modify a policy set to be evaluated after the step is complete. For more information, go to CD governance.

Select Save.

Now the pipeline stage is complete and can be deployed.

Harness TAS blue green deployments use the route(s) in the TAS manifest and a temporary route you specify in the deployment configuration.

A blue green deployment first deploys the application on the temporary route, using the App Setup configuration. Next, in the App Resize configuration, Harness maintains the number of instances at 100% of the instances specified in the TAS manifest.

Use this deployment method when you want to perform verification in a full production environment, or when you want zero downtime.

For blue green deployments, the App Resize step defaults to 100% because, unlike in a canary deployment, it does not change the number of instances. However, you can define a different percentage in the App Resize step. In blue green, you deploy the new application to the number of instances set in the App Setup step and keep the old application at the same number of instances.

Once the deployment is successful, the Swap Routes configuration switches the networking routing, directing production traffic (green) to the new application and stage traffic (blue) to the old application.

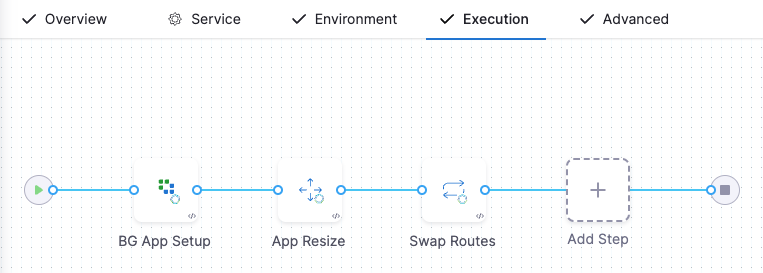
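To make the route swap concrete, here is a small Python model of the flow described above. It only tracks which routes point at which application version. The application names, route hostnames, and the `swap_routes` helper are hypothetical, and the real Swap Routes step does considerably more than this.

```python
def swap_routes(apps, new_app, old_app):
    """Exchange the route sets of the new and old application (illustrative)."""
    swapped = dict(apps)
    swapped[new_app], swapped[old_app] = apps[old_app], apps[new_app]
    return swapped


# Before the swap: the old version serves production traffic,
# and the new version is reachable only on a temporary route.
apps = {
    "orders-old": ["orders.example.com"],       # final (green) route
    "orders-new": ["orders-temp.example.com"],  # temporary (blue) route
}

after = swap_routes(apps, new_app="orders-new", old_app="orders-old")
print(after["orders-new"])  # routes now held by the new version
print(after["orders-old"])  # routes now held by the old version
```

Because both versions keep running during the exchange, production traffic always has a target, which is the basis of the zero-downtime property.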

In Execution Strategies, select Blue Green, and then click Use Strategy.

The blue green execution steps are added.

Select the BG App Setup step to define Step Parameters.

Name - Edit the deployment step name.

Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

Instance Count - Select whether to Read from Manifest or Match Running Instances.

The Match Running Instances setting can be used after your first deployment to override the instances in your manifest.

Existing Versions to Keep - Enter the number of existing versions you want to keep. This is to roll back to a stable version if the deployment fails.

Additional Routes - Add routes in addition to the routes defined in the TAS manifest.

Additional Routes has two uses in blue green deployments.

- Select routes that you want to map to the application in addition to the routes already defined for it in the manifest in your Harness service.

- You can also omit routes in the manifest in your Harness service, and select them in Additional Routes. The routes selected in Additional Routes will be used as the final (green) routes for the application.

Temporary Routes - Add temporary routes in addition to additional routes.

Later, in the Swap Routes step, Harness will replace these routes with the routes in the TAS manifest in your service.

If you do not select a route in Temporary Routes, Harness will create one automatically.

Select Apply Changes.
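The two uses of Additional Routes, and the fallback for Temporary Routes, can be summarized in a short Python sketch. The helper names and hostnames are hypothetical, and the generated temporary route is only a placeholder, because the format Harness uses for the automatically created route is not described here.

```python
def final_routes(manifest_routes, additional_routes):
    """Final (green) routes: manifest routes plus Additional Routes, no duplicates."""
    merged = []
    for route in list(manifest_routes) + list(additional_routes):
        if route not in merged:
            merged.append(route)
    return merged


def temporary_routes(selected, app_name):
    """Use the selected temporary routes, or a placeholder when none are selected."""
    if selected:
        return list(selected)
    # Placeholder only: stands in for the route Harness creates automatically.
    return [f"{app_name}-temp.example.com"]


# Use 1: routes in the manifest plus extra routes.
print(final_routes(["orders.example.com"], ["orders-eu.example.com"]))
# Use 2: no routes in the manifest, so Additional Routes become the final routes.
print(final_routes([], ["orders.example.com"]))
# No temporary route selected, so a placeholder is generated.
print(temporary_routes([], "orders"))
```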

Select the App Resize step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Ignore instance count in Manifest - Select this option to override the instance count defined in the manifest.yaml file with the values specified in the App Resize step.

- Total Instances - Set the number or percentage of running instances you want to keep.

- Desired Instances - Old Version - Set the number or percentage of instances for the previous version of the application you want to keep. If this field is left empty, the desired instance count will be the difference between the maximum possible instance count (from the manifest or match running instances count) and the number of new application instances.

- Select Apply Changes.

Select the Swap Routes step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Downsize Old Application - Select this option to downsize older applications.

- Select Apply Changes.

Add a Tanzu Command step to your stage if you want to execute custom Tanzu commands in this step.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Script - Select one of the following options.

- File Store - Select this option to choose a script from Project, Organization, or Account.

- Inline - Select this option to enter a script inline.

- Select Apply Changes.

Add a Swap Rollback step to your stage if you want to roll back to an older version of the application in case of deployment failure.

When Swap Rollback is used in a deployment's Rollback Steps, the application that was active before the deployment is restored to its original state with the same instances and routes it had before the deployment.

The failed application is deleted.

In Advanced, configure the following options.

Delegate Selector - Select the delegate(s) you want to use to execute this step. You can select one or more delegates for each pipeline step. You only need to select one of a delegate's tags to select it. All the delegates with the specified tag are selected.

Conditional Execution - Use the conditions to determine when this step should be executed. For more information, go to conditional execution settings.

Failure Strategy - Define the failure strategies to control the behavior of your pipeline when there is an error in execution. For more information, go to failure strategy references and define a failure strategy.

Expand the following section to view the error types and failure strategies supported for the steps in a Blue Green TAS deployment.

Error types and failure strategy

| Step name | Error type | Rollback Stage | Manual Intervention | Ignore Failure | Retry | Mark As Success | Abort | Mark As Failure |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| App Setup | Delegate Provisioning Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Delegate Restart | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Timeout Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Setup | Execution-time Inputs Timeout Errors | Supported, but not required. Rollback step is skipped. | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Provisioning Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Delegate Restart | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| App Resize | Execution-time Inputs Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| Swap Routes | Delegate Provisioning Errors | Support coming soon | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required |
| Swap Routes | Delegate Restart | Support coming soon | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required |
| Swap Routes | Timeout Errors | Support coming soon | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required |
| Swap Routes | Execution-time Inputs Timeout Errors | Support coming soon | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required | Rollback changes required |
| Swap Rollback | Delegate Provisioning Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Swap Rollback | Delegate Restart | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Swap Rollback | Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Swap Rollback | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Tanzu Command | Delegate Provisioning Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Delegate Restart | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |

Note: For the Tanzu Command step, Harness does not provide any default rollback steps. You can do a rollback for this step by configuring your own Rollback step.

Looping Strategy - Select Matrix, Repeat, or Parallelism looping strategy. For more information, go to looping strategies overview.

Policy Enforcement - Add or modify a policy set to be evaluated after the step is complete. For more information, go to CD governance.

Select Save.

Now the pipeline stage is complete and can be deployed.

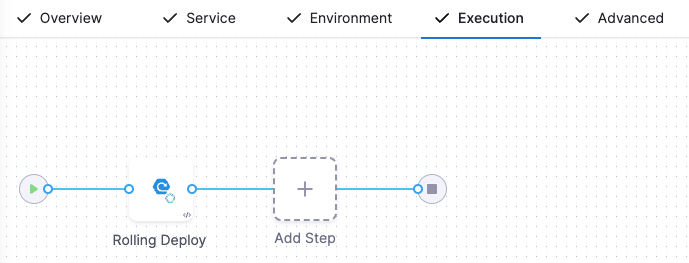

A TAS rolling deployment incrementally updates the instances in a single environment, one by one, with the new service or artifact version.

Use this deployment method when you want to support both new and old deployments. You can also use it in load-balancing scenarios that require reduced downtime.
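The one-by-one replacement can be pictured with the following Python sketch. It is a toy model of a rolling update in general, not Harness or TAS code, and the version labels and the `rolling_update` function are hypothetical.

```python
def rolling_update(instances, new_version):
    """Yield the instance list after each single-instance replacement."""
    current = list(instances)
    for index in range(len(current)):
        current[index] = new_version  # replace one instance at a time
        yield list(current)


for state in rolling_update(["v1", "v1", "v1"], "v2"):
    print(state)
```

In every intermediate state both versions are serving, which is why this strategy suits scenarios that must support old and new versions at the same time.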

In Execution Strategies, select Rolling, and then click Use Strategy.

The rolling deploy step is added.

Select the Rolling Deploy step to define Step Parameters.

- Name - Edit the deployment step name.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Additional Routes - Add routes in addition to the routes defined in the TAS manifest.

Add a Tanzu Command step to your stage if you want to execute custom Tanzu commands in this step.

- Timeout - Set how long you want the Harness delegate to wait for the TAS cloud to respond to API requests before timeout.

- Script - Select one of the following options.

- File Store - Select this option to choose a script from Project, Organization, or Account.

- Inline - Select this option to enter a script inline.

- Select Apply Changes.

Add a Rolling Rollback step to your stage if you want to roll back to an older version of the application in case of deployment failure.

In Advanced, configure the following options.

Delegate Selector - Select the delegate(s) you want to use to execute this step. You can select one or more delegates for each pipeline step. You only need to select one of a delegate's tags to select it. All delegates with the specified tag are selected.

Conditional Execution - Use the conditions to determine when this step should be executed. For more information, go to conditional execution settings.

Failure Strategy - Define the failure strategies to control the behavior of your pipeline when there is an error in execution. For more information, go to failure strategy references and define a failure strategy.

Expand the following section to view the error types and failure strategies supported for the steps in a Rolling TAS deployment.

Error types and failure strategy

| Step name | Error type | Rollback Stage | Manual Intervention | Ignore Failure | Retry | Mark As Success | Abort | Mark As Failure |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Rolling Deploy | Delegate Provisioning Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| Rolling Deploy | Delegate Restart | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| Rolling Deploy | Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| Rolling Deploy | Execution-time Inputs Timeout Errors | Supported | Supported | Supported | Supported | Supported | Supported | Supported |
| Rolling Rollback | Delegate Provisioning Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Rolling Rollback | Delegate Restart | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Rolling Rollback | Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Rolling Rollback | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Invalid | Supported | Supported | Supported |
| Tanzu Command | Delegate Provisioning Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Delegate Restart | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |
| Tanzu Command | Execution-time Inputs Timeout Errors | Invalid | Supported | Supported | Supported | Supported | Supported | Supported |

Note: For the Tanzu Command step, Harness does not provide any default rollback steps. You can do a rollback for this step by configuring your own Rollback step.

Looping Strategy - Select Matrix, Repeat, or Parallelism looping strategy. For more information, go to looping strategies overview.

Policy Enforcement - Add or modify a policy set to be evaluated after the step is complete. For more information, go to CD governance.
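If you want to check a planned failure strategy against the Rolling support matrix above before editing a pipeline, the matrix is small enough to encode directly. The Python sketch below does so. The dictionary layout and the `is_supported` helper are hypothetical conveniences, and the values are copied from the matrix, where each step has the same entries for all four error types.

```python
ACTIONS = [
    "Rollback Stage", "Manual Intervention", "Ignore Failure", "Retry",
    "Mark As Success", "Abort", "Mark As Failure",
]

# Actions listed as Invalid for each step; every other action is Supported.
INVALID = {
    "Rolling Deploy": set(),
    "Rolling Rollback": {"Rollback Stage", "Retry"},
    "Tanzu Command": {"Rollback Stage"},
}


def is_supported(step, action):
    """Return True if the action is listed as Supported for the step."""
    if step not in INVALID or action not in ACTIONS:
        raise KeyError(f"unknown step or action: {step!r}, {action!r}")
    return action not in INVALID[step]


print(is_supported("Rolling Rollback", "Retry"))
print(is_supported("Tanzu Command", "Retry"))
```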

Select Save.

Now the pipeline stage is complete and can be deployed.

Deploy and review

Click Save > Save Pipeline, then select Run. Now you can select the specific artifact to deploy.

Select a Primary Artifact.

Select a Tag.

Select the following Infrastructure parameters.

- Connector

- Organization

- Space

Click Run Pipeline. Harness verifies the pipeline and then runs it. You can see the status of the deployment, and pause or abort it.

Toggle Console View to watch the deployment with more detailed logging.

The deployment was successful.

In your project's Deployments, you can see the deployment listed.

Next steps

See CD tutorials for other deployment features.