Continuous Deployment FAQs

This article addresses some frequently asked questions about Harness Continuous Deployments (CD).

- Before You Begin

- General

- What can I deploy using Harness?

- What is a Service Instance in Harness?

- How often does Harness Sync with my Deployed Service Instances?

- What if I add more Service Instance infrastructure outside of Harness?

- When is a Service Instance removed?

- If the instance/pod is in a failed state does it still count towards the service instance count?

- What deployment strategies can I use?

- How do I filter deployments on the Deployments page?

- How do I know which Harness Delegates were used in a deployment?

- Can I export deployment logs?

- Can I restrict deployments to specific User Groups?

- Can I deploy a Workflow to multiple infrastructures at the same time?

- Can I resume a failed deployment?

- Can I pause all deployments?

- AWS AMI/ASG

- AWS AMI/ASG with Spotinst

- AWS ECS

- What is a Harness ECS deployment?

- What ECS strategies can I use?

- What deployment strategies can I use?

- What limitations are there on ECS deployments?

- Can I do traffic shifting with ECS deployments?

- What ECS networking strategies can I use?

- Does Harness support ECS auto scaling?

- Can I use ECS definitions in my repo?

- Can I deploy sidecar containers?

- Can I run an ECS task?

- AWS CloudFormation

- AWS Lambda

- Azure

- CI/CD: Artifact Build and Deploy Pipelines

- Google Cloud Builds

- Native Helm

- IIS (.NET)

- Kubernetes

- What is a Harness Kubernetes deployment?

- What workloads can Harness deploy in a Kubernetes cluster?

- For Kubernetes, does Harness count only pods as a service instance (SI)? What about a ConfigMap or a Secret?

- How is Harness sure that a pod is a service instance?

- If I create a pod using Harness and keep managing it, is it still counted as a service instance?

- Does Harness support OpenShift?

- Can I use Helm?

- Can I deploy Helm charts without adding an artifact source to Harness?

- Can I use OpenShift?

- Can I run Kubernetes jobs?

- Can I deploy Kubernetes resources using CRDs?

- Can I deploy resources outside of the main Kubernetes workload?

- Can I ignore a manifest during deployment?

- Can I pull an image from a private registry?

- Can I use remote sources for my manifests?

- Can I provision Kubernetes infrastructure?

- What deployment strategies can I use with Kubernetes?

- Can I create namespaces during deployment?

- Do you support Kustomize?

- Can I use Ingress traffic routing?

- Can I perform traffic splitting with Istio?

- Can I perform traffic splitting without Istio?

- Can I scale my pods up and down?

- How do I delete Kubernetes resources?

- Can I use Helm 3 with Kubernetes?

- Can I use Helm Chart Hooks in Kubernetes Deployments?

- Tanzu Application Service (TAS)

- Terraform

- Traditional (SSH)

- Configure as Code

- Uncommon Deployment Platforms

- Harness Applications

- Harness Services

- Artifacts

- Harness Environments

- Harness Infrastructure Definitions

- Harness Workflows

- Harness Pipelines

- Harness Infrastructure Provisioners

- Harness Triggers

- Approvals

- Harness Variables Expressions

Before You Begin

General

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What can I deploy using Harness?

Harness supports all of the most common platforms and deployment use cases. For example, you can deploy applications to cloud platforms, deploy VM images and auto scaling groups, run CI/CD pipelines, and dynamically build infrastructure.

Always start with the Quickstarts in Start Here. These will take you from novice to advanced Harness user in a matter of minutes.

The following topics will walk you through how Harness implements common deployments according to platforms and scenarios:

- AMI (Amazon Machine Image)

- AWS Elastic Container Service (ECS)

- AWS Lambda

- Azure

- CI/CD: Artifact Build and Deploy Pipelines

- Google Cloud

- Native Helm

- IIS (.NET)

- Kubernetes (includes Helm, OpenShift, etc)

- Tanzu Application Service (TAS)

- Traditional Deployments

- Custom Deployments

Harness also supports other key platforms that help make your CD powerful and efficient:

- Terraform

- CloudFormation

- Configuration as Code (work exclusively in YAML and sync with your Git repos)

- Harness GitOps

For topics on general CD modeling in Harness, see Model Your CD Pipeline.

What is a Service Instance in Harness?

Harness licensing is determined by the services you deploy.

See the Pricing FAQ at Harness Pricing.

How often does Harness Sync with my Deployed Service Instances?

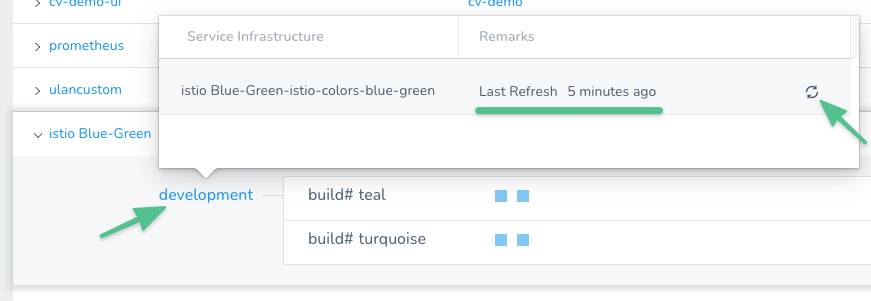

Harness syncs just after the deployment, and then syncs every 10 minutes.

You can check the status using the Instances section of the Services dashboard by hovering over the Environment.

You can also sync on demand using the Refresh icon. You don't need to rerun the deployment.

What if I add more Service Instance infrastructure outside of Harness?

If you increase the Harness-deployed service instance infrastructure outside of Harness, Harness considers this increase part of the service instance infrastructure and licensing is applied.

See the Pricing FAQ at Harness Pricing.

When is a Service Instance removed?

If Harness cannot find the service instance infrastructure it deployed, it removes it from the Services dashboard.

If Harness cannot connect to the service instance infrastructure, it will retry until it determines if the service instance infrastructure is still there.

If the instance/pod is in a failed state does it still count towards the service instance count?

Harness performs a steady state check during deployment and requires that each instance/pod reach a healthy state.

A Kubernetes liveness probe failing later would mean the pod is restarted. Harness continues to count it as a service instance.

A Kubernetes readiness probe failing later would mean traffic is no longer routed to the pods. Harness continues to count pods in that state.

Harness does not count an instance/pod if it no longer exists, for example, after the replica count is reduced.
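The counting rules above can be summarized in a short sketch. This is only an illustration of the rules as stated in this FAQ, not Harness code: the `Pod` class, its fields, and `count_service_instances` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    # Hypothetical model of a deployed pod; not a Harness or Kubernetes type.
    name: str
    exists: bool        # False once the pod is gone (for example, after a scale-down)
    ready: bool = True  # result of the readiness probe
    restarts: int = 0   # restarts triggered by liveness probe failures


def count_service_instances(pods: list[Pod]) -> int:
    """Count every pod that still exists, regardless of probe state."""
    return sum(1 for pod in pods if pod.exists)


pods = [
    Pod("web-0", exists=True),
    Pod("web-1", exists=True, ready=False),  # failing readiness probe: still counted
    Pod("web-2", exists=True, restarts=3),   # restarted by liveness probe: still counted
    Pod("web-3", exists=False),              # removed by scale-down: not counted
]
print(count_service_instances(pods))
```

Only the `exists` flag affects the result; readiness and restart counts are carried along to show that they are deliberately ignored.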

What deployment strategies can I use?

Harness supports all deployment strategies, such as blue/green, rolling, and canary.

See Deployment Concepts and Strategies.

How do I filter deployments on the Deployments page?

You can filter deployments on the Deployments page according to multiple criteria, and save these filters as a quick way to filter deployments in the future.

How do I know which Harness Delegates were used in a deployment?

Harness displays which Delegate performed a task in the Deployments page. You simply click on a command in a deployment's graph and select View Delegate Selection in its details.

See View the Delegates Used in a Deployment.

Can I export deployment logs?

Yes. Large enterprises want to save deployment information to their centralized archives for years to come. Harness includes deployment exports to serve this need.

Deployment exports give you control over what is stored and audited, and allow you to save deployment information in your archives.

Harness also provides an API for deployment exports, enabling you to extract logs programmatically.

Can I restrict deployments to specific User Groups?

Yes. Using Harness RBAC functionality, you can restrict the deployments a User Group may perform to specific Harness Applications and their subordinate Environments.

Restricting a User Group's deployments to specific Environments enables you to manage which target infrastructures are impacted by your different teams. For example, you can have Dev environments only impacted by Dev teams, and QA environments only impacted by QA teams.

See Restrict Deployment Access to Specific Environments.

Can I deploy a Workflow to multiple infrastructures at the same time?

Yes. Harness lets you deploy a single Workflow to several infrastructures.

First you template the Infrastructure Definition setting, add the Workflow to a Pipeline, and then select multiple infrastructures when you deploy the Pipeline.

Next, Harness reruns the same Workflow for each infrastructure, in parallel.
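As a rough mental model of this fan-out (not how Harness is implemented), the sketch below runs one stand-in Workflow function once per selected infrastructure, concurrently. `run_workflow`, `deploy_to_all`, and the infrastructure names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def run_workflow(workflow: str, infrastructure: str) -> str:
    # Stand-in for a single Workflow execution against one infrastructure.
    return f"{workflow} -> {infrastructure}"


def deploy_to_all(workflow: str, infrastructures: list[str]) -> list[str]:
    """Run the same Workflow once per infrastructure, in parallel."""
    if not infrastructures:
        return []
    with ThreadPoolExecutor(max_workers=len(infrastructures)) as pool:
        # pool.map returns results in the same order as the input list.
        return list(pool.map(lambda infra: run_workflow(workflow, infra), infrastructures))


results = deploy_to_all("rolling-deploy", ["qa-cluster", "staging-cluster"])
print(results)
```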

See Deploy a Workflow to Multiple Infrastructures Simultaneously.

Can I resume a failed deployment?

Yes. You can resume Pipeline deployments that fail during execution.

Factors like changes to resource access or infrastructure issues can cause deployment failure. In such a scenario, rerunning an entire Pipeline can be costly and also time-consuming.

Harness provides an option to resume your Pipeline deployment from the first failed stage or from any successfully executed stage before it. Fix the error that caused the failure and then resume your deployment. This also helps you avoid rerunning stages, such as a Build Workflow, that have already built and collected an artifact.

The stage you select to resume from and all subsequent stages are executed. Stages preceding the one you selected are not executed again.
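The resume behavior can be illustrated with a small sketch. It assumes a Pipeline is a simple ordered list of stage names; `stages_to_run` and the stage names are hypothetical and only model the rule described above.

```python
def stages_to_run(stages: list[str], failed: str, resume_from: str) -> list[str]:
    """Return the stages that execute when resuming a failed Pipeline.

    The resume point must be the failed stage or an earlier stage.
    """
    failed_idx = stages.index(failed)
    resume_idx = stages.index(resume_from)
    if resume_idx > failed_idx:
        raise ValueError("resume point must be the failed stage or an earlier one")
    # The selected stage and everything after it run; earlier stages are skipped.
    return stages[resume_idx:]


pipeline = ["build", "deploy-qa", "approval", "deploy-prod"]
print(stages_to_run(pipeline, failed="deploy-qa", resume_from="deploy-qa"))
```

In this example the `build` stage is not rerun, so the artifact it collected is reused.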

See Resume Pipeline Deployments.

Can I pause all deployments?

Yes. You can stop all of your deployments using Harness Deployment Freeze.

Deployment Freeze is a Harness Governance feature that stops all Harness deployments, including their Triggers. A deployment freeze helps ensure stability during periods of low engineering and support activity, such as holidays, trade shows, or company events.

See Pause All Triggers using Deployment Freeze.

AWS AMI/ASG

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness AMI/ASG deployment?

Harness uses existing Amazon Machine Images (AMIs) and AWS Auto Scaling Groups (ASGs) to deploy new ASGs and instances to Amazon Elastic Compute Cloud (EC2).

You can deploy using basic, canary, and blue/green strategies.

See AWS AMI Quickstart and AWS AMI Deployments Overview.

Are public AMIs supported?

No. Harness only supports private AMIs.

AWS EC2 allows you to share an AMI so that all AWS accounts can launch it. AMIs shared this way are called public AMIs. Harness does not support public AMIs.

Can I do traffic shifting with AMI/ASG deployments?

Yes. Harness provides two methods:

- Incrementally Shift Traffic: In this Workflow strategy, you specify a Production Listener and Rule with two Target Groups for the new ASG to use. Next, you add multiple Shift Traffic Weight steps.

  Each Shift Traffic Weight step increments the percentage of traffic that shifts to the Target Group for the new ASG.

  Typically, you add Approval steps between each Shift Traffic Weight to verify that the traffic may be increased.

- Instantly Shift Traffic: In this Workflow strategy, you specify Production and Stage Listener Ports and Rules to use, and then a Swap Production with Stage step swaps all traffic from Stage to Production.
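To make the incremental option concrete, here is a minimal sketch of how traffic is divided after each Shift Traffic Weight step. It assumes each step sets the new ASG's share as an absolute percentage and the old ASG receives the remainder; `shift_plan` and the sample weights are hypothetical.

```python
def shift_plan(new_asg_weights: list[int]) -> list[tuple[int, int]]:
    """Return (new ASG %, old ASG %) after each Shift Traffic Weight step."""
    plan = []
    for weight in new_asg_weights:
        if not 0 <= weight <= 100:
            raise ValueError(f"weight must be between 0 and 100, got {weight}")
        plan.append((weight, 100 - weight))
    return plan


# Three steps, typically separated by Approval steps.
for new_pct, old_pct in shift_plan([10, 50, 100]):
    print(f"new ASG: {new_pct}%  old ASG: {old_pct}%")
```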

See AMI Blue/Green Deployment.

How does Harness roll back and downsize old ASGs?

Harness identifies the ASGs it deploys using the Harness Infrastructure Definition used to deploy them. During deployments, Harness tags the new ASG with an Infrastructure Definition ID.

It uses that ID to identify the previous ASG versions and downsize them.
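The tag-based lookup can be pictured with the following sketch. The tag key `infra-definition-id`, the dictionary layout, and `previous_asgs` are all hypothetical; the actual tag name Harness applies is not given in this FAQ.

```python
INFRA_TAG_KEY = "infra-definition-id"  # hypothetical tag key, for illustration only


def previous_asgs(asgs: list[dict], infra_definition_id: str, new_asg_name: str) -> list[str]:
    """Return names of older ASGs that carry the same Infrastructure Definition ID tag."""
    return [
        asg["name"]
        for asg in asgs
        if asg.get("tags", {}).get(INFRA_TAG_KEY) == infra_definition_id
        and asg["name"] != new_asg_name
    ]


asgs = [
    {"name": "app__1", "tags": {INFRA_TAG_KEY: "infra-123"}},
    {"name": "app__2", "tags": {INFRA_TAG_KEY: "infra-123"}},
    {"name": "other__1", "tags": {INFRA_TAG_KEY: "infra-999"}},
    {"name": "untagged", "tags": {}},
]
print(previous_asgs(asgs, "infra-123", new_asg_name="app__2"))
```

ASGs without the tag, or with a different ID, are never selected for downsizing in this model.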

See How Does Harness Downsize Old ASGs?.

AWS AMI/ASG with Spotinst

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

Do you support Spotinst?

Yes. You can configure and execute AMI/ASG deployments—using Blue/Green and Canary strategies—via the Spotinst Elastigroup management platform.

See AMI Spotinst Elastigroup Deployments Overview.

Can I do traffic shifting with Spotinst deployments?

Yes. There are two Blue/Green deployment options for Spotinst, defined by the traffic-shifting strategy you want to use:

- Incrementally Shift Traffic: In this Workflow, you specify a Production Listener and Rule with two Target Groups for the new Elastigroup to use. Next, you add multiple Shift Traffic Weight steps.

  Each Shift Traffic Weight step increments the percentage of traffic that shifts to the Target Group for the new Elastigroup.

  Typically, you add Approval steps between each Shift Traffic Weight to verify that the traffic may be increased.

- Instantly Shift Traffic: In this Workflow, you specify Production and Stage Listener Ports and Rules to use, and then a Swap Production with Stage step swaps all traffic from Stage to Production.

See Spotinst Blue/Green Deployments Overview.

Can I perform verification on Spotinst traffic shifting?

Yes, for Spotinst Blue/Green deployments.

See Configure Spotinst Traffic Shift Verification.

AWS ECS

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness ECS deployment?

Harness deploys applications to an AWS ECS cluster.

See AWS ECS Quickstart and AWS ECS Deployments Overview.

What ECS strategies can I use?

Harness supports the following ECS strategies:

- Replica Strategy.

- Daemon Strategy.

See ECS Services.

What deployment strategies can I use?

Harness supports:

- Canary Deployment with Replica Scheduling.

- Basic Deployment with Daemon Scheduling.

- Blue/Green Workflow.

See ECS Workflows.

What limitations are there on ECS deployments?

Harness ECS deployments have the following limits:

- For EC2, the required `${DOCKER_IMAGE_NAME}` placeholder must be in your task definition. See Review: Task Definition Placeholders.

- For Fargate, the required `${EXECUTION_ROLE}` placeholder must be in your task definition. See Review: Task Definition Placeholders.

- You can use remote files for the task definition and the service definitions, or you can use a remote task definition and inline service specification.

- You cannot use an inline task definition and remote service specification.

- Remote files must be in JSON format. See Use Remote ECS Task and Service Definitions in Git Repos.

- Remote files must be formatted to meet ECS JSON formatting standards. See task definition and service definition parameters from AWS.

- Remote definition files are supported for Git repos only. AWS S3 buckets will be supported in the near future.
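The inline/remote combinations listed above can be checked with a small sketch. It is illustrative only: `is_supported_combination` is a hypothetical helper, and treating the inline/inline pair as supported is an assumption, since the list above does not mention it explicitly.

```python
VALID_SOURCES = {"inline", "remote"}


def is_supported_combination(task_definition: str, service_definition: str) -> bool:
    """Check a task/service definition source pair against the limits above."""
    if task_definition not in VALID_SOURCES or service_definition not in VALID_SOURCES:
        raise ValueError("sources must be 'inline' or 'remote'")
    # The only combination ruled out above is an inline task definition
    # with a remote service specification.
    return not (task_definition == "inline" and service_definition == "remote")


for task, service in [("remote", "remote"), ("remote", "inline"), ("inline", "remote")]:
    print(task, service, is_supported_combination(task, service))
```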

Can I do traffic shifting with ECS deployments?

Yes. There are two types of ECS Blue/Green deployments in Harness:

- Elastic Load Balancer (Classic, ALB, and NLB) - Using two Target Groups in the ELB, each with its own listener, traffic between the stage and production environments is swapped each time a new service is deployed and verified.

  Both ALB and Network ELB are supported.

- Route 53 DNS - Using an AWS Service Discovery namespace containing two service discovery services, and a Route 53 zone that hosts CNAME (alias) records for each service, Harness swaps traffic between the two service discovery services. The swap is achieved using Weighted Routing, where Harness assigns each CNAME record a relative weight that corresponds with how much traffic to send to each resource.
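With weighted routing, each record's share of traffic is its weight divided by the sum of all weights for that record set. The sketch below shows that arithmetic; `traffic_share` and the record names are hypothetical.

```python
def traffic_share(weights: dict[str, int]) -> dict[str, float]:
    """Return each record's fraction of traffic under weighted routing."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one record needs a non-zero weight")
    return {name: weight / total for name, weight in weights.items()}


# Before the swap, all traffic goes to the current production service.
print(traffic_share({"service-blue": 100, "service-green": 0}))
# After the swap, the weights are reversed.
print(traffic_share({"service-blue": 0, "service-green": 100}))
```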

What ECS networking strategies can I use?

Harness supports the following ECS networking features:

- awsvpc Network Mode.

- Service Discovery.

See ECS Services.

Does Harness support ECS auto scaling?

Yes. Auto Scaling adjusts the ECS desired count up or down in response to CloudWatch alarms.

See AWS Auto Scaling with ECS.

Can I use ECS definitions in my repo?

Yes. You can use your Git repo for task and/or service definition JSON files. At deployment runtime, Harness will pull these files and use them to create your containers and services.

This remote definition support enables you to leverage the build tooling and scripts you use currently for updating the definitions in your repos.

See Use Remote ECS Task and Service Definitions in Git Repos.

Can I deploy sidecar containers?

Yes. You can deploy sidecar containers using a single Harness ECS Service and Workflow.

In the Harness Service for ECS, in addition to the spec for the Main Container used by Harness, you simply add container specs for however many sidecar containers you need.

See Deploy Multiple ECS Sidecar Containers.

Can I run an ECS task?

Yes. In addition to deploying tasks as part of your standard ECS deployment, you can use the ECS Run Task step to run individual tasks separately as a step in your ECS Workflow.

The ECS Run Task step is available in all ECS Workflow types.

See Run an ECS Task.

AWS CloudFormation

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

Does Harness support AWS CloudFormation?

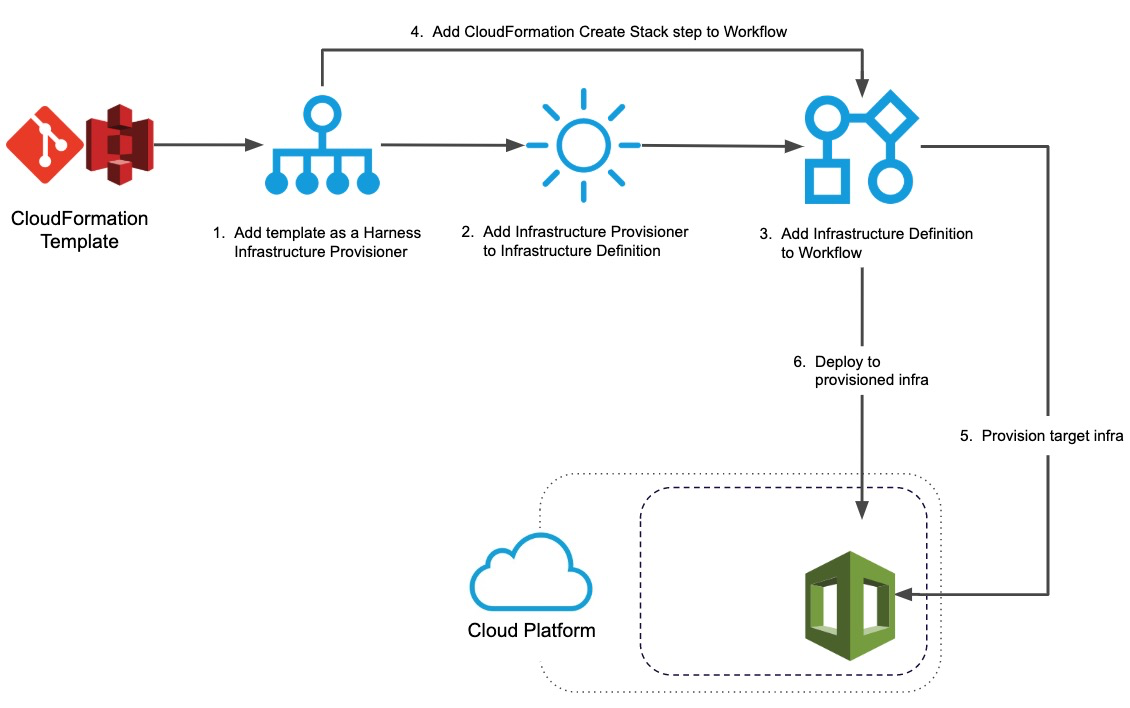

Yes. Harness has first-class support for AWS CloudFormation as an infrastructure provisioner.

You can use CloudFormation in your Workflows to provision the target infrastructure for your deployment on the fly.

Here is an overview of the process:

See CloudFormation Provisioning with Harness.


Do I need to deploy an application to use CloudFormation?

No. You do not need to deploy artifacts via Harness Services to use CloudFormation provisioning in a Workflow.

You can simply set up a CloudFormation Provisioner and use it in a Workflow to provision infrastructure without deploying any artifact. In Harness documentation, we include artifact deployment as it is the ultimate goal of Continuous Delivery.

Are Harness Service Instances counted with CloudFormation provisioning?

No. Harness Service Instances (SIs) are not consumed and no additional licensing is required when a Harness Workflow uses CloudFormation to provision resources.

When Harness deploys artifacts via Harness Services to the provisioned infrastructure in the same Workflow or Pipeline, SI licensing is consumed.

See the Pricing FAQ at Harness Pricing.

Does Harness support AWS Serverless Application Model (SAM) templates?

No. Harness CloudFormation integration does not support AWS Serverless Application Model (SAM) templates. It supports only standard AWS CloudFormation templates.

What deployment strategies can I use CloudFormation with?

For most deployments, Harness Infrastructure Provisioners are only supported in Canary and Multi-Service types. For AMI/ASG and ECS deployments, Infrastructure Provisioners are also supported in Blue/Green deployments.

AWS Lambda

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Lambda deployment?

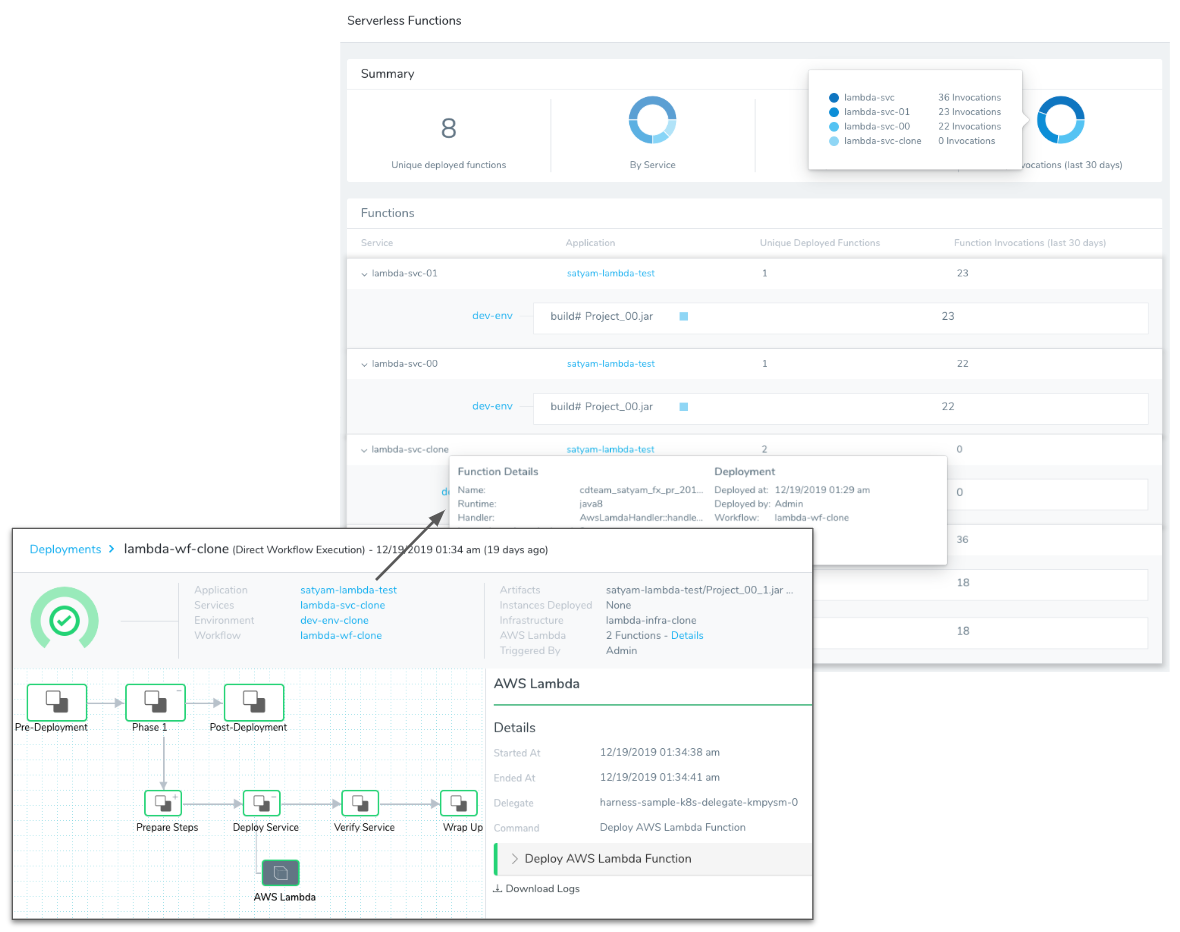

Harness has first-class support for AWS Lambda deployments, enabling you to deploy your functions without having to worry about compute constraints or complexity.

Setting up a Lambda deployment is as simple as adding your function zip file, configuring function compute settings, and adding aliases and tags. Harness takes care of the rest of the deployment, making it consistent, reusable, and safe with automatic rollback.

See AWS Lambda Quickstart and AWS Lambda Deployments Overview.

How many Service Instances (SIs) would 1 Lambda function consume in Harness?

1 service instance equals 5 serverless functions. There's no limit on the calls made using those functions.

What artifact servers are supported for Lambda deployments?

Harness supports the following artifact sources with Lambda:

Can I monitor my Lambda function deployments?

Yes. You can view your Lambda function deployments in the Serverless Functions Dashboard.

See View Lambda Deployments in the Serverless Functions Dashboard.


Azure

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What Azure deployments does Harness support?

Harness supports the following Azure service deployments:

- Azure Virtual Machine Scale Set (VMSS). See Azure Virtual Machine Scale Set Deployments Overview.

- Azure Container Registry to Azure Kubernetes Service. See Azure ACR to AKS Deployments Overview and Azure Kubernetes Service (AKS) Deployments Overview. This includes Harness Native Helm and Kubernetes deployments.

- Secure Shell (SSH). Deploy application package files and a runtime environment to Azure VMs. See Traditional Deployments (SSH) Overview.

- IIS (.NET) via Windows Remote Management (WinRM). See IIS (.NET) Deployment Overview.

What does an Azure virtual machine scale set (VMSS) deployment involve?

To deploy an Azure virtual machine scale set (VMSS) using Harness, you only need to provide two things: an instance image and a base VMSS template.

Harness creates a new VMSS from the base VMSS template and adds instances using the instance image you provided.

See Azure Virtual Machine Scale Set Deployments Overview.

What operating systems are supported for VMSS?

Linux and Windows VMSS deployments are supported.

What deployment strategies can I use with VMSS?

Harness supports basic, canary, and blue/green.

See: Create an Azure VMSS Basic Deployment, Create an Azure VMSS Canary Deployment, and Create an Azure VMSS Blue/Green Deployment.

What does an Azure ACR to AKS deployment involve?

This deployment involves deploying a Docker image from Azure ACR to Azure AKS using Harness. Basically, the Harness deployment does the following:

- Docker Image - Pull Docker image from Azure ACR.

- Kubernetes Cluster - Deploy the Docker image to a Kubernetes cluster in Azure AKS in a Kubernetes Rolling Deployment.

See Azure ACR to AKS Deployments Overview.

CI/CD: Artifact Build and Deploy Pipelines

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Artifact Build and Deploy deployment?

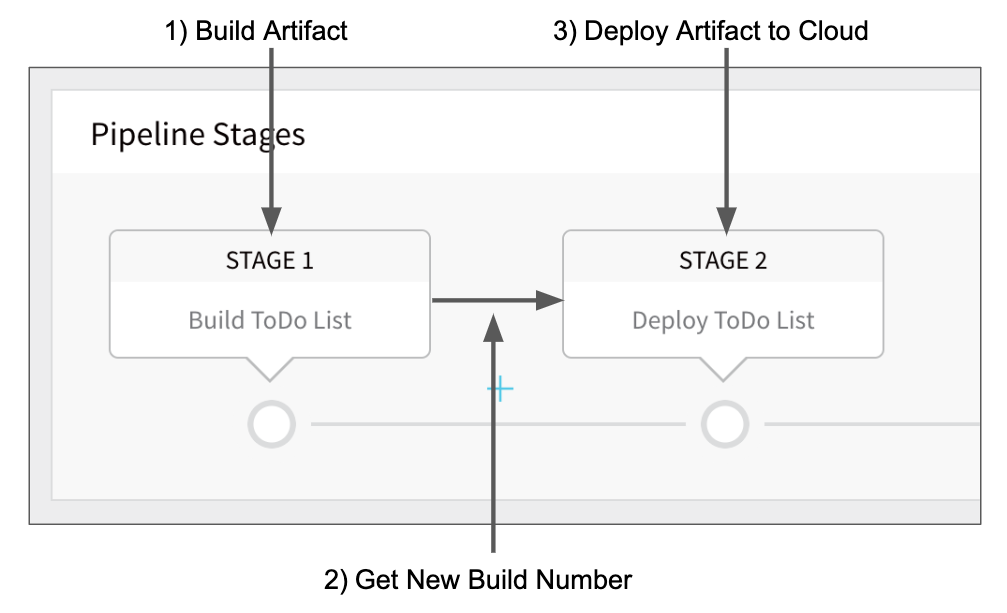

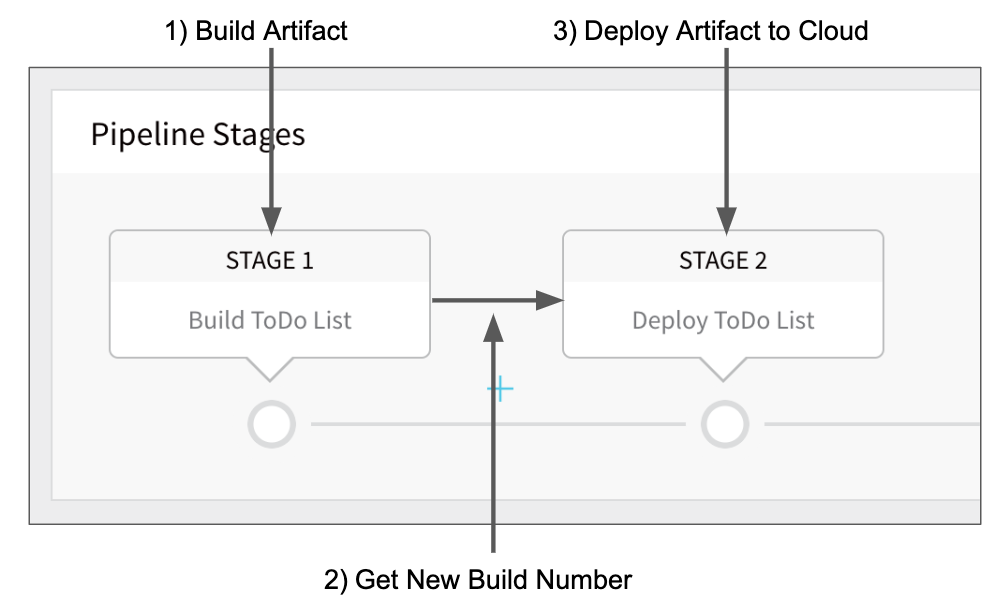

Artifact Build and Deploy Pipelines involve two Workflows, Artifact Build and Deploy, executed in succession by a Pipeline.

- Build Workflow - The Build Workflow connects to your Artifact Server and runs a build, such as a Jenkins job that creates a WAR file or an AMI and deposits it in a repo. Next, the Workflow connects to the repo and pulls the built artifact/metadata into Harness. Now the new artifact is awaiting deployment.

- Deploy Workflow - The Deploy Workflow obtains the new artifact from Harness and deploys it to the target deployment environment.

- Pipeline - The Pipeline runs the Build Workflow followed by the Deploy Workflow, and the latest artifact is deployed.

See Artifact Build and Deploy Pipelines Overview.


What can I deploy using Artifact Build and Deploy?

You can build and deploy any application, such as a file-based artifact like a WAR file or an AWS AMI/ASG.

For an AMI Artifact Build and Deploy Pipeline, the only difference from the File-based Example is that the Harness Service is an AMI type and the Deploy Workflow deploys the AMI instances in an Auto Scaling Group.

How do I create the build Workflow?

A Build Workflow doesn't require a deployment environment, unlike other Harness Workflows. It simply runs a build process via Jenkins, Bamboo, or Shell Script, and then saves the artifact to an explicit path.

See Create the Build Workflow for Build and Deploy Pipelines.

How do I create the deploy Workflow?

The Deploy Workflow takes the artifact you built in the Build Workflow by using the Service you created for the Artifact Source.

Then the Deploy Workflow installs the build into the nodes in the Environment.

See Create the Deploy Workflow for Build and Deploy Pipelines.

How do I build the Artifact Build and Deploy Pipeline?

An Artifact Build and Deploy Pipeline simply runs your Build Workflow followed by your Deploy Workflow. The Deploy Workflow uses the Harness Service you set up to get the new build number.

See Create the Build and Deploy Pipeline.


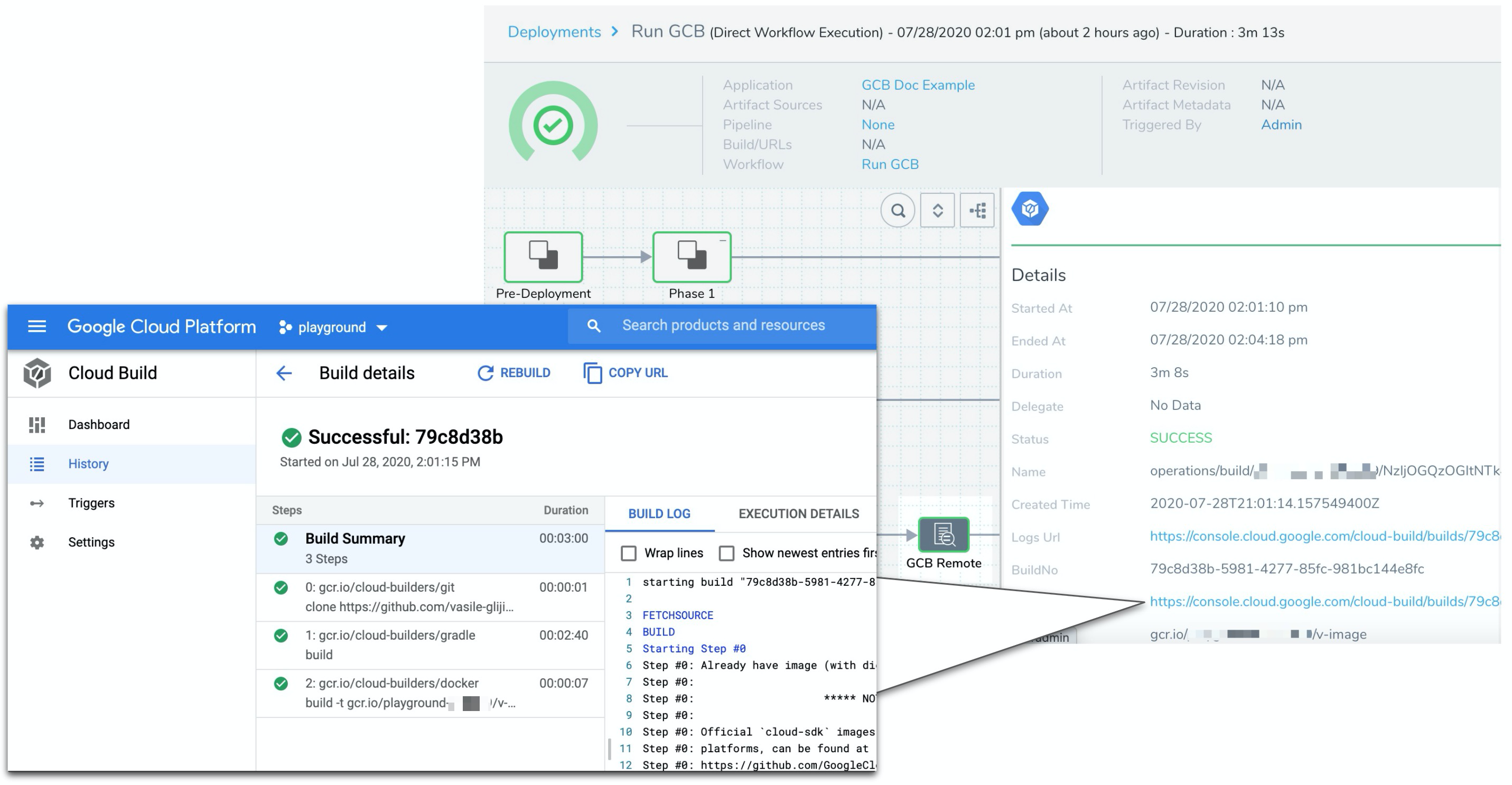

Google Cloud Builds

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Google Cloud Build deployment?

Google Cloud Build (GCB) can import source code from a variety of repositories or cloud storage spaces, execute a build to your specifications, and produce artifacts such as Docker containers or Java archives.

Harness GCB integration lets you do the following:

- Run GCB builds as part of your Harness Workflow.

- Run GCB builds using config files inline or in remote Git repos.

- Execute GCB Triggers, including substituting specific variables at build time.

Can I pull the GCB spec from my repo?

Yes. You can specify the repo where your build config file and its related files are located.

Can I use an existing GCB trigger?

Yes. If you have created a Cloud Build trigger for your Cloud Build you can execute it in your Workflow.

See Execute Existing GCB Trigger.

Can I use GCB substitutions?

Yes. GCB lets you use substitutions for specific variables at build time. You can also do this in the Harness Google Cloud Build step.

What formats are supported for config files?

Harness only supports the use of JSON in inline and remote build config files. If you use a GCB trigger in the Google Cloud Build step, the config file it uses can be either YAML or JSON.

Native Helm

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Native Helm deployment?

Harness includes both Kubernetes and Native Helm deployments, and you can use Helm charts in both. Here's the difference:

- Harness Kubernetes Deployments allow you to use your own Kubernetes manifests or a Helm chart (remote or local), and Harness executes the Kubernetes API calls to build everything without Helm and Tiller needing to be installed in the target cluster.

  Harness Kubernetes deployments also support all deployment strategies (Canary, Blue/Green, Rolling, etc.).

- For Harness Native Helm Deployments, you must always have Helm and Tiller (for Helm v2) running on one pod in your target cluster. Tiller makes the API calls to Kubernetes in these cases. You can perform a Basic or Rolling deployment strategy only (no Canary or Blue/Green). For Harness Native Helm v3 deployments, you no longer need Tiller, but you are still limited to Basic or Rolling deployments.

- Versioning: Harness Kubernetes deployments version all objects, such as ConfigMaps and Secrets. Native Helm does not.

- Rollback: Harness Kubernetes deployments roll back to the last successful version. Native Helm does not. If two consecutive Native Helm deployments fail, the second one rolls back only to the first (failed) deployment, whereas a Harness Kubernetes deployment rolls back to the last successful version.
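The rollback difference can be shown with a short sketch. It models a deployment history as a list of (version, succeeded) pairs, oldest first, with the last entry being the deployment that just failed. Both functions and the version labels are hypothetical and only illustrate the behavior described above.

```python
from typing import Optional

History = list[tuple[str, bool]]  # (version, succeeded), oldest first


def kubernetes_rollback_target(history: History) -> Optional[str]:
    """Harness Kubernetes: roll back to the most recent successful version."""
    for version, succeeded in reversed(history[:-1]):
        if succeeded:
            return version
    return None


def native_helm_rollback_target(history: History) -> Optional[str]:
    """Native Helm: roll back to the immediately preceding release, good or bad."""
    return history[-2][0] if len(history) >= 2 else None


# v1 succeeded, v2 failed, and v3 has just failed.
history = [("v1", True), ("v2", False), ("v3", False)]
print(kubernetes_rollback_target(history))
print(native_helm_rollback_target(history))
```

With two bad deployments in a row, the first function returns the last good version while the second returns the earlier failed one.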

See Native Helm Deployments Overview.

For a quick tutorial on using Helm with a Harness Kubernetes deployment, see the Helm Quickstart.

What Helm versions does Harness support?

Harness supports Helm v2 and v3.

See Harness Continuous Delivery Supports Helm V3.

Can I upgrade my Native Helm deployment from Helm 2 to Helm 3?

Yes. When you create your native Helm deployments in Harness, you can choose to use Helm 2 or Helm 3.

If you have already created native Helm 2 deployments, you can upgrade your deployments to Helm 3.

See Upgrade Native Helm 2 Deployments to Helm 3.

Can I deploy Helm charts without adding an artifact source to Harness?

Yes. Typically, Harness Kubernetes and Native Helm deployments using Helm charts involve adding your artifact (image) to Harness in addition to your chart. The chart refers to the artifact you added to Harness (via its values.yaml). During deployment, Harness deploys the artifact you added to Harness and uses the chart to manage it.

In addition to this method, you can also simply deploy the Helm chart without adding your artifact to Harness. Instead, the Helm chart identifies the artifact. Harness installs the chart, gets the artifact from the repo, and then installs the artifact. We call this a Helm chart deployment.

See Deploy Helm Charts.

IIS (.NET)

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

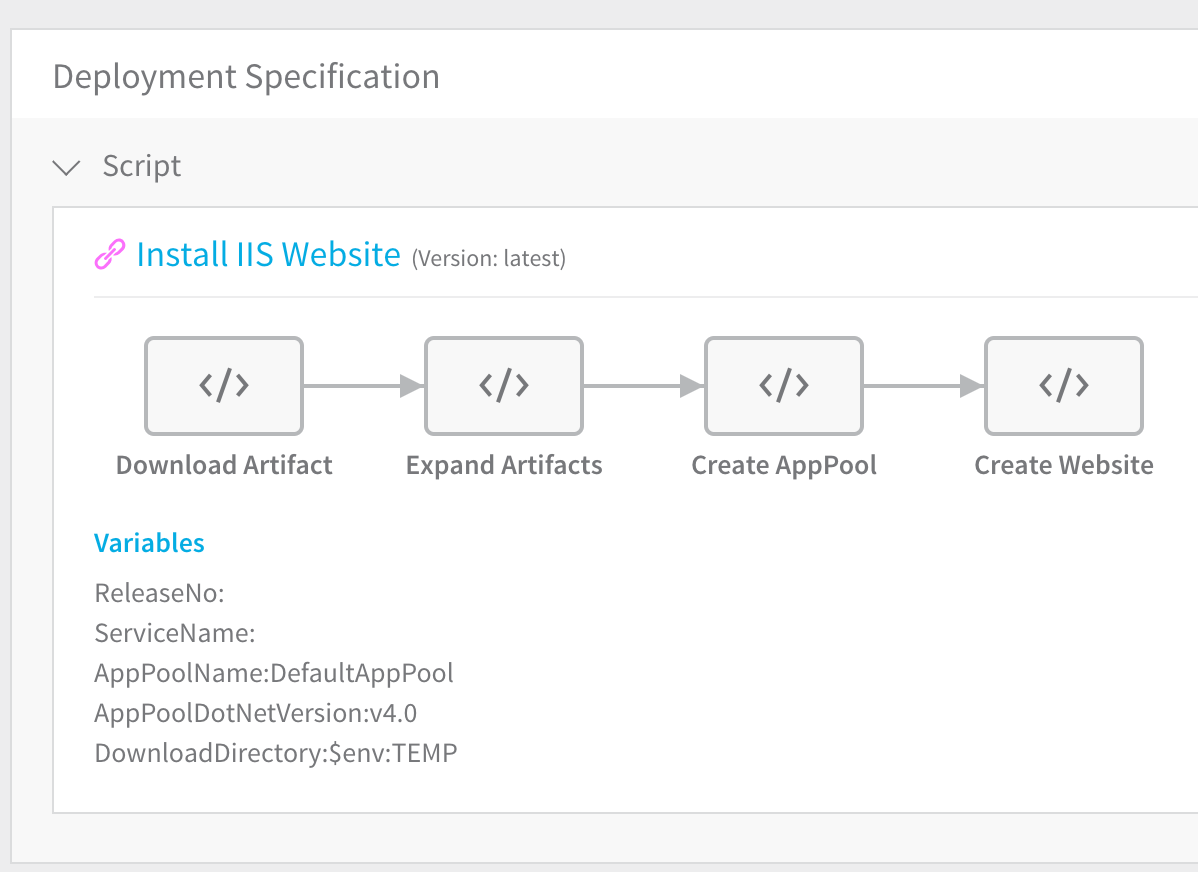

What is a Harness IIS (.NET) deployment?

Harness deploys an IIS Website, Application, and Virtual Directory as separate Harness Services. For all service types, Harness automatically creates the Deployment Specifications, which you can customize.

For example, the Deployment Specification section of the Harness Service is automatically filled with the Install IIS Website template, which pulls the artifact, expands it, creates the Application Pool (AppPool) and creates the website.

Most often, you deploy your Workflows in the following order in a Harness Pipeline:


- IIS Website Workflow.

- IIS Application Workflow.

- IIS Virtual Directory Workflow.

Harness provides PowerShell and WinRM support to execute Workflows and communicate within the Microsoft ecosystem.

See the IIS (.NET) Quickstart and IIS (.NET) Deployments Overview.

How do I set up a WinRM on my target hosts?

WinRM is a management protocol used by Windows to remotely communicate with another server, in our case, the Harness Delegate.

WinRM communicates over HTTP (5985)/HTTPS (5986), and is included in all recent Windows operating systems.

See:

How do I set up a WinRM connection in Harness?

You set up a WinRM connection from Harness to your target hosts using the Harness WinRM Connection settings.

See Add WinRM Connection Credentials.

How do I create a Pipeline that deploys all my IIS components?

Once you have the Harness Services for IIS website, application, and virtual directory, and the Harness Environment for your target infrastructure, you create Harness Workflows to deploy the IIS website, application, and virtual directory Services.

Once you have Workflows for your IIS website, application, and virtual directory set up, you can create a Harness Pipeline that deploys them in the correct order. For IIS, you must deploy them in the order website, application, and then virtual directory.
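The required ordering can be expressed as a simple sort. In this sketch, `DEPLOY_ORDER`, `order_workflows`, and the Workflow names are hypothetical; only the website, application, virtual directory order comes from the text above.

```python
# Required IIS deployment order, as described above.
DEPLOY_ORDER = ["website", "application", "virtual-directory"]


def order_workflows(workflows: dict[str, str]) -> list[str]:
    """Return Workflow names in the order they should appear in the Pipeline."""
    unknown = set(workflows) - set(DEPLOY_ORDER)
    if unknown:
        raise ValueError(f"unknown IIS component types: {sorted(unknown)}")
    return [workflows[kind] for kind in DEPLOY_ORDER if kind in workflows]


workflows = {
    "virtual-directory": "Deploy IIS VDir",
    "website": "Deploy IIS Website",
    "application": "Deploy IIS App",
}
print(order_workflows(workflows))
```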

See IIS Workflows and Pipelines.

Kubernetes

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Kubernetes deployment?

Harness takes the artifacts and Kubernetes manifests you provide and deploys them to the target Kubernetes cluster. You can simply deploy Kubernetes objects via manifests and you can provide manifests using remote sources and Helm charts.

See the Kubernetes Quickstart and Kubernetes Deployments Overview.

For detailed instructions on using Kubernetes in Harness, see the Kubernetes How-tos.

What workloads can Harness deploy in a Kubernetes cluster?

See What Can I Deploy in Kubernetes?.

For Kubernetes, does Harness count only pods as a service instance (SI)? What about a ConfigMap or a Secret?

Only pods consume SI licenses. ConfigMaps and Secrets do not.

See the Pricing FAQ at Harness Pricing.

How is Harness sure that a pod is a service instance?

Harness tracks the workloads it deploys. Harness checks every 10 minutes using the Kubernetes API to see how many pods are currently running for the given workload.

If I create a pod using Harness and keep managing it, is it still counted as a service instance?

Yes. Sidecar containers within pods (log proxy, service mesh proxy) do not consume SI licenses. Harness licenses by pod, not the number of containers in a pod.

See the Pricing FAQ at Harness Pricing.

Does Harness support OpenShift?

Yes. Harness supports DeploymentConfig, Route, and ImageStream across Canary, Blue Green, and Rolling deployment strategies. Please use apiVersion: apps.openshift.io/v1 and not apiVersion: v1.

You can leverage Kubernetes list objects as needed without modifying your YAML for Harness.

When you deploy, Harness will render the lists and show all the templated and rendered values in the log.

Harness supports:

- List

- NamespaceList

- ServiceList

- For Kubernetes deployments, these objects are supported for all deployment strategies (Canary, Rolling, Blue/Green).

- For Native Helm, these objects are supported for Basic deployments.

If you run kubectl api-resources you should see a list of resources, and kubectl explain will work with any of these.

See Using OpenShift with Harness Kubernetes.

Can I use Helm?

Yes. Harness Kubernetes deployments support Helm v2 and v3.

Harness supports Helm charts in both its Kubernetes and Native Helm implementations. There are important differences:

- Harness Kubernetes Deployments allow you to use your own Kubernetes manifests or a Helm chart (remote or local), and Harness executes the Kubernetes API calls to build everything without Helm and Tiller needing to be installed in the target cluster.

- Harness Kubernetes deployments also support all deployment strategies (Canary, Blue/Green, Rolling, etc).

- For Harness Native Helm Deployments, you must always have Helm and Tiller running on one pod in your target cluster. Tiller makes the API calls to Kubernetes in these cases.

- Harness Native Helm deployments only support Basic deployments.

Can I deploy Helm charts without adding an artifact source to Harness?

Yes. Typically, Harness Kubernetes deployments using Helm charts involve adding your artifact (image) to Harness in addition to your chart. The chart refers to the artifact you added to Harness (via its values.yaml). During deployment, Harness deploys the artifact you added to Harness and uses the chart to manage it.

In addition to this method, you can also simply deploy the Helm chart without adding your artifact to Harness. Instead, the Helm chart identifies the artifact. Harness installs the chart, gets the artifact from the repo, and then installs the artifact. We call this a Helm chart deployment.

See Deploy Helm Charts.

Can I use OpenShift?

Yes. Harness supports OpenShift for Kubernetes deployments.

See Using OpenShift with Harness Kubernetes.

Can I run Kubernetes jobs?

Yes. In Harness Kubernetes deployments, you define Jobs in the Harness Service Manifests. Next you add the Apply step to your Harness Workflow to execute the Job.
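For illustration, a Job defined in the Service Manifests could resemble the standard Kubernetes Job below. The Job name, image, and command are assumptions made for this sketch; how the Apply step selects the file is covered in the linked topic.

```yaml
# Sketch of a one-off Kubernetes Job; names and command are hypothetical.
apiVersion: batch/v1
kind: Job
metadata:
  name: example-migration
spec:
  backoffLimit: 2                  # retry a failed pod at most twice
  template:
    spec:
      restartPolicy: Never         # Jobs require Never or OnFailure
      containers:
        - name: migrate
          image: busybox:1.36
          command: ["sh", "-c", "echo running one-off task"]
```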

See Run Kubernetes Jobs.

Can I deploy Kubernetes resources using CRDs?

Yes. Harness supports all Kubernetes default resources, such as Pods, Deployments, StatefulSets, DaemonSets, etc. For these resources, Harness supports steady state checking, versioning, displays instances on Harness dashboards, performs rollback, and other enterprise features.

In addition, Harness provides many of the same features for Kubernetes custom resource deployments using Custom Resource Definitions (CRDs). CRDs are resources you create that extend the Kubernetes API to support your application.
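To make the CRD concept concrete, here is a minimal sketch of a definition and one custom resource that uses it. The group example.com and the kind Widget are invented for this example; any Harness-specific annotations needed for steady state checks are not shown and should be taken from the linked topic.

```yaml
# Sketch: a CRD that adds a hypothetical "Widget" kind to the Kubernetes API.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: widgets.example.com        # must be <plural>.<group>
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: widgets
    singular: widget
    kind: Widget
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                size:
                  type: integer
---
# A custom resource of the kind defined above.
apiVersion: example.com/v1
kind: Widget
metadata:
  name: example-widget
spec:
  size: 3
```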

Harness supports CRDs for both Kubernetes and OpenShift. There is no difference in their custom resource implementation.

See Deploy Kubernetes Custom Resources using CRDs.

Can I deploy resources outside of the main Kubernetes workload?

Yes. By default, the Harness Kubernetes Workflow will deploy all of the resources you have set up in the Service Manifests section.

In some cases, you might have resources that you do not want to deploy as part of the main Workflow deployment, but want to apply as another step in the Workflow. For example, you might want to deploy an additional resource only after Harness has verified the deployment of the main resources in the Service Manifests section.

Workflows include an Apply step that allows you to deploy any resource you have set up in the Service Manifests section.

See Deploy Manifests Separately using Apply Step.

Can I ignore a manifest during deployment?

You might have manifest files for resources that you do not want to deploy as part of the main deployment.

Instead, you tell Harness to ignore these files and then apply them separately using the Harness Apply step.

Or you can simply ignore them until you wish to deploy them as part of the main deployment.
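As a sketch of what an ignored file might look like: Harness documentation for this feature describes a skip comment placed on the first line of the manifest. The exact marker shown here (# harness.io/skip-file) and the ConfigMap contents are assumptions for this example; confirm the marker in the linked topic.

```yaml
# harness.io/skip-file
# The first-line comment above is assumed to be the marker that tells
# Harness to skip this file in the main deployment.
apiVersion: v1
kind: ConfigMap
metadata:
  name: example-post-deploy-config   # hypothetical name
data:
  mode: "post-verify"
```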

See Ignore a Manifest File During Deployment.

Can I pull an image from a private registry?

Typically, if the Docker artifact source is in a private registry, Harness accesses that registry using the credentials set up in the Harness Artifact Server.

In some cases, your Kubernetes cluster might not have the permissions needed to access a private Docker registry. For these cases, the default values.yaml file in the Service Manifests section contains dockercfg: ${artifact.source.dockerconfig}. This key imports the credentials from the Docker credentials file in the artifact.
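A rough sketch of how the dockercfg key could be consumed: the values file carries the expression, and a manifest template turns it into an image pull secret. The name value and the secret naming pattern are assumptions for this example, and the fragment mixes YAML with Go template directives, as Harness manifests do.

```yaml
# values.yaml (relevant keys only; "name" is a placeholder)
name: example-app
dockercfg: ${artifact.source.dockerconfig}
---
# Manifest template: create a pull secret only when dockercfg is set.
{{- if .Values.dockercfg}}
apiVersion: v1
kind: Secret
metadata:
  name: {{.Values.name}}-dockercfg
data:
  .dockercfg: {{.Values.dockercfg}}
type: kubernetes.io/dockercfg
{{- end}}
```

The pod spec of the workload would then list the same secret name under imagePullSecrets so the cluster can pull the image.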

See Pull an Image from a Private Registry for Kubernetes.

Can I use remote sources for my manifests?

You can use your Git repo for the configuration files in Manifests and Harness uses them at runtime. You have the following options for remote files:

- Kubernetes Specs in YAML format - These files are simply the YAML manifest files stored on a remote Git repo. See Link Resource Files or Helm Charts in Git Repos.

- Helm Chart from Helm Repository - Helm chart files stored in standard Helm syntax in YAML on a remote Helm repo. See Use a Helm Repository with Kubernetes.

- Helm Chart Source Repository - These are Helm chart files stored in standard Helm syntax in YAML on a remote Git repo or Helm repo. See Link Resource Files or Helm Charts in Git Repos.

- Kustomization Configuration - kustomization.yaml files stored on a remote Git repo. See Use Kustomize for Kubernetes Deployments.

- OpenShift Template - OpenShift params file from a Git repo. See Using OpenShift with Harness Kubernetes.

Remote files can also use Go templating.

Do you support Go templating for Kubernetes manifests?

Yes. You can use Go templating and Harness built-in variables in combination in your Manifests files.
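The combination might look like the sketch below: a values file that holds a Harness expression, and a manifest that reads it through Go templating. The name example-app and the built-in expression ${artifact.metadata.image} are assumptions for this example; check the linked topic for the expressions available in your setup.

```yaml
# values.yaml (sketch)
name: example-app
replicas: 2
image: ${artifact.metadata.image}    # Harness expression, resolved at deploy time
---
# Manifest template that reads the values above via Go templating.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{.Values.name}}-deployment
spec:
  replicas: {{int .Values.replicas}}
  selector:
    matchLabels:
      app: {{.Values.name}}
  template:
    metadata:
      labels:
        app: {{.Values.name}}
    spec:
      containers:
        - name: {{.Values.name}}
          image: {{.Values.image}}
```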

See Use Go Templating in Kubernetes Manifests.

Can I provision Kubernetes infrastructure?

Yes, you can use Terraform. You can provision the target Kubernetes infrastructure as part of a pre-deployment step in your Workflow. When the Workflow runs, it builds your Kubernetes infrastructure first, and then deploys to the new infrastructure.

See Provision Kubernetes Infrastructures.

What deployment strategies can I use with Kubernetes?

You can use canary, rolling, and blue/green. See:

- Create a Kubernetes Canary Deployment

- Create a Kubernetes Rolling Deployment

- Create a Kubernetes Blue/Green Deployment

Can I create namespaces during deployment?

Yes. You can create namespaces on the fly using different methods:

- Create Kubernetes Namespaces based on InfraMapping

- Create Kubernetes Namespaces with Workflow Variables
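As a sketch of the first method, a values file can take the namespace from the infrastructure settings and a templated manifest can create it when requested. The createNamespace flag and the ${infra.kubernetes.namespace} expression are assumptions here, based on the pattern of the default Harness manifests.

```yaml
# values.yaml (sketch)
createNamespace: true
namespace: ${infra.kubernetes.namespace}
---
# Manifest template: emit a Namespace object only when the flag is set.
{{- if .Values.createNamespace}}
apiVersion: v1
kind: Namespace
metadata:
  name: {{.Values.namespace}}
{{- end}}
```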

Do you support Kustomize?

Yes. Harness supports Kustomize kustomizations in your Kubernetes deployments. You can use overlays, multibase, plugins, sealed secrets, etc, just as you would in any native kustomization.
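For readers new to Kustomize, a minimal overlay kustomization.yaml could look like this. The directory layout, name prefix, and image name are hypothetical; nothing here is specific to Harness.

```yaml
# overlays/staging/kustomization.yaml (sketch)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base                 # reuse the shared base manifests
namePrefix: staging-           # prefix every resource name in this overlay
images:
  - name: example/app          # image name as written in the base manifests
    newTag: "1.2.3"            # tag to deploy from this overlay
```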

See Use Kustomize for Kubernetes Deployments.

Can I use Ingress traffic routing?

Yes. You can route traffic using the Ingress rules defined in your Harness Kubernetes Service.
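An Ingress rule of the kind referred to above is an ordinary Kubernetes object. The following sketch uses a hypothetical host and Service name; in a Harness Service it would sit alongside the other manifests.

```yaml
# Sketch of a Kubernetes Ingress; host and backend names are placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-app
spec:
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-app
                port:
                  number: 80
```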

See Set up Kubernetes Ingress Rules.

Can I perform traffic splitting with Istio?

Yes. Harness supports Istio 1.2 and above.
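Traffic splitting in Istio is expressed as weighted routes in a VirtualService. The sketch below sends 80% of requests to one subset and 20% to another; the host and subset names are invented for illustration, and the subsets assume a matching DestinationRule that is not shown. How Harness manages these weights during a deployment is described in the linked topic.

```yaml
# Sketch of an Istio VirtualService with an 80/20 split; names are hypothetical.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: example-app
spec:
  hosts:
    - example-app
  http:
    - route:
        - destination:
            host: example-app
            subset: stable
          weight: 80
        - destination:
            host: example-app
            subset: canary
          weight: 20
```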

See Set Up Kubernetes Traffic Splitting.

Can I perform traffic splitting without Istio?

Yes. You can progressively increase traffic to new application versions using Ingress resources, Harness annotations, and the Apply step.

See Traffic Splitting Without Istio.

Can I scale my pods up and down?

Yes. When you deploy a Kubernetes workload using Harness, you set the number of pods you want in your manifests and in the deployment steps.

With the Scale step, you can scale this number of running pods up or down, by count or percentage.

How do I delete Kubernetes resources?

Harness includes a Delete step to remove any deployed Kubernetes resources.

See Delete Kubernetes Resources.

Can I use Helm 3 with Kubernetes?

Yes. You can use Helm 3 charts in both Kubernetes and Native Helm Services.

You can select Helm 3 when you create the Service, or upgrade Helm 2 to Helm 3.

See Upgrade to Helm 3 Charts in Kubernetes Services.

Can I use Helm Chart Hooks in Kubernetes Deployments?

Yes. You can use Helm chart hooks in your Kubernetes deployments to intervene at specific points in a release cycle.

See Use Helm Chart Hooks in Kubernetes Deployments.

Tanzu Application Service (TAS)

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness TAS deployment?

Harness supports the TAS (formerly Pivotal Cloud Foundry) app development and deployment platform for public and private clouds, including route mapping.

Harness takes the artifacts and TAS specs you provide and deploys them to the target TAS Organization and Space.

See Tanzu Application Service (TAS) Quickstart and Tanzu Application Service Deployment Overview.

Can I use TAS plugins?

You can use CLI plugins in your deployments. The App Autoscaler plugin has first-class support in Harness, enabling you to ensure app performance and control the cost of running apps. See Use CLI Plugins in Harness Tanzu Deployments.

Can I use remote resource files?

Yes. You can upload local and remote Manifest and Variable files.

See Upload Local and Remote Tanzu Resource Files.

What deployment strategies can I use with TAS?

Basic, canary, and blue/green are all supported.

Can I run CF CLI commands and scripts during deployment?

Yes. You can run any CF CLI command or script at any point in your Harness TAS Workflows.

See Run CF CLI Commands and Scripts in a Workflow.

What CF CLI version does Harness support?

Harness supports Cloud Foundry CLI version 6 only. Support for version 7 is pending.

Can I use the App Autoscaler Service?

Yes. The App Autoscaler plugin has first-class support in Harness, enabling you to ensure app performance and control the cost of running apps.

Harness supports App Autoscaler Plugin release 2.0.233.

See Use the App Autoscaler Service.

Terraform

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

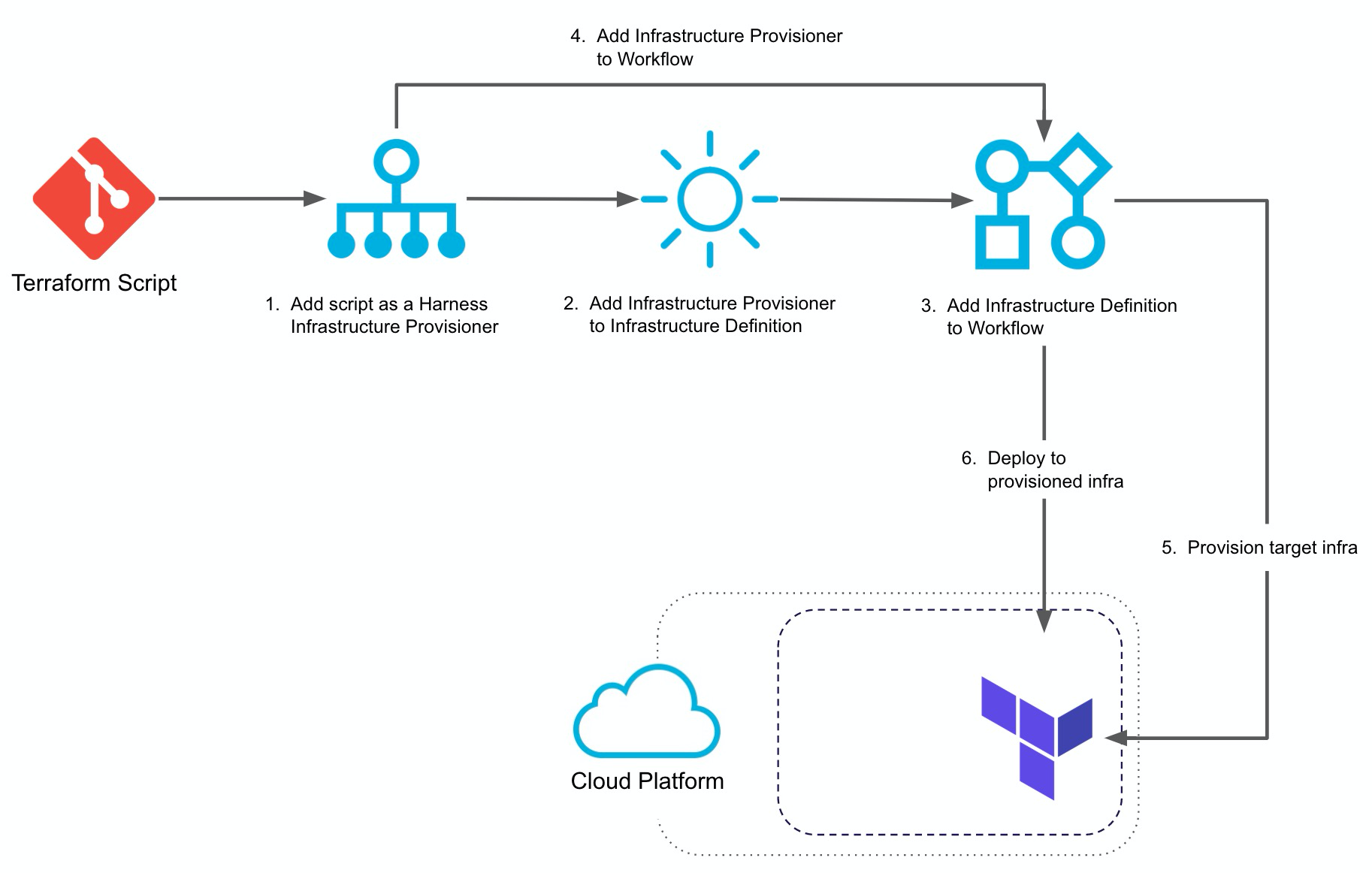

How does Harness support Terraform?

Harness lets you use Terraform to provision infrastructure as part of your deployment process. Harness can provision any resource that is supported by a Terraform provider or plugin.

You can use Terraform in your Workflows to provision the target infrastructure for your deployment on the fly.

Here is an overview of the process:

See Terraform Provisioning with Harness and Terraform How-tos.

Do I need to deploy an application to use Terraform?

You do not need to deploy artifacts via Harness Services to use Terraform provisioning in a Workflow. You can simply set up a Terraform Provisioner and use it in a Workflow to provision infrastructure without deploying any artifact. In Harness documentation, we include artifact deployment as it is the ultimate goal of Continuous Delivery.

See Using the Terraform Apply Command.

Are Harness Service Instances counted with Terraform provisioning?

Harness Service Instances (SIs) are not consumed and no additional licensing is required when a Harness Workflow uses Terraform to provision resources. SI licenses are consumed only when Harness deploys artifacts via Harness Services to the provisioned infrastructure in the same Workflow or Pipeline.

See the Pricing FAQ at Harness Pricing.

What deployment strategies can I use Terraform with?

Terraform Infrastructure Provisioners are only supported in Canary and Multi-Service deployment types. For AMI/ASG and ECS deployments, Terraform Infrastructure Provisioners are also supported in Blue/Green deployments.

The Terraform Provision and Terraform Rollback commands are available in the Pre-deployment section and the Terraform Destroy command is available in the Post-deployment section.

Can I perform a Terraform dry run?

Yes. The Terraform Provision and Terraform Apply steps in a Workflow can be executed as a dry run, just like running the terraform plan command.

The dry run will refresh the state file and generate a plan, but not apply the plan. You can then set up an Approval step to follow the dry run, followed by the Terraform Provision or Terraform Apply step to apply the plan.

See Perform a Terraform Dry Run.

Can I remove resources provisioned with Terraform?

Yes. You can add a Terraform Destroy Workflow step to remove any provisioned infrastructure, just like running the terraform destroy command. See destroy from Terraform.

See Remove Provisioned Infra with Terraform Destroy.

Traditional (SSH)

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Traditional (SSH) deployment?

Traditional deployments involve obtaining an application package from an artifact source, such as a WAR file in an AWS S3 bucket, and deploying it to a target host, such as an AWS AMI.

You can perform traditional deployments to AWS and Azure, and to any server on any platform via a platform agnostic Physical Data Center connection. In all cases, you simply set up a Harness Infrastructure Definition and target the hosts on the platform.

See Traditional Deployments (SSH) Overview.

For a Build and Deploy Pipeline using a Traditional deployment, see Artifact Build and Deploy Pipelines Overview.

Can I target specific hosts during deployment?

Yes. You can choose to deploy to specific hosts when you start or rerun a Workflow whose Service uses the Secure Shell (SSH) Deployment Type.

See Target Specific Hosts During Deployment.

Can I add Application Stacks?

Yes. You can upload and select the app stack you want to use as a runtime environment for your application, such as Tomcat.

See Add Application Stacks and Add Artifacts and App Stacks for Traditional (SSH) Deployments.

Can I add scripts to my Traditional (SSH) deployment?

Yes. When you create the Harness Secure Shell (SSH) Service, Harness automatically generates the commands and scripts needed to install the app and stack on the target host, copy the file(s) to the correct folder, and start the app.

You can add more scripts to the Service.

See Add Scripts for Traditional (SSH) Deployments.

What types of deployment strategies can I use with Traditional (SSH) deployment?

Harness supports the following strategies:

- Basic

- Canary

- Rolling

See Create a Basic Workflow for Traditional (SSH) Deployments.

Configure as Code

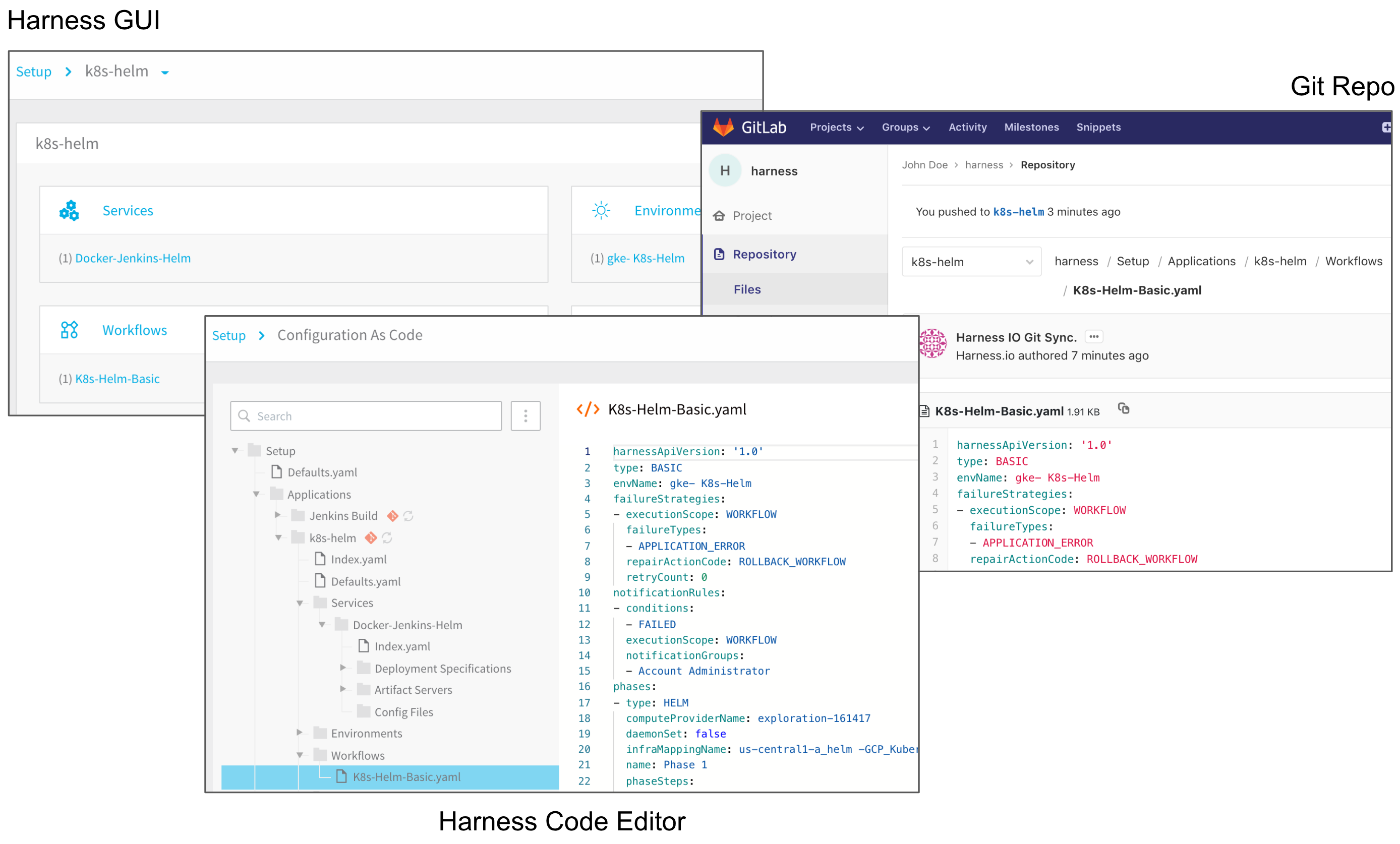

Can I create my deployments using YAML?

Yes. Harness Configuration As Code allows you to configure settings like Pipelines, Triggers, Workflows, Environments, and Services in Harness using YAML. Nearly everything you can do in the Harness platform GUI, you can do in YAML as well.

See Configuration as Code Overview and Harness YAML Code Reference.

Can I sync my Harness account or application with my repo?

Yes, both.

You can sync your Harness account with a Git repo. The Harness account information can be synced with one repo and the Applications in your account can be synced separately with other repos or branches.

You can sync any of your Harness Applications with your Git repo. Harness Applications are set up and managed with Git separately from the Account-level sync.

See:

- Harness Account-Level Git Sync

- Harness Application-Level Git Sync

- Delink Git Sync

- Edit Harness Components as YAML

Can I view Git sync activity?

Yes. Once you have synced your Harness account or Applications with your Git repo(s), you can view bi-directional activity between Harness and your repos using Git Sync Activity.

Git Sync Activity provides you with a granular, commit-by-commit audit of every change and ensures that you are fully aware of all Git activity with your Harness account and Applications.

See View Harness Git Sync Activity.

Can Harness troubleshoot Git sync errors?

Yes. Harness helps you diagnose errors you might encounter during the Git sync process, for example when you sync a Harness Application or account with a Git repo, or when you sync an Application or Workflow in Git with Harness.

Does Harness support GitOps?

Yes. Harness includes many features to help with GitOps.

You can create a Harness Application template you can sync and clone in Git for onboarding new teams.

Often, teams create an Application template for engineering leads or DevOps engineers. Each team then gets a clone of the Application in Git that they can modify for their purposes.

Development teams can then deploy consistently without using the Harness UI to create their Applications from scratch. They simply change a few lines of YAML via scripts and deploy their application.

See Onboard Teams Using GitOps.

Uncommon Deployment Platforms

Harness provides deployment support for all of the major artifact, approval, provisioner, and cloud platforms.

This section describes how Harness provides features for uncommon, custom platforms.

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Custom Deployment?

Harness provides deployment support for all of the major platforms.

In some cases, you might be using a platform that does not have first class support in Harness. For these situations, Harness provides a custom deployment option.

Custom deployments use shell scripts to connect to target platforms, obtain target host information, and execute deployment steps.

See Create a Custom Deployment.

Does Harness support custom artifact repositories?

Yes. For enterprises that use a custom repository, Harness provides the Custom Artifact Source to add their custom repository to the Service.

See Using Custom Artifact Sources.

Does Harness support custom provisioners?

Yes. Harness has first-class support for Terraform and AWS CloudFormation provisioners, but to support different provisioners, or your existing shell script implementations, Harness includes the Shell Script Infrastructure Provisioner.

Does Harness support custom approvals?

Yes. Harness provides first-class support for the following common approval tools:

You can also add approval steps in Pipelines and Workflows using a custom shell script ticketing system.

See Custom Shell Script Approvals.

Harness Applications

What is a Harness Application?

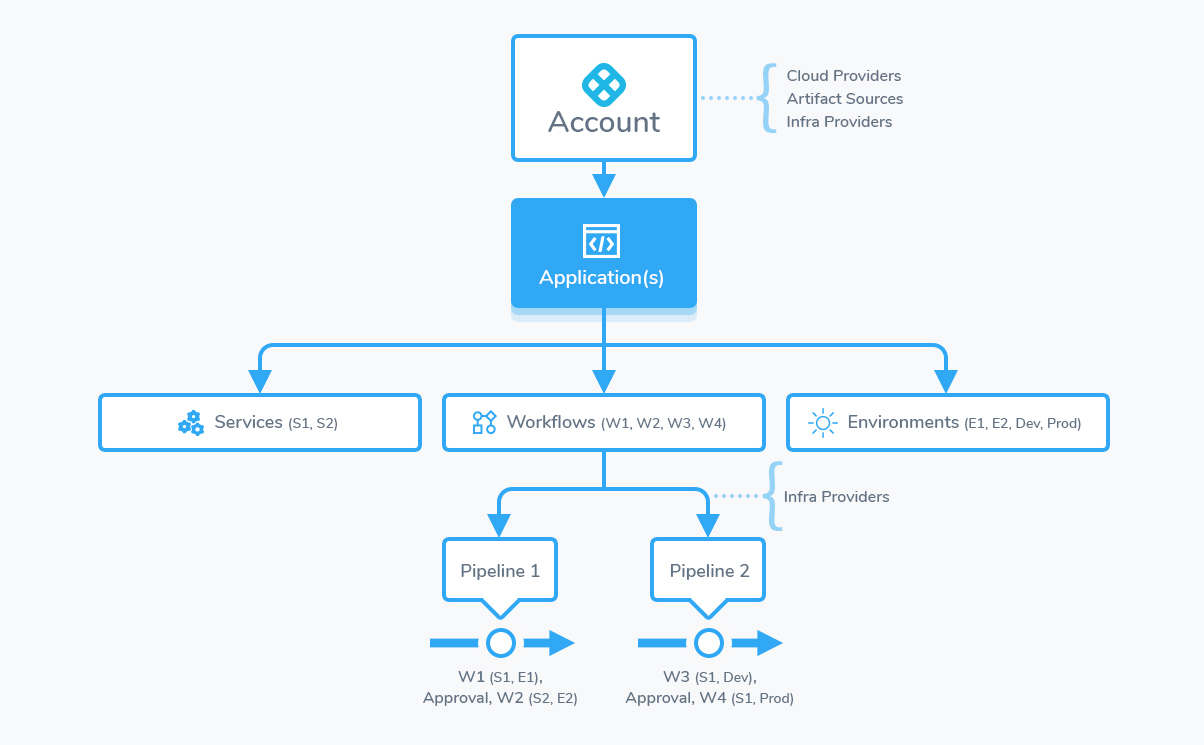

A Harness Application represents a group of microservices, their deployment pipelines, and all the building blocks for those pipelines. Harness represents your microservice using a logical group of one or more entities: Services, Environments, Workflows, Pipelines, Triggers, and Infrastructure Provisioners. Applications organize all of the entities and configurations in Harness CI/CD.

See Create an Application and Create Default Application Directories and Variables.

Harness Services

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Service?

Services represent your microservices/apps. You define where the artifacts for those services come from, and you define the container specs, configuration variables, and files for those services.

See Add Specs and Artifacts using a Harness Service, Add a Docker Artifact Source, and Service Types and Artifact Sources.

Artifacts

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What artifact sources can I use?

Harness supports all of the common repos.

The following table lists the artifact types, such as Docker Image and AMI, and the Artifact Sources' support for metadata.

Legend:

- M - Metadata. This includes Docker image and registry information. For AMI, this means AMI ID-only.

- Blank - Not supported.

| Sources | Docker Image (Kubernetes/Helm) | AWS AMI | AWS CodeDeploy | AWS Lambda | JAR | RPM | TAR | WAR | ZIP | TAS | IIS |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Jenkins |  |  | M | M | M | M | M | M | M | M | M |
| Docker Registry | M |  |  |  |  |  |  |  |  | M |  |
| Amazon S3 |  |  | M | M | M | M | M | M | M | M | M |
| Amazon AMI |  | M |  |  |  |  |  |  |  |  |  |
| Elastic Container Registry (ECR) | M |  |  |  |  |  |  |  |  | M |  |
| Azure Reg | M |  |  |  |  |  |  |  |  |  |  |
| Azure DevOps Artifact |  |  | M |  | M | M | M | M | M | M | M |
| GCS |  |  | M |  |  | M | M | M | M | M | M |
| GCR | M |  |  |  |  |  |  |  |  | M |  |
| Artifactory | M |  | M | M | M | M | M | M | M | M | M |
| Nexus | M |  | M | M | M | M | M | M | M | M | M |
| Bamboo |  |  | M |  | M | M | M | M | M | M | M |
| SMB |  |  | M |  | M | M | M | M | M | M | M |
| SFTP |  |  | M |  | M | M | M | M | M | M | M |

See Service Types and Artifact Sources.

For uncommon repos, see Using Custom Artifact Sources.

Does Harness support Azure DevOps?

Yes. You can add an Azure DevOps Artifact Server as a Harness Connector, and then use that Connector in your Service to add the Azure DevOps organization, project, feed, and package name.

See Add an Azure DevOps Artifact Source.

Harness Environments

What is a Harness Environment?

Environments organize your deployment infrastructures, such as Dev, QA, Stage, Production, etc.

See Add an Environment.

Harness Infrastructure Definitions

The Infrastructure Definition is where you specify the target infrastructure for your deployment. This can be a VM, Kubernetes or ECS cluster, TAS space, etc.

The target infrastructure can be an existing infrastructure or an infrastructure provisioner, such as Terraform or CloudFormation, set up as a Harness Infrastructure Provisioner.

For every deployment you create, you will define an Infrastructure Definition as a target for your deployment.

See Add an Infrastructure Definition.

Harness Workflows

What is a Harness Workflow?

Workflows define the deployment orchestration steps, including how a Service is deployed, verified, rolled back, and more.

Some of the common Workflow types are Canary, Blue/Green, and Rolling. An Application might have different deployment orchestration steps for different Environments, each managed in a Workflow.

See:

- Add a Workflow

- Deploy Individual Workflow

- Verify Workflow

- Add a Workflow Notification Strategy

- Define Workflow Failure Strategy

- Set Workflow Variables

- Use Steps for Different Workflow Tasks

- Add Phases to a Workflow

- Synchronize Workflows in your Pipeline Using Barrier

- Templatize a Workflow

- Clone a Workflow

For a list of all Workflow topics, see Add Workflows.

How are deployment strategies implemented as Workflows?

When you create a Harness Workflow, you specify the deployment strategy for the Workflow, such as canary or blue/green.

See Deployment Concepts and Strategies and Workflow Types.

Are Workflow deployments to the same infrastructure queued?

Yes. To prevent too many Workflows or Pipelines from being deployed to the same infrastructure at the same time, Harness uses Workflow queuing. See Workflow Queuing.

Harness Pipelines

What is a Harness Pipeline?

Pipelines define your release process using multiple Workflows and Approvals in sequential and/or parallel stages.

Pipelines can involve multiple Services, Environments, and Workflows. A Pipeline can be triggered either manually or using Triggers.

See Create a Pipeline.

Can I skip Pipeline stages?

Yes. You can use skip conditions to control how Pipeline stages execute.

For example, you could evaluate a branch name to determine which Stage to run. If the branch name is not master, you could skip a Workflow that deploys from the master branch.

Can I create a Pipeline template?

Yes. Pipeline templates allow you to use one Pipeline with multiple Services and Infrastructure Definitions and a single Environment.

You template a Pipeline by replacing these settings with variable expressions. Each time you run the Pipeline, you provide values for these expressions.

See Create Pipeline Templates and Onboard Teams Using GitOps.

Are Pipeline deployments to the same infrastructure queued?

Yes. To prevent too many Workflows or Pipelines from being deployed to the same infrastructure at the same time, Harness uses Workflow queuing. See Workflow Queuing.

Harness Infrastructure Provisioners

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Infrastructure Provisioner?

Harness Infrastructure Provisioners define deployment infrastructure blueprints from known Infrastructure-as-Code technologies, such as Terraform and CloudFormation, and map their output settings to provision the infrastructure. Infrastructure Provisioners enable Harness Workflows to provision infrastructure on the fly when deploying Services.

See:

- Terraform Provisioner

- Using the Terraform Apply Command

- CloudFormation Provisioner

- Shell Script Provisioner

Harness Triggers

What is a Harness Trigger?

Triggers automate deployments using a variety of conditions, such as Git events, new artifacts, schedules, and the success of other Pipelines.

To trigger Workflows and Pipelines using the Harness GraphQL API, see Trigger Workflows or Pipelines Using GraphQL API.

How can I trigger a deployment?

Harness provides multiple methods for triggering deployments.

See:

- Trigger Deployments when Pipelines Complete

- Schedule Deployments using Triggers

- Trigger Deployments using Git Events

- Trigger a Deployment using cURL

- Trigger a Deployment when a File Changes

Can I pause triggers?

Yes. You can stop all of your Harness Triggers from executing deployments using Harness Deployment Freeze.

Deployment Freeze is a Harness Governance feature that stops all Harness deployments, including their Triggers. A deployment freeze helps ensure stability during periods of low engineering and support activity, such as holidays, trade shows, or company events.

See Pause All Triggers using Deployment Freeze.

Approvals

For an overview of Harness' support for platforms, methodologies, and related technologies, see Supported Platforms and Technologies.

What is a Harness Approval?

Approvals are Workflow or Pipeline steps you can use to approve or reject deployments during execution.

What can I use for Approvals?

Harness provides the following approval options and integrations:

Harness Variables Expressions

What are Harness Variable Expressions?

Harness variable expressions are used to parameterize settings in your Harness components, create environment variables, and create templates that can be used by your team.

You can create your own variable expressions to pass information through your Pipeline, or use built-in expressions to reference most of the settings and deployment information in Harness.

See What is a Harness Variable Expression?.

Are there built-in variables?

Yes. Harness includes many built-in variables for each platform integration and common deployment components.

Where can I use variables?

Everywhere. Whenever you have a Harness field that permits variables, begin by typing ${ and the variables available to that entity are displayed.

The variables used in Harness follow a naming convention that describes where they can be used. When you select a variable, it helps to understand the naming convention, as there might be numerous variables from which to choose.

For example, an account-level variable created in Account Defaults begins with the namespace account.defaults followed by the variable name. The reference for an Account Defaults variable named productName is ${account.defaults.productName}.
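As a small illustration of the reference syntax, the expression can be placed anywhere a field or file accepts Harness variables. The sketch below assumes a values.yaml file in a Kubernetes Service; the key name on the left is arbitrary.

```yaml
# values.yaml (illustrative only)
# Harness resolves the expression at deployment time; productName is the
# Account Defaults variable from the example above.
productName: ${account.defaults.productName}
```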

See Availability and Scope of Harness Variables.

Can I pass variables into Workflows and Pipelines?

Yes. You can create variables and pass them into a Workflow or Pipeline from a Trigger.

You can also pass variables between Workflows.

See Passing Variables into Workflows and Pipelines from Triggers and Pass Variables between Workflows.

How can I override variables?

You can override many variable values in order to templatize and customize deployment settings.

These overrides have priority. For example, you can override a Service variable in an Environment and a Workflow.

See Variable Override Priority.

Can I use JSON and XML in my expressions?

Yes. Harness includes JSON and XML functors you can use to select contents from JSON and XML sources. These functors reduce the amount of shell scripting needed to pull JSON and XML information into your Harness Workflow steps.

See

See